I am testing the label spreading algorithm on a dummy dataset (based on this scikit-learn example: https://scikit-learn.org/stable/auto_examples/semi_supervised/plot_label_propagation_digits.html) before applying it to my own dataset.

    import numpy as np
    from sklearn import datasets

    digits = datasets.load_digits()
    rng = np.random.RandomState(2)

    # Shuffle the sample indices and keep the first 340 samples
    indices = np.arange(len(digits.data))
    rng.shuffle(indices)
    X = digits.data[indices[:340]]
    y = digits.target[indices[:340]]
    images = digits.images[indices[:340]]

    tot_samples = len(y)
    labeled_points = 40
    indices = np.arange(tot_samples)
    non_labeled_set = indices[labeled_points:]

    # Mark every sample after the first 40 as unlabeled (-1)
    y_train = np.copy(y)
    y_train[non_labeled_set] = -1

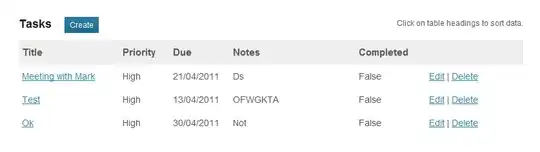

I would like to apply label propagation to an existing dataset of mine, which has the following fields:

    User1  User2  Class  Weight
    A1     B1      1     2.1
    A1     C1      1     3.3
    A2     D3     -1     2.1
    C3     C1      0     2.5
    D1     A1      1     1.3
    C3     D1     -1     2.5
    A2     A4     -1     1.5

Class is a property of User1. The nodes are A1, A2, B1, C1, C3, D1, D3 and A4, but only A1, A2, C3 and D1 have labels; the others (B1, C1, D3, A4) do not. I would like to predict their labels using the label propagation algorithm. Can someone explain how to apply the code above to my case? The challenge is handling more than two labels, although I think it should still work with multi-class data.
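To make the setup concrete, here is a rough sketch of how the edge list above could be turned into a weighted adjacency matrix and a label vector. It rests on a few assumptions that are mine and not part of the data: edges are treated as undirected, the first class seen for a User1 is kept (C3 appears with both 0 and -1 in the rows above), and the original classes are remapped to 0..K-1, because scikit-learn's semi-supervised estimators reserve -1 for "unlabeled". The names `edges`, `index`, `W`, `known` and `classes` are only illustrative.

```python
import numpy as np

# Edge list copied from the table: (User1, User2, Class of User1, Weight).
edges = [
    ("A1", "B1", 1, 2.1),
    ("A1", "C1", 1, 3.3),
    ("A2", "D3", -1, 2.1),
    ("C3", "C1", 0, 2.5),
    ("D1", "A1", 1, 1.3),
    ("C3", "D1", -1, 2.5),
    ("A2", "A4", -1, 1.5),
]

# Give every node an integer index, in order of first appearance.
nodes = []
for u, v, _, _ in edges:
    for name in (u, v):
        if name not in nodes:
            nodes.append(name)
index = {name: i for i, name in enumerate(nodes)}

# Symmetric weighted adjacency matrix (assumption: edges are undirected).
W = np.zeros((len(nodes), len(nodes)))
for u, v, _, w in edges:
    W[index[u], index[v]] = w
    W[index[v], index[u]] = w

# Known classes per User1; the first value seen wins (arbitrary assumption,
# needed because C3 is listed with two different classes).
known = {}
for u, _, c, _ in edges:
    known.setdefault(u, c)

# Remap the original classes to 0..K-1 so that -1 can mean "unlabeled".
classes = sorted(set(known.values()))
y = np.full(len(nodes), -1)
for name, c in known.items():
    y[index[name]] = classes.index(c)
```

After this, `classes[k]` maps a predicted index `k` back to the original class value, and `y` holds -1 only for B1, C1, D3 and A4.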

As I understand the algorithm, it propagates labels to neighboring unlabeled nodes according to the edge weights. This step is repeated many times until the labels on the unlabeled nodes reach an equilibrium, which is the prediction for those nodes.
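The iteration described above can be sketched in plain NumPy as follows. This is a minimal hard-clamping version, not what scikit-learn's `LabelSpreading` does internally (that estimator uses a normalized graph Laplacian with soft clamping, so its results may differ). The function name `propagate_labels`, its parameters, and the 4-node toy graph are my own assumptions for illustration.

```python
import numpy as np

def propagate_labels(W, y, n_classes, max_iter=1000, tol=1e-6):
    """Hard-clamped label propagation on a weighted graph.

    W: (n, n) symmetric weight matrix; y: class index per node, -1 = unlabeled.
    Returns the predicted class index for every node.
    """
    W = np.asarray(W, dtype=float)
    y = np.asarray(y)
    labeled = np.flatnonzero(y >= 0)

    # One-hot label distributions; unlabeled rows start at zero.
    Y0 = np.zeros((len(y), n_classes))
    Y0[labeled, y[labeled]] = 1.0
    F = Y0.copy()

    # Row-normalise the weights so each node averages over its neighbours.
    row_sums = W.sum(axis=1, keepdims=True)
    row_sums[row_sums == 0] = 1.0  # isolated nodes keep an all-zero row
    P = W / row_sums

    for _ in range(max_iter):
        F_new = P @ F
        F_new[labeled] = Y0[labeled]  # clamp the known labels
        converged = np.abs(F_new - F).max() < tol
        F = F_new
        if converged:
            break
    return F.argmax(axis=1)

# Toy chain 0 - 1 - 2 - 3: node 0 has class 0, node 3 has class 1,
# and the two middle nodes are unlabeled.
W_toy = np.array([
    [0.0, 3.0, 0.0, 0.0],
    [3.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 3.0],
    [0.0, 0.0, 3.0, 0.0],
])
y_toy = np.array([0, -1, -1, 1])
pred = propagate_labels(W_toy, y_toy, n_classes=2)
print(pred)  # each middle node takes the class of its heavier-weighted neighbour
```

An unlabeled node with no path to any labeled node keeps an all-zero row, so `argmax` falls back to class index 0 for it; such nodes would need separate handling.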

I would expect the following output:

    B1: 1
    C1: 0
    D3: -1
    A4: -1