I am new to the xgboost package in Python and have been looking online for an explanation of the F score shown on xgboost's feature importance plot.

From other sources, I understand that the feature importance plot shows "gain", which is described as follows:

> ‘Gain’ is the improvement in accuracy brought by a feature to the branches it is on. The idea is that before adding a new split on a feature X to the branch, there were some wrongly classified elements; after adding the split on this feature, there are two new branches, and each of these branches is more accurate (one branch saying that if your observation is on this branch then it should be classified as 1, and the other branch saying the exact opposite).
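For reference, the XGBoost documentation ("Introduction to Boosted Trees") defines the gain of a single split in terms of the summed gradients G and Hessians H of the loss in the left and right children, the L2 penalty λ and the split penalty γ: Gain = 1/2 [G_L²/(H_L+λ) + G_R²/(H_R+λ) − (G_L+G_R)²/(H_L+H_R+λ)] − γ. Below is a minimal sketch of that formula. The function name `split_gain` and the example numbers are hypothetical, and the value the library actually stores per split may differ from this by constant factors depending on the implementation.

```python
def split_gain(g_left, h_left, g_right, h_right, reg_lambda=1.0, gamma=0.0):
    """Loss reduction from splitting one node into two children.

    Follows the structure-score formula from the XGBoost docs; g_* and h_*
    are sums of first- and second-order gradients of the loss over the
    rows that land in each child.
    """
    def score(g, h):
        # Quality of a leaf holding gradient sum g and Hessian sum h
        return g * g / (h + reg_lambda)

    # The parent node holds the rows of both children combined
    parent = score(g_left + g_right, h_left + h_right)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right) - parent) - gamma


# Hypothetical example with squared-error loss, where each row has h = 1
print(split_gain(g_left=-4.0, h_left=2.0, g_right=6.0, h_right=3.0))
```

Because the gain is a difference of loss values, it is measured in the units of the training loss rather than in "accuracy", so its absolute size depends on the objective, the data scale and the regularization settings.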

However, I would like to know whether the exact number shown on the feature importance plot has any meaning. For instance, what does 1210.94 mean?
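According to the xgboost documentation for `Booster.get_score`, per-split gains are aggregated per feature in different ways: `"weight"` is the number of splits that use the feature across all trees, `"total_gain"` is the sum of their gains, and `"gain"` is that sum divided by the number of splits (the average). `xgboost.plot_importance` defaults to `importance_type="weight"` and labels its x-axis "F score", so it is worth checking which type a given plot was drawn with. The sketch below only illustrates the aggregation in plain Python; the `splits` list and the function name `importance_from_splits` are hypothetical and are not part of the xgboost API.

```python
from collections import defaultdict


def importance_from_splits(splits):
    """Aggregate (feature, split_gain) pairs, one per split node across all trees."""
    weight = defaultdict(int)        # number of splits using each feature
    total_gain = defaultdict(float)  # summed gain of those splits
    for feature, gain in splits:
        weight[feature] += 1
        total_gain[feature] += gain
    # Average gain per split for each feature
    avg_gain = {f: total_gain[f] / weight[f] for f in weight}
    return dict(weight), dict(total_gain), avg_gain


# Hypothetical per-split gains collected from a small ensemble
splits = [("f0", 30.0), ("f0", 10.0), ("f1", 5.0), ("f1", 5.0), ("f1", 2.0)]
weight, total_gain, avg_gain = importance_from_splits(splits)
print(weight, total_gain, avg_gain)
```

With a fitted model, the library's own values can be read with `model.get_booster().get_score(importance_type="gain")` (or `"weight"`, `"total_gain"`), and `xgboost.plot_importance(model, importance_type="gain")` plots the chosen type.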

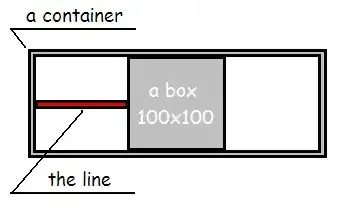

The plot above is an example of the output, for reference.

Thank you!