I have a PySpark DataFrame of 13M rows that I would like to convert to a pandas DataFrame. The pandas DataFrame will then be resampled for further analysis at various frequencies, such as 1 s, 1 min, or 10 min, depending on other parameters.
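For context, the resampling step I have in mind looks roughly like the sketch below. The data here is made up (a hypothetical `value` column sampled every 250 ms), and it assumes the timestamps have already been parsed into a `DatetimeIndex` and the values cast to a numeric type after conversion:

```python
import pandas as pd

# Hypothetical data: one reading every 250 ms for 20 minutes (4800 rows).
idx = pd.date_range("2021-01-01", periods=4800, freq="250ms")
pdf = pd.DataFrame({"value": range(4800)}, index=idx)

# Resample to each target frequency, averaging the readings in each bin.
resampled = {freq: pdf.resample(freq).mean() for freq in ("1s", "1min", "10min")}

for freq, frame in resampled.items():
    print(freq, len(frame))
```

The aggregation (`mean` here) is only a placeholder; the real one depends on the analysis.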

From the literature [1, 2], I found that either of the following settings can speed up conversion from a PySpark DataFrame to a pandas DataFrame:

spark.conf.set("spark.sql.execution.arrow.pyspark.enabled", "true")

spark.conf.set("spark.sql.execution.arrow.enabled", "true")
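To compare the two cases, the conversion can be timed with the setting switched off and on. This is a minimal sketch, not my exact code: `time_to_pandas` is a hypothetical helper, and `spark` and `sdf` stand for an existing SparkSession and Spark DataFrame. It uses the `spark.sql.execution.arrow.pyspark.enabled` key, which is the Spark 3.x name; `spark.sql.execution.arrow.enabled` is the older name for the same setting.

```python
import time

# Spark 3.x configuration key for Arrow-based toPandas() conversion.
ARROW_KEY = "spark.sql.execution.arrow.pyspark.enabled"


def time_to_pandas(spark, sdf, arrow_enabled):
    """Toggle Arrow, run one sdf.toPandas() call, return (pandas_df, seconds)."""
    spark.conf.set(ARROW_KEY, "true" if arrow_enabled else "false")
    start = time.perf_counter()
    pdf = sdf.toPandas()
    return pdf, time.perf_counter() - start


# Usage, assuming an existing SparkSession `spark` and Spark DataFrame `sdf`:
#   _, t_off = time_to_pandas(spark, sdf, arrow_enabled=False)
#   _, t_on = time_to_pandas(spark, sdf, arrow_enabled=True)
#   print(f"without Arrow: {t_off:.1f} s, with Arrow: {t_on:.1f} s")
```

Because Spark evaluates lazily, the measured time also includes whatever work is needed to compute `sdf`, so it probably makes sense to cache and materialize the DataFrame before timing if only the conversion cost is of interest.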

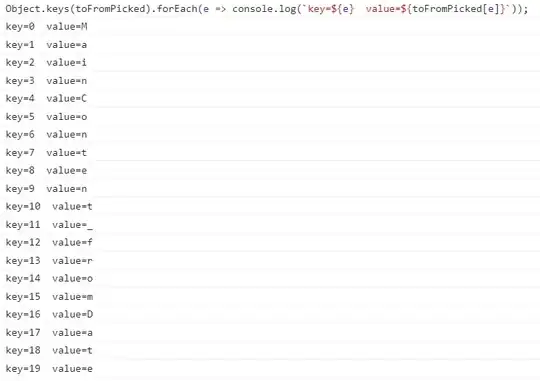

However, I have been unable to see any improvement in performance during the DataFrame conversion. All the columns are strings to ensure they are compatible with PyArrow. In the examples below, the time taken is always between 1.4 and 1.5 minutes:

I have seen an example where the processing time dropped from seconds to milliseconds [3]. I would like to know what I am doing wrong and how to optimize the code further. Thank you.