I have this convolutional autoencoder:

```python
from tensorflow.keras import layers, Model

inputs = layers.Input(shape=(384, 128, 3))

# encoder
x = layers.Conv2D(8, (3, 3), activation=layers.LeakyReLU(alpha=0.1), padding="same")(inputs)
x = layers.MaxPooling2D((2, 2), padding="same")(x)
x = layers.Conv2D(16, (3, 3), activation=layers.LeakyReLU(alpha=0.1), padding="same")(x)
x = layers.MaxPooling2D((2, 2), padding="same")(x)
x = layers.Conv2D(32, (3, 3), activation=layers.LeakyReLU(alpha=0.1), padding="same")(x)
x = layers.MaxPooling2D((2, 2), padding="same")(x)
x = layers.Conv2D(64, (3, 3), activation=layers.LeakyReLU(alpha=0.1), padding="same")(x)
x = layers.MaxPooling2D((2, 2), padding="same")(x)

# decoder: each strided transpose convolution doubles height and width
x = layers.Conv2DTranspose(64, (3, 3), strides=2, activation=layers.LeakyReLU(alpha=0.1), padding="same")(x)
x = layers.Conv2DTranspose(32, (3, 3), strides=2, activation=layers.LeakyReLU(alpha=0.1), padding="same")(x)
x = layers.Conv2DTranspose(16, (3, 3), strides=2, activation=layers.LeakyReLU(alpha=0.1), padding="same")(x)
x = layers.Conv2DTranspose(8, (3, 3), strides=2, activation=layers.LeakyReLU(alpha=0.1), padding="same")(x)
x = layers.Conv2D(3, (3, 3), activation="sigmoid", padding="same")(x)

# autoencoder
autoencoder = Model(inputs, x)
autoencoder.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
autoencoder.summary()
```

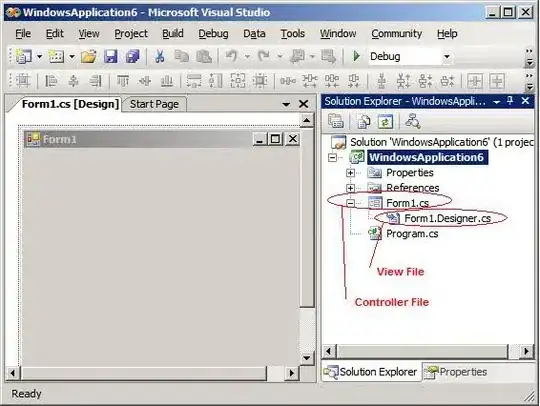
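To see how much the encoder squeezes the image, the spatial shapes can be traced by hand. A minimal sketch in plain Python, assuming Keras's `padding="same"` rules: `MaxPooling2D` outputs `ceil(size / 2)` and a stride-2 `Conv2DTranspose` outputs `size * 2`. The helper names are hypothetical, for illustration only.

```python
import math

def same_pool(size, stride=2):
    # MaxPooling2D with padding="same" outputs ceil(size / stride)
    return math.ceil(size / stride)

def same_transpose(size, stride=2):
    # Conv2DTranspose with padding="same" outputs size * stride
    return size * stride

h, w, c = 384, 128, 3
input_values = h * w * c

# Encoder: Conv2D(padding="same") keeps H and W, MaxPooling2D halves them
for filters in [8, 16, 32, 64]:
    h, w, c = same_pool(h), same_pool(w), filters
bottleneck = (h, w, c)
bottleneck_values = h * w * c
print("bottleneck shape:", bottleneck, "values:", bottleneck_values)

# Decoder: each stride-2 Conv2DTranspose doubles H and W
for filters in [64, 32, 16, 8]:
    h, w, c = same_transpose(h), same_transpose(w), filters
print("decoder output before the final Conv2D:", (h, w, c))
print("compression ratio:", input_values / bottleneck_values)
```

Under these assumptions the 384×128×3 input is reduced to a 24×8×64 bottleneck, i.e. 12 times fewer values than the image, which by itself would make some loss of fine detail unsurprising.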

I have the following image:

I am trying to overfit my model on this single image, but I cannot get the loss below ~0.42, and the accuracy stays around ~0.79. The reconstruction is fairly decent (the image looks more or less the same, but blurry and without fine details), yet the loss stops falling and the accuracy stops rising. I have tried a few things (data preprocessing, augmentation, Leaky ReLU instead of plain ReLU), but not a larger model (training would take a long time, and I am not sure that is the solution).
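One thing worth checking with `loss="binary_crossentropy"`: if the target pixels are scaled to [0, 1] but are not strictly 0 or 1, binary cross-entropy does not reach zero even when the prediction equals the target; its minimum is the mean binary entropy of the target pixels. A minimal NumPy sketch, assuming the image is normalized to [0, 1]; the random array is a hypothetical stand-in for the real image, and the clipping constant is chosen to resemble Keras's default epsilon of `1e-7`.

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    # Mean per-value binary cross-entropy; predictions clipped away from 0 and 1
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))))

rng = np.random.default_rng(0)
# Hypothetical stand-in for a normalized image: values anywhere in [0, 1)
image = rng.uniform(0.0, 1.0, size=(384, 128, 3))

# Even a perfect reconstruction (prediction == target) leaves a non-zero loss
perfect_loss = binary_crossentropy(image, image)

# A strictly binary image reconstructed perfectly gives a loss close to 0
binary_image = (image > 0.5).astype(float)
binary_loss = binary_crossentropy(binary_image, binary_image)

print(f"BCE of a perfect reconstruction, continuous targets: {perfect_loss:.3f}")
print(f"BCE of a perfect reconstruction, binary targets:     {binary_loss:.2e}")
```

The floor depends on the actual pixel distribution, so calling `binary_crossentropy(image, image)` on the real normalized image would show how much of the ~0.42 is unavoidable. A loss such as mean squared error, by contrast, is exactly zero for a perfect reconstruction.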

How can I overfit this model, i.e. lower the loss and increase the accuracy? Is this image too much for this model? (I still lack intuition for how large a model should be for a specific type of data.)
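For a rough sense of model size, the trainable parameters can be counted by hand and compared with the number of values the model has to reproduce. A minimal sketch, assuming each `Conv2D` and `Conv2DTranspose` layer has `kernel * kernel * c_in * c_out` weights plus `c_out` biases, and that pooling layers and the `LeakyReLU` activations add no parameters; `conv_params` and `layer_spec` are hypothetical names.

```python
def conv_params(kernel, c_in, c_out):
    # Weights of a k x k convolution (or transpose convolution) plus one bias per filter
    return kernel * kernel * c_in * c_out + c_out

# (layer type, input channels, output channels) for the model in the question
layer_spec = [
    ("Conv2D", 3, 8), ("Conv2D", 8, 16), ("Conv2D", 16, 32), ("Conv2D", 32, 64),
    ("Conv2DTranspose", 64, 64), ("Conv2DTranspose", 64, 32),
    ("Conv2DTranspose", 32, 16), ("Conv2DTranspose", 16, 8),
    ("Conv2D", 8, 3),
]

total = 0
for name, c_in, c_out in layer_spec:
    p = conv_params(3, c_in, c_out)
    total += p
    print(f"{name:16s} {c_in:>3d} -> {c_out:<3d} params: {p}")

target_values = 384 * 128 * 3
print("total trainable parameters:", total)
print("pixel values to reproduce:", target_values)
print("values per parameter:", round(target_values / total, 2))
```

Under these assumptions the model has 85,923 parameters for 147,456 target values. This is only a rough yardstick, not a rule: convolutional weights are shared across positions, so raw parameter count alone does not determine how much detail a model can memorize.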