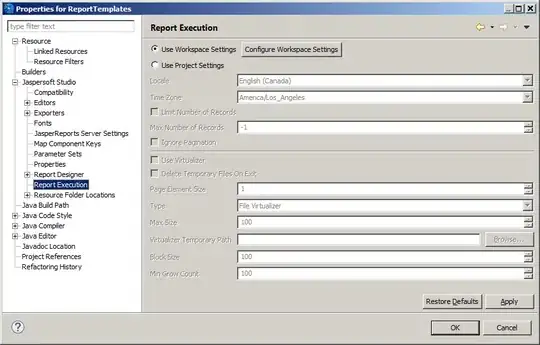

I want to calibrate a camera so that I can measure real-world distances between features in an image. I'm using the OpenCV demo images for this example. I'd like to know whether what I'm doing is valid and whether there is a better way to go about it. I could calibrate my camera with a checkerboard of known pitch, but in this example the camera pose is as shown in the image below, so I can't simply calculate px/mm to make my real-world distance measurements. I need to correct for the pose first.

I find the chessboard corners and calibrate the camera with a call to OpenCV's calibrateCamera, which gives the intrinsics, extrinsics and distortion parameters.

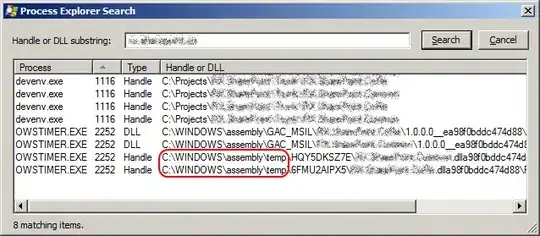

Now I want to be able to make measurements with the camera in this same pose. As an example, I'll try to measure between two corners in the image above. I want to apply a perspective correction to the image so that I effectively get a bird's-eye view. I do this using the method described here. The idea is that the camera rotation that gives a bird's-eye view of this image is a rotation about the z-axis. Below is the rotation matrix.
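For reference, the matrix used as R_desired in the code further down is a rotation of -90 degrees about the z-axis. Whether -90 degrees is the right angle depends on how the frame axes are oriented, so treat the value as specific to this image:

$$
R_z(\theta) =
\begin{pmatrix}
\cos\theta & -\sin\theta & 0 \\
\sin\theta & \cos\theta & 0 \\
0 & 0 & 1
\end{pmatrix},
\qquad
R_{\text{desired}} = R_z(-90^\circ) =
\begin{pmatrix}
0 & 1 & 0 \\
-1 & 0 & 0 \\
0 & 0 & 1
\end{pmatrix}
$$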

Then, following this OpenCV homography example, I calculate the homography between the original view and my desired bird's-eye view. If I apply it to the image above I get an image that looks good, see below. Now that I have my perspective-rectified image, I find the corners again with findChessboardCorners and calculate an average pixel distance between corners of ~36 pixels. If the distance between corners in real-world units is 25 mm, my scaling is 36/25 = 1.44 px/mm. Now, for any image taken with the camera in this pose, I can rectify the image and use this pixel scaling to measure distances between objects in the image.

Is there a better way to allow for real-world distance measurements here? Is it possible to do this perspective correction for pixels only? For example, if I find the pixel locations of two corners in the original image, can I apply the rectification to just those pixel coordinates rather than to the entire image, which can be computationally expensive?

Just trying to deepen my understanding. Thanks
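To make the last question concrete, here is a minimal sketch of what I mean by rectifying only the pixel coordinates. It is plain C++ with no OpenCV dependency, and the names Point2, Homography, applyHomography and distanceMm are made up for illustration. It assumes the points are already undistorted and that H is the same 3x3 matrix passed to warpPerspective. As far as I can tell, cv::perspectiveTransform performs the same per-point mapping on a vector of Point2f.

```cpp
#include <array>
#include <cmath>

// A 2D point in pixel coordinates (hypothetical helper type).
struct Point2 {
    double x;
    double y;
};

// Row-major 3x3 homography (hypothetical alias).
using Homography = std::array<double, 9>;

// Map one pixel through H: multiply in homogeneous coordinates,
// then divide by the third component w.
Point2 applyHomography(const Homography& H, const Point2& p) {
    const double x = H[0] * p.x + H[1] * p.y + H[2];
    const double y = H[3] * p.x + H[4] * p.y + H[5];
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    return {x / w, y / w};
}

// Distance in mm between two pixels of the original (undistorted) image:
// rectify both points, measure in rectified pixels, convert with px/mm.
double distanceMm(const Homography& H, const Point2& a, const Point2& b,
                  double pxPerMm) {
    const Point2 ra = applyHomography(H, a);
    const Point2 rb = applyHomography(H, b);
    return std::hypot(ra.x - rb.x, ra.y - rb.y) / pxPerMm;
}
```

For example, with a placeholder H that only scales by 2 (not my real homography), the points (10, 10) and (28, 10) land 36 px apart, which at 36/25 = 1.44 px/mm comes out at 25 mm.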

Some of my code:

    void MyCalibrateCamera(float squareSize, const std::string& imgPath, const Size& patternSize)
    {
        Mat img = imread(samples::findFile(imgPath));
        Mat img_corners = img.clone(), img_pose = img.clone();

        //! [find-chessboard-corners]
        vector<Point2f> corners;
        bool found = findChessboardCorners(img, patternSize, corners);
        //! [find-chessboard-corners]

        if (!found)
        {
            cout << "Cannot find chessboard corners." << endl;
            return;
        }

        drawChessboardCorners(img_corners, patternSize, corners, found);
        imshow("Chessboard corners detection", img_corners);
        waitKey();

        //! [compute-object-points]
        vector<Point3f> objectPoints;
        calcChessboardCorners(patternSize, squareSize, objectPoints);
        vector<Point2f> objectPointsPlanar;

        vector<vector<Point3f>> objectPointsArray;
        vector<vector<Point2f>> imagePointsArray;
        imagePointsArray.push_back(corners);
        objectPointsArray.push_back(objectPoints);

        Mat intrinsics;
        Mat distortion;
        vector<Mat> rotation;
        vector<Mat> translation;

        double RMSError = calibrateCamera(
            objectPointsArray,
            imagePointsArray,
            img.size(),
            intrinsics,
            distortion,
            rotation,
            translation,
            CALIB_ZERO_TANGENT_DIST |
            CALIB_FIX_K3 | CALIB_FIX_K4 | CALIB_FIX_K5 |
            CALIB_FIX_ASPECT_RATIO);

        cout << "intrinsics: " << intrinsics << endl;
        cout << "rotation: " << rotation.at(0) << endl;
        cout << "translation: " << translation.at(0) << endl;
        cout << "distortion: " << distortion << endl;

        drawFrameAxes(img_pose, intrinsics, distortion, rotation.at(0), translation.at(0), 2 * squareSize);
        imshow("FrameAxes", img_pose);
        waitKey();

        // todo: is this a valid px/mm measure?
        float px_to_mm = intrinsics.at<double>(0, 0) / (translation.at(0).at<double>(2, 0) * 1000);

        // undistort the image
        Mat imgUndistorted, map1, map2;
        initUndistortRectifyMap(
            intrinsics,
            distortion,
            Mat(),
            getOptimalNewCameraMatrix(
                intrinsics,
                distortion,
                img.size(),
                1,
                img.size(),
                0),
            img.size(),
            CV_16SC2,
            map1,
            map2);

        remap(
            img,
            imgUndistorted,
            map1,
            map2,
            INTER_LINEAR);

        imshow("OrgImg", img);
        waitKey();
        imshow("UndistortedImg", imgUndistorted);
        waitKey();

        Mat img_bird_eye_view = img.clone();

        // rectify
        // https://docs.opencv.org/3.4.0/d9/dab/tutorial_homography.html#tutorial_homography_Demo3
        // https://stackoverflow.com/questions/48576087/birds-eye-view-perspective-transformation-from-camera-calibration-opencv-python

        // Get to a bird's-eye view, or -90 degrees z rotation
        Mat rvec = rotation.at(0);
        Mat tvec = translation.at(0);

        // -90 degrees z. Required depends on the frame axes.
        Mat R_desired = (Mat_<double>(3, 3) <<
            0, 1, 0,
            -1, 0, 0,
            0, 0, 1);

        // Get 3x3 rotation matrix from rotation vector
        Mat R;
        Rodrigues(rvec, R);

        // compute the normal to the camera frame
        Mat normal = (Mat_<double>(3, 1) << 0, 0, 1);
        Mat normal1 = R * normal;

        // compute d, the distance: dot product between the normal and a point on the plane
        Mat origin(3, 1, CV_64F, Scalar(0));
        Mat origin1 = R * origin + tvec;
        double d_inv1 = 1.0 / normal1.dot(origin1);

        // compute the homography to go from the camera view to the desired view
        Mat R_1to2, tvec_1to2;
        Mat tvec_desired = tvec.clone();

        // get the displacement between our views
        computeC2MC1(R, tvec, R_desired, tvec_desired, R_1to2, tvec_1to2);

        // now calculate the euclidean homography
        Mat H = R_1to2 + d_inv1 * tvec_1to2 * normal1.t();

        // now the projective homography
        H = intrinsics * H * intrinsics.inv();
        H = H / H.at<double>(2, 2);
        std::cout << "H:\n" << H << std::endl;

        Mat imgToWarp = imgUndistorted.clone();
        warpPerspective(imgToWarp, img_bird_eye_view, H, img.size());

        Mat compare;
        hconcat(imgToWarp, img_bird_eye_view, compare);
        imshow("Bird eye view", compare);
        waitKey();

        ...
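
In equation form, the homography assembled in the code above is, as I understand it, the following. Here $K$ is intrinsics, $n_1$ is normal1, $d$ is normal1.dot(origin1), and $R_{1\to2}$, $t_{1\to2}$ are the outputs of computeC2MC1, which is not shown here and which I am assuming is the helper from the OpenCV homography tutorial:

$$
H_{\text{euclidean}} = R_{1\to2} + \frac{1}{d}\, t_{1\to2}\, n_1^{T},
\qquad
H = K \, H_{\text{euclidean}} \, K^{-1},
\qquad
H \leftarrow \frac{H}{H_{33}}
$$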