I am new to Python. I am trying to practice basic regularization by following along with a DataCamp exercise using this CSV: https://assets.datacamp.com/production/repositories/628/datasets/a7e65287ebb197b1267b5042955f27502ec65f31/gm_2008_region.csv

# Import numpy and pandas

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

# Read the CSV file into a DataFrame: df

df = pd.read_csv('gm_2008_region.csv')

# Create arrays for features and target variable

X = df.drop(['life','Region'], axis=1)

y = df['life'].values.reshape(-1,1)

df_columns = df.drop(['life','Region'], axis=1).columns

The code that I use for the DataCamp exercise is as follows:

# Import Lasso

from sklearn.linear_model import Lasso

# Instantiate a lasso regressor: lasso

lasso = Lasso(alpha=0.4, normalize=True)

# Fit the regressor to the data

lasso.fit(X, y)

# Compute and print the coefficients

lasso_coef = lasso.coef_

print(lasso_coef)

# Plot the coefficients

plt.plot(range(len(df_columns)), lasso_coef)

plt.xticks(range(len(df_columns)), df_columns.values, rotation=60)

plt.margins(0.02)

plt.show()

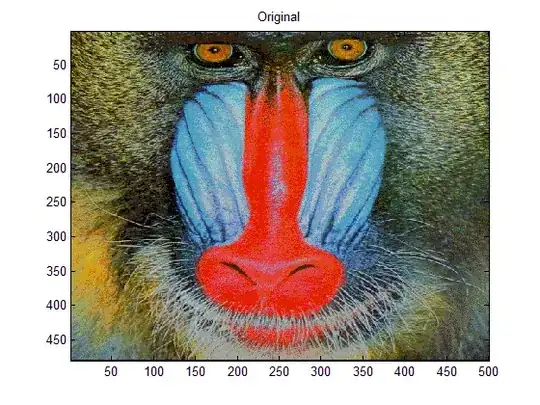

I get the output above, indicating that child_mortality is the most important feature in predicting life expectancy, but this code also results in a deprecation warning due to the use of "normalize."

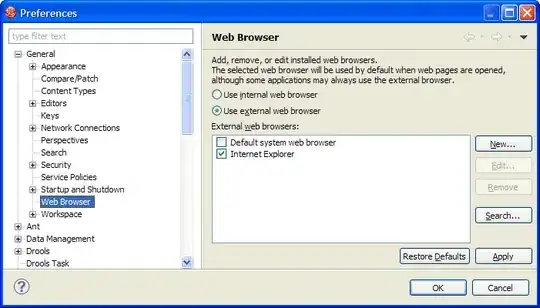

I'd like to update this code using the current best practice. I have tried the following, but I get a different output. I am hoping someone can help identify what I need to modify in the updated code in order to produce the same output.

# Modified based on https://scikit-learn.org/stable/modules/preprocessing.html#preprocessing-scaler

# and https://stackoverflow.com/questions/28822756/getting-model-attributes-from-pipeline

# Import Lasso

from sklearn.linear_model import Lasso

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

# Instantiate a lasso regressor: lasso

#lasso = Lasso(alpha=0.4, normalize=True)

pipe = Pipeline(steps=[

('scaler',StandardScaler()),

('lasso',Lasso(alpha=0.4))

])

# Fit the regressor to the data

#lasso.fit(X, y)

pipe.fit(X, y)

# Compute and print the coefficients

#lasso_coef = lasso.coef_

#print(lasso_coef)

lasso_coef = pipe.named_steps['lasso'].coef_

print(lasso_coef)

# Plot the coefficients

plt.plot(range(len(df_columns)), lasso_coef)

plt.xticks(range(len(df_columns)), df_columns.values, rotation=60)

plt.margins(0.02)

plt.show()

As you can see, I draw the same conclusion, but I'd be more comfortable that I was doing this correctly if the output images were more similar. What am I doing wrong with the Pipeline?