I am using Hive through the Hue editor, and I am trying out different column combinations to choose my partition key(s).

The definition of my original table is as follows:

CREATE EXTERNAL TABLE `my_hive_db`.`my_table`(
  `col_id` bigint,
  `result_section__col2` string,
  `result_section_col3` string,
  `result_section_col4` string,
  `result_section_col5` string,
  `result_section_col6__label` string,
  `result_section_col7__label_id` bigint,
  `result_section_text` string,
  `result_section_unit` string,
  `result_section_col` string,
  `result_section_title` string,
  `result_section_title_id` bigint,
  `col13` string,
  `timestamp` bigint,
  `date_day` string
)
PARTITIONED BY (
  `date_year` string,
  `date_month` string)
ROW FORMAT SERDE
  'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'
STORED AS INPUTFORMAT
  'org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat'
OUTPUTFORMAT
  'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'
LOCATION
  's3a://some/where/in/amazon/s3';

The table above works properly. But when I create a new table with date_day as the partition key, the table is empty and I need to run MSCK REPAIR TABLE. However, I am getting the following error:

Error while compiling statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.ddl.DDLTask

When the partition keys were date_year and date_month, MSCK worked properly.
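For reference, as far as I understand it, MSCK REPAIR TABLE registers directories under the table's LOCATION whose names follow the `key=value` pattern of the declared partition columns. The statements below are a small sketch for inspecting what the working table was registered with; they assume the year/month table still exists under the name shown.

```sql
-- List the partitions registered in the metastore for the table
-- partitioned by (date_year, date_month). Each row has the form
-- date_year=<value>/date_month=<value>.
SHOW PARTITIONS `my_hive_db`.`my_table`;

-- Show the table's storage location and its partition columns.
DESCRIBE FORMATTED `my_hive_db`.`my_table`;
```

If the directories at the S3 location are named after date_year and date_month, a table declared with only date_day as its partition column would be looking for a different directory layout.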

The definition of the table that produces the error is as follows:

CREATE EXTERNAL TABLE `my_hive_db`.`my_table`(
  `col_id` bigint,
  `result_section__col2` string,
  `result_section_col3` string,
  `result_section_col4` string,
  `result_section_col5` string,
  `result_section_col6__label` string,
  `result_section_col7__label_id` bigint,
  `result_section_text` string,
  `result_section_unit` string,
  `result_section_col` string,
  `result_section_title` string,
  `result_section_title_id` bigint,
  `col13` string,
  `timestamp` bigint,
  `date_year` string,
  `date_month` string
)
PARTITIONED BY (
  `date_day` string)
ROW FORMAT SERDE
  'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'
STORED AS INPUTFORMAT
  'org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat'
OUTPUTFORMAT
  'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'
LOCATION
  's3a://some/where/in/amazon/s3';

After this, the following query returns no rows:

Select * From `my_hive_db`.`my_table` Limit 10;

I therefore ran the following command:

MSCK REPAIR TABLE `my_hive_db`.`my_table`;

And I get the error: Error while compiling statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.ddl.DDLTask

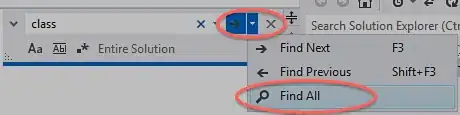

I checked this link, which describes exactly the error I am getting, but when I use the solution provided there:

set hive.msck.path.validation=ignore;
MSCK REPAIR TABLE table_name;

I get a different error:

Error while processing statement: Cannot modify hive.msck.path.validation at runtime. It is not in list of params that are allowed to be modified at runtime.
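For reference, one route that does not depend on MSCK or on changing hive.msck.path.validation is to register partitions explicitly with ALTER TABLE ... ADD PARTITION. The sketch below is only an illustration: the date_day value `'01'` and the S3 sub-path are hypothetical placeholders, and it assumes the Parquet files for that day already sit in a directory of their own, which may not be true for data laid out by year and month.

```sql
-- Hypothetical value and path: replace '01' and the LOCATION with a real
-- date_day value and the directory that actually holds that day's files.
ALTER TABLE `my_hive_db`.`my_table` ADD IF NOT EXISTS
  PARTITION (date_day = '01')
  LOCATION 's3a://some/where/in/amazon/s3/date_day=01';
```

Each partition needs its own statement (or its own PARTITION ... LOCATION clause in the same statement), so this is only practical for a small number of partitions such as the 32 wanted here.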

I think the reason I am getting these errors is that more than 200 million records have a null date_day.

There are 31 distinct non-null date_day values. I would like to partition my table into 32 partitions: one for each distinct value of date_day, plus one that holds all the null values. Is there a way to do this (partitioning by a column that contains null values)?

If this can be achieved with Spark, I am also open to using it.
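For reference, in Hive itself the usual way to get this layout is a dynamic-partition insert that rewrites the data, rather than pointing a new table at the existing files. The sketch below rests on several assumptions: the original year/month table is kept as `my_hive_db`.`my_table`, the new table partitioned by date_day is created under the hypothetical name `my_hive_db`.`my_table_by_day` with its own LOCATION, and the two SET statements are allowed at runtime on the cluster. With Hive's default settings, rows whose dynamic partition value is null are written to a partition named `__HIVE_DEFAULT_PARTITION__`.

```sql
-- Assumption: these settings may be modified at runtime on this cluster.
SET hive.exec.dynamic.partition=true;
SET hive.exec.dynamic.partition.mode=nonstrict;

-- my_table_by_day is a hypothetical name for the new table partitioned by
-- date_day. The dynamic partition column must come last in the SELECT list.
INSERT OVERWRITE TABLE `my_hive_db`.`my_table_by_day` PARTITION (`date_day`)
SELECT
  `col_id`,
  `result_section__col2`,
  `result_section_col3`,
  `result_section_col4`,
  `result_section_col5`,
  `result_section_col6__label`,
  `result_section_col7__label_id`,
  `result_section_text`,
  `result_section_unit`,
  `result_section_col`,
  `result_section_title`,
  `result_section_title_id`,
  `col13`,
  `timestamp`,
  `date_year`,
  `date_month`,
  `date_day`
FROM `my_hive_db`.`my_table`;
```

Because Hive creates and registers the partitions as part of the insert, no MSCK REPAIR TABLE should be needed afterwards for the new table.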

This is part of a bigger problem: changing partition keys by recreating a table, as described in this link in an answer to my other question.

Thank you for your help.