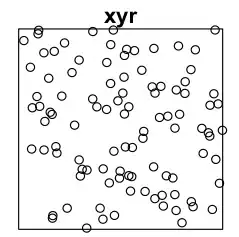

For a university project I need to compare two images I have taken and find the differences between them.

To be precise, I am monitoring a 3D printing process and take a picture after each printed layer. Afterwards, I need to find the outline of the newly printed part. The pictures look like this (left: layer X, right: layer X+1):

I have managed to extract the differences between the layers using the structural similarity index (SSIM) from scikit-image, as described in this question. This results in the following image:

The detected differences match the printed layer nearly 1:1 and seem to be a good starting point for drawing the contours. However, this is where I am currently stuck. I have tried several combinations of thresholding, blurring, findContours, Sobel and Canny operations, but I am unable to produce an accurate outline of the newly printed layer.

Edit:

This is what I am looking for:

Edit2: I have uploaded the images in the original file size and format here:

Layer X, Layer X+1, Difference between the layers

Are there any operations that I have not tried yet or do not know about? Or is there a combination of operations that could help in my case?

Any help on how to solve this problem would be greatly appreciated!