I am profiling a test code presented in the Unified Memory for CUDA Beginners on NVIDIA's developer forum.

Code:

#include <iostream>

#include <math.h>

// CUDA kernel to add elements of two arrays

__global__

void add(int n, float* x, float* y)

{

int index = blockIdx.x * blockDim.x + threadIdx.x;

int stride = blockDim.x * gridDim.x;

for (int i = index; i < n; i += stride)

y[i] = x[i] + y[i];

}

int main(void)

{

int N = 1 << 20;

float* x, * y;

// Allocate Unified Memory -- accessible from CPU or GPU

cudaMallocManaged(&x, N * sizeof(float));

cudaMallocManaged(&y, N * sizeof(float));

// initialize x and y arrays on the host

for (int i = 0; i < N; i++) {

x[i] = 1.0f;

y[i] = 2.0f;

}

// Launch kernel on 1M elements on the GPU

int blockSize = 256;

int numBlocks = (N + blockSize - 1) / blockSize;

add << <numBlocks, blockSize >> > (N, x, y);

// Wait for GPU to finish before accessing on host

cudaDeviceSynchronize();

// Check for errors (all values should be 3.0f)

float maxError = 0.0f;

for (int i = 0; i < N; i++)

maxError = fmax(maxError, fabs(y[i] - 3.0f));

std::cout << "Max error: " << maxError << std::endl;

// Free memory

cudaFree(x);

cudaFree(y);

return 0;

}

QUESTION: The results of the profiling presented by the author shows information about "Page Faults" but when I run the nvprof and nvvp profilers, I do not get any information about page faults. Is there any flag or something that needs to be explicitly set to get that information?

My nvprof output:

== 20160 == Profiling result :

Type Time(%) Time Calls Avg Min Max Name

GPU activities : 100.00 % 60.513us 1 60.513us 60.513us 60.513us add(int, float*, float*)

API calls : 81.81 % 348.14ms 2 174.07ms 1.5933ms 346.54ms cudaMallocManaged

16.10 % 68.511ms 1 68.511ms 68.511ms 68.511ms cuDevicePrimaryCtxRelease

1.34 % 5.7002ms 1 5.7002ms 5.7002ms 5.7002ms cudaLaunchKernel

0.66 % 2.8192ms 2 1.4096ms 1.0669ms 1.7523ms cudaFree

0.07 % 277.80us 1 277.80us 277.80us 277.80us cudaDeviceSynchronize

0.01 % 33.500us 3 11.166us 3.5000us 16.400us cuModuleUnload

0.00 % 19.800us 1 19.800us 19.800us 19.800us cuDeviceTotalMem

0.00 % 16.700us 101 165ns 100ns 900ns cuDeviceGetAttribute

0.00 % 9.2000us 3 3.0660us 200ns 8.2000us cuDeviceGetCount

0.00 % 3.1000us 1 3.1000us 3.1000us 3.1000us cuDeviceGetName

0.00 % 2.1000us 2 1.0500us 300ns 1.8000us cuDeviceGet

0.00 % 300ns 1 300ns 300ns 300ns cuDeviceGetLuid

0.00 % 200ns 1 200ns 200ns 200ns cuDeviceGetUuid

== 20160 == Unified Memory profiling result :

Device "GeForce GTX 1070 (0)"

Count Avg Size Min Size Max Size Total Size Total Time Name

64 128.00KB 128.00KB 128.00KB 8.000000MB 3.217900ms Host To Device

146 84.164KB 32.000KB 1.0000MB 12.00000MB 68.17800ms Device To Host

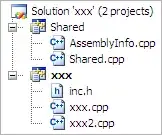

My nvvp Profiling Result: