I am trying to extract the user ID, rating and review text from the following site using Selenium, and it throws an "invalid selector" error. I think the XPath I defined to get the review text is causing the error, but I have not been able to resolve it. The site is https://www.consumeraffairs.com/automotive/tesla_motors.html.

This is the code I am using:

    import pandas as pd
    from selenium import webdriver


    # Class for review web scraping from the consumeraffairs.com site
    class CarForumCrawler():

        def __init__(self, start_link):
            self.link_to_explore = start_link
            self.comments = pd.DataFrame(columns=['rating', 'user_id', 'comments'])
            self.driver = webdriver.Chrome(executable_path=r'C:/Users/mumid/Downloads/chromedriver/chromedriver.exe')
            self.driver.get(self.link_to_explore)
            self.driver.implicitly_wait(5)
            self.extract_data()
            self.save_data_to_file()

        def extract_data(self):
            ids = self.driver.find_elements_by_xpath("//*[contains(@id,'review-')]")
            comment_ids = []
            for i in ids:
                comment_ids.append(i.get_attribute('id'))

            for x in comment_ids:
                # Extract the rating for each user on a page
                user_rating = self.driver.find_elements_by_xpath('//*[@id="' + x + '"]/div[1]/div/img')[0]
                rating = user_rating.get_attribute('data-rating')

                # Extract the user ID for each user on a page
                userid_element = self.driver.find_elements_by_xpath('//*[@id="' + x + '"]/div[2]/div[2]/strong')[0]
                userid = userid_element.get_attribute('itemprop')

                # Extract the message for each user on a page
                # (this is the XPath I suspect raises the "invalid selector" error)
                user_message = self.driver.find_elements_by_xpath('//*[@id="' + x + '"]]/div[3]/p[2]/text()')[0]
                comment = user_message.text

                # Add the rating, user ID and comment for each user to the DataFrame
                self.comments.loc[len(self.comments)] = [rating, userid, comment]

        def save_data_to_file(self):
            # Save the DataFrame content to a CSV file
            self.comments.to_csv('Tesla_rating-6.csv', index=None, header=True)

        def close_spider(self):
            # End the session
            self.driver.quit()


    try:
        url = 'https://www.consumeraffairs.com/automotive/tesla_motors.html'
        mycrawler = CarForumCrawler(url)
        mycrawler.close_spider()
    except:
        raise
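For comparison, here is a minimal sketch of the same extraction loop written in the Selenium 4 locator style, `find_elements(by, value)` / `find_element(by, value)`; the `find_elements_by_xpath` helpers used above were removed in Selenium 4.3. Instead of rebuilding an absolute XPath from each id by string concatenation, it evaluates `./...` paths relative to each review element, and every path ends on an element whose text is then read with `.text`. Assumptions: the child layout (div[1] rating, div[2] user, div[3] text) is taken from the XPaths above and may not match the live page, the user name is assumed to be the visible text of the `<strong>` element (the code above reads its `itemprop` attribute instead), and `extract_reviews` is a hypothetical name.

```python
# The string value of selenium.webdriver.common.by.By.XPATH, used directly
# so this sketch has no import-time dependency on Selenium.
XPATH = "xpath"


def extract_reviews(driver):
    """Return one dict per review block found on the current page.

    Hypothetical helper: assumes each review container's id contains
    "review-" and uses the child layout from the question's XPaths.
    """
    rows = []
    for review in driver.find_elements(XPATH, "//*[contains(@id,'review-')]"):
        # A leading "./" makes each path relative to the current review element.
        img = review.find_element(XPATH, "./div[1]/div/img")
        user = review.find_element(XPATH, "./div[2]/div[2]/strong")
        # Select the <p> element itself (no /text()); its text is read below.
        message = review.find_element(XPATH, "./div[3]/p[2]")
        rows.append({
            "rating": img.get_attribute("data-rating"),
            "user_id": user.text,  # assumption: visible text, not @itemprop
            "comments": message.text,
        })
    return rows
```

The returned list can be passed straight to pandas as `pd.DataFrame(extract_reviews(driver))`, which avoids growing a DataFrame one row at a time with `.loc`.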

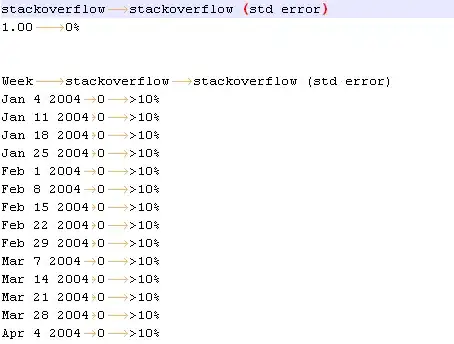

The error I am getting is as follows:

Also, the XPath I tried to build is based on the following HTML:
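Two things in the review-text XPath would each trigger this error: the extra `]` after the id predicate (`"]]/div[3]`), which makes the expression syntactically invalid, and the trailing `/text()`. Selenium's `find_element(s)` methods must resolve to elements, so an XPath that selects a text node is reported as an invalid selector; the text is read from the element with `.text` instead. The sketch below builds the three per-review XPaths without those two issues and checks them with Python's standard `xml.etree.ElementTree`. The `SAMPLE` markup is an assumption that only mirrors the element positions the question's XPaths expect, and `review_xpaths` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET


def review_xpaths(review_id):
    """Hypothetical helper: per-review XPath locators.

    Each expression ends on an element, not on text(), because Selenium's
    find_element(s) methods can only return elements.
    """
    base = '//*[@id="' + review_id + '"]'
    return {
        "rating": base + "/div[1]/div/img",
        "user": base + "/div[2]/div[2]/strong",
        "comment": base + "/div[3]/p[2]",  # no stray "]" and no "/text()"
    }


# Assumed markup mirroring the element positions used in the question;
# the real page's HTML may differ.
SAMPLE = """
<body>
  <div id="review-1">
    <div><div><img data-rating="5"/></div></div>
    <div><div/><div><strong>Alice</strong></div></div>
    <div><p>Date</p><p>Great car.</p></div>
  </div>
</body>
"""

if __name__ == "__main__":
    root = ET.fromstring(SAMPLE.strip())
    paths = review_xpaths("review-1")
    # ElementTree rejects absolute paths on an element, so prefix "." here.
    comment = root.find("." + paths["comment"])
    print(comment.text)  # Great car.
```

Only the leading `.` differs from what Selenium would receive; with a live driver the same string would be used as `driver.find_element(By.XPATH, paths["comment"]).text`.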