Scenario

I'm using Unity (C#) to recreate a Google Earth-like experience as a project. New tiles are loaded asynchronously from the web while the user pans the camera around the globe. So far I'm able to load all the TMS tiles based on their x and y coordinates and zoom level. Currently I'm using each tile's x,y to work out where it should appear on my earth "sphere", and it's becoming quite tedious, I assume because of the differences between Euler angles and quaternions.

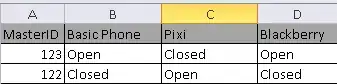

- I'm using the angle of `Camera.main` to figure out which tiles should be visible at any moment (this seems to be working fine).

- I have to load / unload tiles for memory management, as level 10 alone can have over 1 million 512x512 tiles.

- I'm trying to turn a downloaded tile's x,y coordinates (2D) into a 3D position and rotation.
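For scale, here is the arithmetic behind the "over 1 million tiles" figure as a small C# sketch. It assumes a square quadtree pyramid (2^zoom tiles per axis, as in the common TMS/XYZ global-mercator profile) and uncompressed RGBA32 textures at 4 bytes per pixel; compressed texture formats would be smaller. `TileBudget` and its methods are hypothetical names.

```csharp
public static class TileBudget
{
    // Total tiles at one zoom level of a square quadtree pyramid:
    // 2^zoom per axis, so (2^zoom)^2 = 2^(2*zoom) in all.
    public static long TileCount(int zoom) => 1L << (2 * zoom);

    // Bytes for one square tile stored uncompressed as RGBA32 (4 bytes per pixel).
    public static long TileBytes(int tileSize) => (long)tileSize * tileSize * 4;

    // Bytes needed to keep every tile of a zoom level resident at once.
    public static long LevelBytes(int zoom, int tileSize) => TileCount(zoom) * TileBytes(tileSize);
}
```

With these numbers, `TileCount(10)` is 1,048,576 and `TileBytes(512)` is 1,048,576 bytes (1 MiB), so `LevelBytes(10, 512)` is 2^40 bytes, about 1 TiB, which is why only tiles near the view can stay loaded.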

Question

Using just the TMS coordinates of my tile (0,0 - 63,63), how can I calculate the tile's xyz "earth" position as well as its xyz rotation?
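One way to approach this, sketched in plain C# with `System.Numerics` so it runs outside Unity (in a Unity script the same math works with `Mathf`, `Vector3` and `Quaternion`): compute the latitude and longitude of the tile's centre, turn them into a point on the sphere, and build the rotation from two axis-angle turns instead of juggling Euler angles. The conventions are assumptions, not taken from the question: tiles are treated as equirectangular (x spans 360° of longitude and y spans 180° of latitude linearly), row 0 is the southernmost row, the frame is y-up like Unity's with longitude 0 on +Z, and the tile mesh is assumed to face its local +Z with its north edge along local +Y. `TilePlacement` and `Place` are hypothetical names.

```csharp
using System;
using System.Numerics;

public static class TilePlacement
{
    // Assumed conventions (hypothetical, adjust to your project):
    //  - equirectangular tiles, row 0 = southernmost row;
    //  - y-up frame, longitude 0 on +Z, increasing toward +X;
    //  - tile mesh faces local +Z, north edge along local +Y.
    public static (Vector3 position, Quaternion rotation) Place(
        int x, int y, int tilesX, int tilesY, float radius)
    {
        // Angles of the tile's centre (hence the + 0.5f), in radians.
        float lon = (x + 0.5f) / tilesX * 2f * MathF.PI - MathF.PI;   // -π..π
        float lat = (y + 0.5f) / tilesY * MathF.PI - MathF.PI / 2f;   // -π/2..π/2

        Vector3 position = radius * new Vector3(
            MathF.Cos(lat) * MathF.Sin(lon),
            MathF.Sin(lat),
            MathF.Cos(lat) * MathF.Cos(lon));

        // Tilt the tile up to its latitude (about X), then swing it round to
        // its longitude (about Y). With System.Numerics quaternions, the
        // right-hand operand of * is the rotation applied first.
        Quaternion tilt = Quaternion.CreateFromAxisAngle(Vector3.UnitX, -lat);
        Quaternion swing = Quaternion.CreateFromAxisAngle(Vector3.UnitY, lon);
        return (position, swing * tilt);
    }
}
```

In Unity, the same orientation should also be obtainable with `Quaternion.LookRotation(normal, north)`, where `normal` is the normalized position and `north` is the surface direction toward the north pole. If tiles come out mirrored or sideways, try negating `lon` or changing which local axis the mesh is assumed to face.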

Extra

- In the attached screenshot I'm at zoom level 4 (64 tiles).

- On the y axis, row 0 is the bottom of the globe while row 15 is the top.

- So far I'm mostly using `Mathf.Sin` and `Mathf.Cos` to figure out position and rotation.
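Because row 0 is the bottom and row 15 the top here, the rows follow TMS ordering (origin at the south), whereas many web tile servers use XYZ / "slippy map" ordering with row 0 at the top, so it can help to have the flip written down. The sketch below is plain C#; `TmsRows` and its methods are hypothetical names. It assumes a square pyramid with 2^zoom tiles per axis, and the latitude helper additionally assumes Web Mercator (global-mercator) imagery, which the question does not state. Under that projection rows are not evenly spaced in latitude, so mapping rows linearly to latitude would place imagery at the wrong latitudes away from the equator.

```csharp
using System;

public static class TmsRows
{
    // Square pyramid: 2^zoom rows (and columns) per zoom level.
    public static int TilesPerAxis(int zoom) => 1 << zoom;

    // TMS numbers rows from the bottom (south); XYZ schemes number from the top.
    // The flip is its own inverse, so it converts in either direction.
    public static int FlipRow(int row, int zoom) => TilesPerAxis(zoom) - 1 - row;

    // Latitude in degrees of the north edge of TMS row `row`, ASSUMING Web Mercator
    // tiles. Applies the inverse-Mercator formula lat = atan(sinh(pi * (1 - 2y/n)))
    // to the top-origin (XYZ) row index y.
    public static double NorthEdgeLatitude(int row, int zoom)
    {
        int n = TilesPerAxis(zoom);
        int yXyz = FlipRow(row, zoom);
        double rad = Math.Atan(Math.Sinh(Math.PI * (1.0 - 2.0 * yXyz / n)));
        return rad * 180.0 / Math.PI;
    }
}
```

At zoom 4 this gives 16 rows, row 15 flips to XYZ row 0, and its north edge sits at about 85.05°, the edge of Web Mercator coverage.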

**EDIT**

I've figured out how to get the tile positions right. Now I'm stuck on getting the tiles' rotation right.

The code that helped me most came from a question about generating a sphere in Python.

I modified the code to look like this:

```csharp
// convenience helpers @jkr
float ti = tilesInfo["tilesXY"]; // basically the number of tiles across either axis @jkr
float ti2 = ti / 2;
float pi = Mathf.PI;
float pi2 = pi / 2;
float pipi = pi * 2;

// position for 3d tiles @jkr
// (assumed: keyX/keyY are the tile's TMS x/y, ER is the globe's radius)
float phi = keyY / ti * pi;       // polar angle in radians, measured from +Z
float theta = keyX / ti * pipi;   // azimuth in radians, around Z
x = Mathf.Sin(phi) * Mathf.Cos(theta) * ER;
y = Mathf.Sin(phi) * Mathf.Sin(theta) * ER;
z = Mathf.Cos(phi) * ER;
```
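The snippet computes one point per tile from its integer `keyX`/`keyY`, i.e. a corner rather than the centre. A possible alternative to solving for a rotation at all (not what the question's code does) is to evaluate the same formula at all four corners and build each tile's quad from those vertices, so every corner already lies on the sphere and neighbouring tiles share edges. Below is a plain C# sketch with `System.Numerics`; `TileCorners`, `SpherePoint` and `Corners` are hypothetical names, `ti` and `er` play the roles of `ti` and `ER` in the snippet (ER assumed to be the globe's radius), and the snippet's z-as-polar-axis convention is kept, so the poles lie on ±Z rather than on Unity's up axis.

```csharp
using System;
using System.Numerics;

public static class TileCorners
{
    // Same mapping as the question's snippet: phi is the polar angle from +Z,
    // theta the azimuth. Fractional keys address points inside a tile.
    public static Vector3 SpherePoint(float keyX, float keyY, float ti, float er)
    {
        float phi = keyY / ti * MathF.PI;
        float theta = keyX / ti * 2f * MathF.PI;
        return new Vector3(
            MathF.Sin(phi) * MathF.Cos(theta) * er,
            MathF.Sin(phi) * MathF.Sin(theta) * er,
            MathF.Cos(phi) * er);
    }

    // The four corners of tile (keyX, keyY), in order around the tile.
    // All four lie on the sphere, so a quad built from them needs no extra rotation.
    public static Vector3[] Corners(int keyX, int keyY, float ti, float er) => new[]
    {
        SpherePoint(keyX,     keyY,     ti, er),
        SpherePoint(keyX + 1, keyY,     ti, er),
        SpherePoint(keyX + 1, keyY + 1, ti, er),
        SpherePoint(keyX,     keyY + 1, ti, er),
    };
}
```

Because adjacent tiles evaluate the shared corner with identical inputs, their edges line up exactly; each flat quad is still a chord of the sphere, so larger tiles may need subdividing to look round.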

**EDIT 2**

After adding @Ruzihm's answer to compute normals.
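For a globe centred at the origin of its own local space, the outward normal at any surface point is simply the normalized position vector, which makes a quick sanity check for computed normals. The same normal also gives a cheap horizon test: a surface point can only be visible if the camera lies on the outer side of that point's tangent plane. This is one possible complement to the camera-angle tile selection described earlier, not the answer's method; `GlobeNormals` and its methods are hypothetical names, sketched with `System.Numerics`.

```csharp
using System.Numerics;

public static class GlobeNormals
{
    // Assumes the sphere is centred at the origin: the outward normal at a
    // surface point is that point's direction from the centre.
    public static Vector3 Normal(Vector3 surfacePoint) => Vector3.Normalize(surfacePoint);

    // Horizon test: true when the camera is on the outer side of the point's
    // tangent plane, i.e. the point is on the camera-facing side of the globe.
    public static bool FacesCamera(Vector3 surfacePoint, Vector3 cameraPosition) =>
        Vector3.Dot(Normal(surfacePoint), cameraPosition - surfacePoint) > 0f;
}
```

Both positions must be in the same space (for example the globe's local space) for the test to be meaningful.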

**EDIT 3**

After adding @Ruzihm's shader, I went on to make a number of tweaks to get things better situated. There's still a ways to go, but at least this is big progress.