We made an architecture change, and each streaming message is now duplicated 5 times on average. Five times as many messages is a lot, and it is impacting performance.

- Before, we read directly from the Capture blob storage of a third-party event hub (that event hub had 32 partitions).

- Now we have an Azure Function connected to the third-party event hub. The function pushes each message to our own event hub, and we use Capture on our event hub. Our event hub has only 3 partitions (we followed the Microsoft recommendation for the number of partitions).

I am aware that duplicates in Event Hubs have been discussed extensively (see the links below), but I am still puzzled by the number of duplicates I am getting. Is it expected that each message is duplicated 5 times on average?

Our event hub has 1 throughput unit, with auto-inflate up to 3. The partition count is 3.

The function code is below:

    using Microsoft.Azure.EventHubs;
    using Microsoft.Azure.WebJobs;
    using SendGrid.Helpers.Mail;
    using System.Threading.Tasks;
    using Microsoft.Extensions.Logging;

    namespace INGESTION
    {
        public static class InvoiceMasterData
        {
            [FunctionName("InvoiceMasterData")]
            public static async Task Run(
                [EventHubTrigger("InvoiceMasterData", Connection = "SAP_InvoiceMasterData")] EventData[] events,
                [EventHub("InvoiceMasterData", Connection = "Azure_InvoiceMasterData")] IAsyncCollector<EventData> outputEvents,
                [SendGrid(ApiKey = "AzureSendGridKey")] IAsyncCollector<SendGridMessage> messageCollector,
                ILogger log)
            {
                var genericFunctionStopper = new GenericFunctionStopper();
                await genericFunctionStopper.Loaddata(outputEvents, "InvoiceMasterData", messageCollector, log, events);
            }
        }
    }

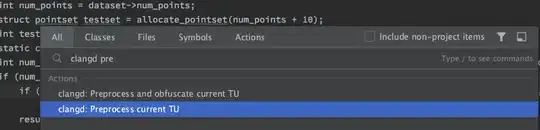

Sharing the event hub metrics below :

We also observe a second unexpected behaviour that I would like to share (maybe I should ask this in a separate question).

Before, with the old architecture, we never saw the same EnqueuedTimeUtc twice for a given invoice primary key. Now, with the new architecture using the function, it happens all the time. This is a problem because we were using EnqueuedTimeUtc to deduplicate. Is it because we are somehow processing the messages in batches? Is it because we have fewer partitions?
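To make the deduplication problem concrete, here is a minimal sketch of deduplicating on something other than EnqueuedTimeUtc. It assumes each captured row carries the partition id and sequence number the event had on the third-party event hub; nothing in the function above shows that these values are forwarded, so this is an assumption. The `CapturedInvoice` type, its field names, and `DropResentCopies` are hypothetical and exist only for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shape of one captured row. All field names are assumptions.
public sealed record CapturedInvoice(
    string InvoiceId,           // invoice primary key
    int SourcePartition,        // partition id on the third-party event hub (assumed to be forwarded)
    long SourceSequenceNumber,  // sequence number on the third-party event hub (assumed to be forwarded)
    DateTime EnqueuedTimeUtc,   // enqueue time on our event hub; not used as a key here
    string Payload);

public static class InvoiceDeduplicator
{
    // Keeps the first row seen for each (source partition, source sequence number)
    // pair, so a source event that was forwarded more than once yields one row.
    public static List<CapturedInvoice> DropResentCopies(IEnumerable<CapturedInvoice> rows)
    {
        return rows
            .GroupBy(r => (r.SourcePartition, r.SourceSequenceNumber))
            .Select(g => g.First())
            .ToList();
    }
}
```

The key is taken from the source event rather than from our event hub because it stays the same however many times the function forwards that event, whereas EnqueuedTimeUtc on our event hub can be shared by different events.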

Any suggestions, observations, or expertise would be much appreciated!

- https://learn.microsoft.com/en-us/azure/azure-functions/functions-reliable-event-processing

- Azure functions with Event Hub trigger writes duplicate messages

- Azure Functions Event Hub trigger bindings

- https://github.com/Azure/azure-event-hubs-dotnet/issues/358