I am working with perspective transformations using OpenCV in Python. I have found the homography matrix between two sets of points using the SIFT detector. Now I would like to transform an image using the cv2.warpPerspective function, but the quality of the warped image turns out to be very low.

I searched on Google and found this question: Image loses quality with cv2.warpPerspective. I tried the method from the accepted answer, which is to use different interpolation flags with cv2.warpPerspective, but it didn't help. Please suggest what I can do to overcome this issue. Thanks in advance.

Code:

    import cv2
    import numpy as np
    import matplotlib.pyplot as plt
    import time
    import copy


    def main():
        picture = cv2.imread("src/paper.png")
        cap = cv2.VideoCapture("src/video0.mp4")
        query = cv2.imread("src/qr.png")

        while True:
            ret, frame = cap.read()
            if not ret:  # stop when the video ends or a frame cannot be read
                break
            h, w = frame.shape[:2]
            rgb = cv2.cvtColor(query, cv2.COLOR_BGR2RGB)
            # resize the picture to the size of the query image
            paper = cv2.resize(picture, (query.shape[1], query.shape[0]), interpolation=cv2.INTER_AREA)

            # declare sift object detector
            sift = cv2.SIFT_create()

            # point and object detection
            query_kp, query_des = sift.detectAndCompute(rgb, None)
            train_kp, train_des = sift.detectAndCompute(frame.astype(np.uint8), None)

            match_points = []
            bf = cv2.BFMatcher(cv2.NORM_L2, crossCheck=False)
            match = bf.knnMatch(query_des, train_des, k=2)

            # ratio test
            ratio = 0.8
            for ptn1, ptn2 in match:
                if ptn1.distance < ratio * ptn2.distance:
                    match_points.append(ptn1)

            # at least 4 points for projection
            if len(match_points) >= 4:
                set_point1 = np.float32([query_kp[ptn.queryIdx].pt for ptn in match_points]).reshape((-1, 1, 2))
                set_point2 = np.float32([train_kp[ptn.trainIdx].pt for ptn in match_points]).reshape((-1, 1, 2))
                frame = projection(frame, paper, set_point1, set_point2)

            cv2.imshow("frame", frame)
            cv2.waitKey(1)


    def projection(frame, picture, set_point1, set_point2):
        h, w = frame.shape[:2]
        # homography from query image coordinates to frame coordinates
        homography_matrix, _ = cv2.findHomography(set_point1, set_point2, cv2.RANSAC, 5)
        warpedImg = cv2.warpPerspective(picture, homography_matrix, (w, h), flags=cv2.WARP_FILL_OUTLIERS)

        # overlay the warpedImg to original frame
        x, y, z = np.where(warpedImg != [0, 0, 0])
        frame[x, y, z] = warpedImg[x, y, z]
        return frame


    main()

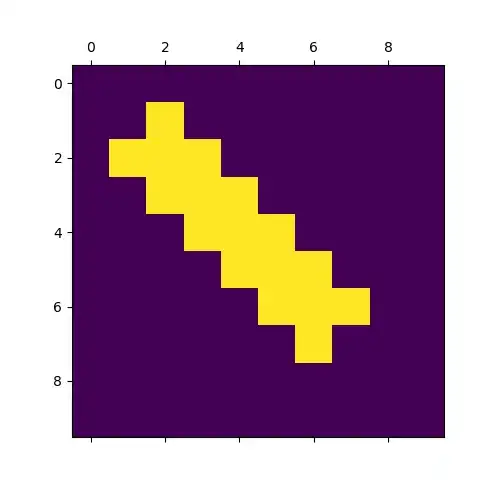

Example output: the warped image is the one under the profile picture section. I understand that it looks small because of the aspect ratio, but the quality is just bad and the words in the warped image are all distorted.