Consider a DataFrame of insect species, where the species is given in column `class`. I would like to drop rows from the classes that exceed a certain threshold, so that they are balanced against the classes that do not have many samples.

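```python
df_counts = df['class'].value_counts()

# True for the classes whose count exceeds the threshold
class_balance = df_counts.where(df_counts > threshold).notnull()

for idx, item in class_balance.items():
    if item:
        if df_counts[idx] > threshold:
            # Number of excess rows to remove from this class
            n = int(df_counts[idx] - threshold)
            # Randomly pick n rows of this class and drop them by index label
            df_aux = df.drop(df[df['class'] == idx].sample(n=n).index)
            df_counts_b = df_aux['class'].value_counts()
```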

So I iterate only over the classes that exceed this limit (`df_counts.where(df_counts > threshold).notnull()`), and for each of them I would like to update my DataFrame by randomly dropping the excess rows: `n` of them, chosen with `sample(n=n)`.
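
A minimal sketch of the loop described above, assuming `threshold` is an integer and that replacing the original index is acceptable. The helper name `cap_classes` and its `col` and `seed` parameters are hypothetical. It differs from the code above in two ways: the result of each `drop` is reassigned, so the drops accumulate (in the question every iteration starts again from `df`), and the index is reset first, so every label is unique.

```python
import pandas as pd


def cap_classes(df: pd.DataFrame, threshold: int, col: str = "class", seed: int = 0) -> pd.DataFrame:
    """Randomly drop rows so that no value of `col` occurs more than `threshold` times."""
    # Start from a fresh 0..n-1 index: DataFrame.drop removes *every* row
    # carrying a given label, so repeated labels would also hit other classes.
    out = df.reset_index(drop=True)
    counts = out[col].value_counts()
    # Iterate only over the classes above the threshold
    for cls, count in counts[counts > threshold].items():
        n = int(count - threshold)  # excess rows for this class
        to_drop = out[out[col] == cls].sample(n=n, random_state=seed).index
        # Reassign, so that the drops accumulate across iterations
        out = out.drop(to_drop)
    return out


# Hypothetical toy data: 5 x 'a', 3 x 'b', 1 x 'c'
df = pd.DataFrame({"class": ["a"] * 5 + ["b"] * 3 + ["c"]})
balanced = cap_classes(df, threshold=2)
print(balanced["class"].value_counts().sort_index().to_dict())  # {'a': 2, 'b': 2, 'c': 1}
```

The returned frame no longer carries the original index labels. A loop-free variant with the same effect is `df.sample(frac=1, random_state=0).groupby('class').head(threshold)`, which shuffles the rows and then keeps at most `threshold` rows per class without dropping anything by label.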

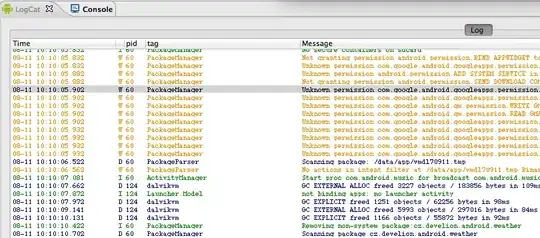

But seems it does not work in this way, like recommeded here. Note the difference between df_counts before the drop, and after first iteration:

It seems the index has been messed up: rows from other classes have been deleted as well. Deleting rows conditionally should be simple, but this behaves strangely. Any clue?
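
One possible explanation, which cannot be confirmed from the question alone, is that `df` has repeated index labels (for example after `pd.concat` without `ignore_index=True`). `DataFrame.drop` works on labels, so dropping a label removes every row that carries it, whichever class that row belongs to. A small reproduction on hypothetical data:

```python
import pandas as pd

# Hypothetical data: two frames concatenated without ignore_index=True,
# so each of the labels 0, 1, 2 appears twice in the combined index.
part1 = pd.DataFrame({"class": ["ant", "ant", "ant"]})
part2 = pd.DataFrame({"class": ["bee", "bee", "bee"]})
df = pd.concat([part1, part2])
print(df.index.is_unique)  # False

# Drop one randomly chosen 'ant' row by its index label...
to_drop = df[df["class"] == "ant"].sample(n=1, random_state=0).index
after = df.drop(to_drop)
# ...but the 'bee' row sharing that label is removed as well.
print(after["class"].value_counts().sort_index().to_dict())  # {'ant': 2, 'bee': 2}

# After resetting to a unique index, only the intended row is dropped.
df_unique = df.reset_index(drop=True)
to_drop = df_unique[df_unique["class"] == "ant"].sample(n=1, random_state=0).index
print(df_unique.drop(to_drop)["class"].value_counts().sort_index().to_dict())  # {'ant': 2, 'bee': 3}
```

Checking `df.index.is_unique` on the real data would show whether this applies; if it is `False`, calling `df = df.reset_index(drop=True)` before the loop avoids it.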