I'm doing some feature detection/pattern matching based on the OpenCV example code shown at https://docs.opencv.org/3.4/d5/dde/tutorial_feature_description.html

In my example, input2 is an image with a size of 256x256 pixels, and input1 is a subimage cut out of input2 (e.g. at an offset of 5x5 pixels, with a size of 200x80 pixels).

Everything works fine: OpenCV detects more keypoints and descriptors in input2 than in input1, and after matching the two descriptor sets, "matches" contains exactly as many elements as there are descriptors in descriptors1.
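The equal count is what a brute-force matcher's `match()` call produces by design: with default settings (no cross-check, no mask) it returns the single best candidate for every query descriptor, whatever the image geometry. The sketch below mimics that behaviour in plain Python, assuming an L2 brute-force matcher like the one in the linked tutorial. The 2-D descriptor vectors are made up for illustration (real SURF descriptors are 64- or 128-dimensional), and `brute_force_match` is a hypothetical helper, not an OpenCV function.

```python
import math


def l2(a, b):
    """Euclidean (L2) distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def brute_force_match(descriptors1, descriptors2):
    """Hypothetical helper: one (query_idx, train_idx, distance) per row of descriptors1."""
    matches = []
    for qi, d1 in enumerate(descriptors1):
        # Pick the train descriptor with the smallest L2 distance to this query.
        best_ti, best_dist = min(
            ((ti, l2(d1, d2)) for ti, d2 in enumerate(descriptors2)),
            key=lambda t: t[1],
        )
        matches.append((qi, best_ti, best_dist))
    return matches


# Made-up descriptors: 2 query rows (input1), 3 train rows (input2).
descriptors1 = [[0.0, 1.0], [3.0, 4.0]]
descriptors2 = [[0.0, 0.0], [3.0, 4.0], [10.0, 10.0]]
matches = brute_force_match(descriptors1, descriptors2)
print(len(matches))  # 2, i.e. one match per descriptor in descriptors1
```

So `len(matches) == len(descriptors1)` holds even if some of those matches are wrong.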

So far everything makes sense: the number of matches is exactly the number of keypoints/descriptors expected in the subimage.

My problem: the elements in "matches" all have far too large a distance value! All of them are greater than 5 (my subimage offset), and most are greater than 256 (the total image size)!
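For comparison: in OpenCV, `DMatch::distance` is the distance between the two descriptor vectors, not the pixel displacement between the matched keypoints. A pixel offset has to be computed from the keypoint positions (`KeyPoint::pt`), looked up through `DMatch::queryIdx` and `DMatch::trainIdx`. The sketch below shows that lookup in plain Python. `Match` is a hypothetical stand-in carrying only the `DMatch` fields used here, `pixel_offsets` is a hypothetical helper, and all coordinates and distance values are made up.

```python
from collections import namedtuple

# Hypothetical stand-in for cv::DMatch (only the fields used below).
Match = namedtuple("Match", ["queryIdx", "trainIdx", "distance"])


def pixel_offsets(keypoints1, keypoints2, matches):
    """Hypothetical helper: (dx, dy) from each query keypoint to its matched train keypoint."""
    offsets = []
    for m in matches:
        x1, y1 = keypoints1[m.queryIdx]  # position in input1 (the subimage)
        x2, y2 = keypoints2[m.trainIdx]  # position in input2 (the whole image)
        offsets.append((x2 - x1, y2 - y1))
    return offsets


# Made-up keypoint positions, standing in for kp.pt of each cv::KeyPoint.
keypoints1 = [(10.0, 20.0), (50.0, 30.0)]
keypoints2 = [(15.0, 25.0), (55.0, 35.0), (100.0, 100.0)]
# Made-up descriptor distances: large values say nothing about pixel offsets.
matches = [Match(0, 0, 312.7), Match(1, 1, 287.4)]

print(pixel_offsets(keypoints1, keypoints2, matches))  # [(5.0, 5.0), (5.0, 5.0)]
```

With correct matches, every offset here equals the 5x5 subimage offset, while the `distance` fields stay large.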

What could be the reason for this?

Thanks!

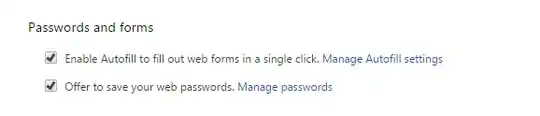

Update: here you find the image(s) I'm working with:

The whole image is my input2 (don't worry about not having it a size of 256x256 pixels, this is taken out of a screenshot which shows more things). The blue, dashed rectangle in the middle shows my input1 and the circles within this rectangle mark the already detected keypoints1.

The behavior of the code appears to differ between OpenCV versions 2.x and 3.x.