I'm working on a script which reads data from a MS Excel workbook and does some plotting etc. The data read from Excel are accelerations measurements in a_x, a_y, and a_z directions and the time in s (separate numpy arrays). Accelerations are first filtered using a 5Hz low-pass filter before being plotted, see Figure 1 for a plot example, which are the permissible acceleration levels.

I need to find the amount of time the limiting curve is exceeded but the plot is related to a_z and abs(a_y) and not time. My attempted solution is to:

- Find the intersection points between the acceleration and the limiting curve.

- Find the data points (

a_zandabs(a_y)) closest to the intersection point. - Get the indices for data points closest to the intersection points.

- Use the found indices for the time array and substract the two to get the amount of time above limiting curves.

The problem is I fail a bullet 3. I've succeeded in finding intersection points between the limiting curve and my filtered data. I've also managed in to find my data points nearest the intersection points however, if I found the closest intersection point for a_z this doesn't match the index I get from abs(a_y).

Intersection points are found by:

f_l1 = LineString(np.column_stack((x, y1)))

s_l1 = LineString(np.column_stack((az3, abs(ay3))))

intersection1 = f_l1.intersection(s_l1)

az3_1,ay3_1 = LineString(intersection1).xy

So I'm using shapely.geometry imported as LineString to find the intersection points between the limiting curves (here shown for limiting curve y1) and the function s_l1(az,abs(a_y)).

To find the my data points closest to the intersection points I used the following approach:

Intersection of two graphs in Python, find the x value

The function I use to get my data point closest to the intersection point is:

def find_nearest_index(array, value):

array = np.asarray(array)

idx = np.abs(abs(array-value)).argmin()

return idx

, where the array is my filtered acceleration array and the value is either the a_z or a_y value of the intersection point.

I suspect the reason for different indices depending on a_z or abs(a_y) is because my data points are "too far" from the actual intersection coordinate, i.e. I might get a a_z coordinate, which value is close to the intersection point but the corresponding

abs(a_y) is way off. Similar issue is present for the abs(a_y) intersection point/data-point correlation. For upcoming measurements, I'll increase the sampling frequency but I doubt this will solve the matter completely.

I've attempted some different approaches without much luck and my latest idea is to try to use both intersection points when locating the nearest data point, so I check if the index I get from my find_nearest_index-function using a_z is the same as the index I get from using find_nearest_index-function for abs(a_y) but I don't know how/if that is possible. But maybe there's an obvious solution to my problem that I just don't see.

A correct solution for the accelerations would like the following, where the index of my data points match the intersection points: Desirable plot between intersection points These indices are then used for calculating the amount of time above the limiting curve by taking Delta_t=t[index2]-t[index1].

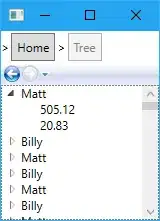

But instead I typically get something like, where the index found by using a_z is different from the index found using a_y) resulting in a wrong plot and therefore also wrong Delta_t:

Typical plot between intersection points