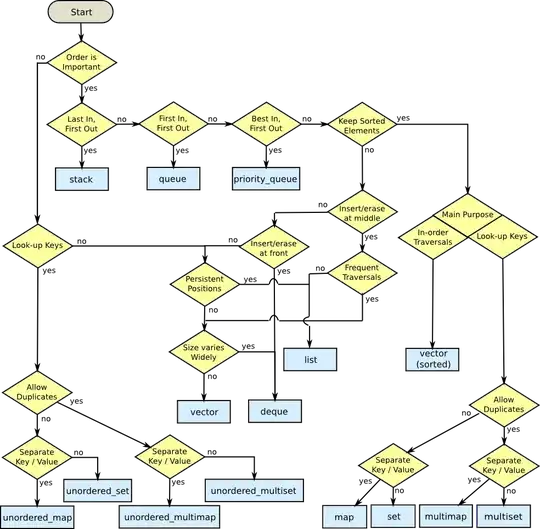

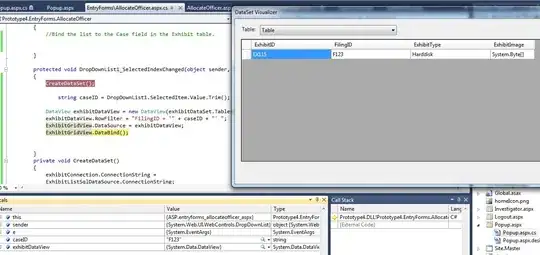

I am trying to place the Pikachu image on the hoarding board using `cv2.warpPerspective`. The edges of the output are not smooth; they show dotted points instead.

    import cv2
    import numpy as np

    # Base image containing the hoarding board
    image = cv2.imread("base_img.jpg")
    h_base, w_base = image.shape[:2]

    # Plain white image, warped below to build a mask of the board area
    white_subject = np.ones((480, 640, 3), dtype="uint8") * 255
    h_white, w_white = white_subject.shape[:2]

    # Image to place on the board
    subject = cv2.imread('subject.jpg')
    h_sub, w_sub = subject.shape[:2]

    # Corners of the board in the base image (top-left, top-right, bottom-right, bottom-left)
    pts2 = np.float32([[109, 186], [455, 67], [480, 248], [90, 349]])

    # Warp the white image onto the board and black out the masked pixels
    pts3 = np.float32([[0, 0], [w_white, 0], [w_white, h_white], [0, h_white]])
    transformation_matrix_white = cv2.getPerspectiveTransform(pts3, pts2)
    mask = cv2.warpPerspective(white_subject, transformation_matrix_white, (w_base, h_base))
    image[mask==255] = 0

    # Warp the subject onto the same four corners
    pts3 = np.float32([[0, 0], [w_sub, 0], [w_sub, h_sub], [0, h_sub]])
    transformation_matrix = cv2.getPerspectiveTransform(pts3, pts2)
    warped_image = cv2.warpPerspective(subject, transformation_matrix, (w_base, h_base))

Hoarding board image

Pikachu Image

Output Image

Pattern Image

How can I get the output without the dotted points along the edges?
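One possible cause, offered as an unconfirmed guess: `cv2.warpPerspective` interpolates by default, so pixels along the border of the warped mask can hold values between 0 and 255, and `mask==255` would skip them. The sketch below reproduces that on a tiny synthetic row using NumPy only. It assumes the final step (not shown above) adds `warped_image` to `image`; the helper `composite`, the pixel values, and the soft-mask blend are illustrative assumptions, not part of the code above.

```python
import numpy as np

def composite(base, warped, mask):
    """Blend `warped` over `base`, treating `mask` (0..255) as soft coverage."""
    alpha = mask.astype(np.float32) / 255.0
    # `warped` already fades towards black at its border (black fill outside
    # the quad), so it is added as-is rather than multiplied by alpha again.
    blended = base.astype(np.float32) * (1.0 - alpha) + warped.astype(np.float32)
    return np.clip(blended, 0, 255).astype(np.uint8)

# One row of three pixels: outside the board, on its edge, inside it.
base = np.full((1, 3, 3), 200, dtype=np.uint8)
mask = np.array([[[0] * 3, [128] * 3, [255] * 3]], dtype=np.uint8)

# Stand-in for the warped subject: a flat colour of 100, faded at the edge.
warped = (mask.astype(np.float32) / 255.0 * 100).astype(np.uint8)

# Hard mask as in the question: the edge pixel (mask value 128) is not
# blacked out, so adding the warped image makes it brighter than its neighbours.
naive = base.copy()
naive[mask == 255] = 0
naive = naive + warped

# Soft mask: the edge pixel ends up between the base and subject colours.
result = composite(base, warped, mask)

print("naive :", naive[0, :, 0])
print("result:", result[0, :, 0])
```

With the real images this would correspond to something like `composite(image, warped_image, mask)`, called on the untouched base image instead of running `image[mask==255] = 0`. The mask and the subject are warped with different matrices, so their borders may not match exactly.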