I am trying to convert a for loop into one of the apply-family functions to optimize my code.

Here is the sample data:

my_data = structure(list(Sector = c("AAA", "BBB", "AAA", "CCC", "AAA",
"BBB", "AAA", "CCC"), Sub_Sector = c("AAA1", "BBB1", "AAA1",
"CCC1", "AAA1", "BBB2", "AAA1", "CCC2"), count = c(1L, 1L, 1L,
1L, 1L, 1L, 1L, 1L), type = c("Actual", "Actual", "Actual", "Actual",
"Actual", "Actual", "Actual", "Actual")), class = "data.frame", row.names = c(NA,
-8L))

Here is the current function, which uses for loops and gives the expected output:

expand_collapse_compliance <- function(right_table){
  sector_list <- unique(right_table$Sector)
  df = data.frame("Sector1"=c(""),"Sector"=c(""),"Sub_Sector"=c(""),"Actual"=c(""))
  for(s in sector_list){
    df1 = right_table[right_table$Sector==s,]
    sector1 = df1$Sector[1]
    Sector = df1$Sector[1]
    Sub_Sector = ""
    actual = as.character(nrow(df1))
    mainrow = c(sector1,Sector,Sub_Sector,actual)
    df = rbind(df,mainrow)
    Sub_Sector_list <- unique(df1$Sub_Sector)
    for(i in Sub_Sector_list){
      df2 = right_table[right_table$Sub_Sector==i,]
      sector1 = df1$Sector[1]
      Sector = ""
      Sub_Sector = df2$Sub_Sector[1]
      actual = nrow(df2)
      subrow = c(sector1,Sector,Sub_Sector,actual)
      df = rbind(df,subrow)
    }
  }
  df = df[2:nrow(df),]
  df$Actual = as.numeric(df$Actual)
  df_total = nrow(right_table)
  df = rbind(df,c("","Total","",df_total))
  return(df)
}

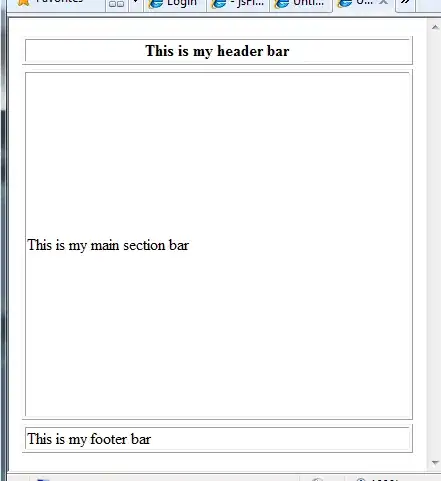

DT::datatable(expand_collapse_compliance(my_data),
              rownames = FALSE, escape = FALSE,
              selection = list(mode = "single", target = "row"),
              options = list(pageLength = 50, scrollX = TRUE,
                             dom = 'tp', ordering = FALSE,
                             columnDefs = list(list(visible = FALSE, targets = 0),
                                               list(className = 'dt-left', targets = '_all'))),
              class = 'hover cell-border stripe')

I first tried converting the inner loop to lapply, but with that change the Sub_Sector values no longer show up in the output table. How can I fix this? Any ideas would be appreciated.

expand_collapse_compliance <- function(right_table){
  sector_list <- unique(right_table$Sector)
  df = data.frame("Sector1"=c(""),"Sector"=c(""),"Sub_Sector"=c(""),"Actual"=c(""))
  for(s in sector_list){
    df1 = right_table[right_table$Sector==s,]
    sector1 = df1$Sector[1]
    Sector = df1$Sector[1]
    Sub_Sector = ""
    actual = as.character(nrow(df1))
    mainrow = c(sector1,Sector,Sub_Sector,actual)
    df = rbind(df,mainrow)
    Sub_Sector_list <- unique(df1$Sub_Sector)
    #for(i in Sub_Sector_list){
    lapply(Sub_Sector_list, function(x){
      df2 = right_table[right_table$Sub_Sector==Sub_Sector_list,]
      sector1 = df1$Sector[1]
      Sector = ""
      Sub_Sector = df2$Sub_Sector[1]
      actual = nrow(df2)
      subrow = c(sector1,Sector,Sub_Sector,actual)
      df = rbind(df,subrow)
    })
  }
  df = df[2:nrow(df),]
  df$Actual = as.numeric(df$Actual)
  df_total = nrow(right_table)
  df = rbind(df,c("","Total","",df_total))
  return(df)
}
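
Two things in the lapply attempt above seem likely to explain the missing rows. First, `df = rbind(df,subrow)` inside the anonymous function only changes a copy local to that function, and the list that lapply returns is discarded, so the outer `df` never receives the sub-sector rows. Second, the filter compares against the whole `Sub_Sector_list` rather than the current element `x`. A minimal sketch of one way to restructure this, assuming each call should return its rows and the pieces get bound together once at the end, is below. The function name `expand_collapse_lapply` is hypothetical, and unlike the original the `Actual` column stays an integer throughout.

```r
# Sample data from the question, rebuilt with data.frame() for readability.
my_data <- data.frame(
  Sector     = c("AAA", "BBB", "AAA", "CCC", "AAA", "BBB", "AAA", "CCC"),
  Sub_Sector = c("AAA1", "BBB1", "AAA1", "CCC1", "AAA1", "BBB2", "AAA1", "CCC2"),
  count      = 1L,
  type       = "Actual",
  stringsAsFactors = FALSE
)

# Hypothetical name: a sketch, not a drop-in replacement for the original.
expand_collapse_lapply <- function(right_table) {
  # Each lapply call RETURNS a small data frame for one sector
  # instead of trying to modify an outer variable.
  blocks <- lapply(unique(right_table$Sector), function(s) {
    df1  <- right_table[right_table$Sector == s, ]
    subs <- unique(df1$Sub_Sector)
    # Count rows per sub-sector (over the whole table, as in the original loop).
    sub_counts <- vapply(subs,
                         function(i) sum(right_table$Sub_Sector == i),
                         integer(1))
    data.frame(
      Sector1    = s,                             # recycled to every row
      Sector     = c(s, rep("", length(subs))),   # filled only on the main row
      Sub_Sector = c("", subs),                   # blank on the main row
      Actual     = c(nrow(df1), unname(sub_counts)),
      stringsAsFactors = FALSE
    )
  })

  # Bind all sector blocks once, then append the total row.
  out <- do.call(rbind, blocks)
  total <- data.frame(Sector1 = "", Sector = "Total", Sub_Sector = "",
                      Actual = nrow(right_table), stringsAsFactors = FALSE)
  out <- rbind(out, total)
  rownames(out) <- NULL
  out
}

result <- expand_collapse_lapply(my_data)
print(result)
```

Binding once with `do.call(rbind, ...)` also avoids growing `df` row by row inside the loop, which is usually where most of the time goes in this pattern.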