I am currently trying to move my Calico-based clusters to the new GKE Dataplane V2, which is essentially a managed Cilium offering. For local testing, I am running k3d with open-source Cilium installed, and I created a set of NetworkPolicies (native Kubernetes ones, not CiliumNetworkPolicies) that lock down the desired namespaces.

My current issue is that when I port the same policies to a GKE cluster (with Dataplane V2 enabled), they don't work.

As an example, let's look at the connection between an app and a database:

    ---
    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: db-server.db-client
      namespace: BAR
    spec:
      podSelector:
        matchLabels:
          policy.ory.sh/db: server
      policyTypes:
      - Ingress
      ingress:
      - ports: []
        from:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: FOO
          podSelector:
            matchLabels:
              policy.ory.sh/db: client
    ---
    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: db-client.db-server
      namespace: FOO
    spec:
      podSelector:
        matchLabels:
          policy.ory.sh/db: client
      policyTypes:
      - Egress
      egress:
      - ports:
        - port: 26257
          protocol: TCP
        to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: BAR
          podSelector:
            matchLabels:
              policy.ory.sh/db: server
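To reason about whether these two policies should admit the client-to-DB flow, here is a minimal Python sketch of how a single `from`/`to` peer that sets both `namespaceSelector` and `podSelector` is evaluated: both selectors must match (a logical AND), and the namespace selector is matched against the namespace's labels, here only the `kubernetes.io/metadata.name` label that Kubernetes adds automatically. This is a simplified model, not any CNI's implementation: it handles only `matchLabels` (no `matchExpressions` or `ipBlock`), and the `Pod`/`Peer` classes, the extra pods and the `BAZ` namespace are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Pod:
    name: str
    namespace: str
    labels: Dict[str, str]


@dataclass
class Peer:
    """One entry of a NetworkPolicy `from`/`to` list (simplified: matchLabels only)."""
    namespace_labels: Optional[Dict[str, str]] = None  # namespaceSelector.matchLabels
    pod_labels: Optional[Dict[str, str]] = None        # podSelector.matchLabels


def selector_matches(match_labels: Dict[str, str], labels: Dict[str, str]) -> bool:
    # matchLabels semantics: every key/value pair must be present on the object.
    return all(labels.get(key) == value for key, value in match_labels.items())


def peer_matches(peer: Peer, pod: Pod, policy_namespace: str) -> bool:
    # Assumption: the namespace only carries the automatic kubernetes.io/metadata.name label.
    namespace_labels = {"kubernetes.io/metadata.name": pod.namespace}
    if peer.namespace_labels is None:
        # Without a namespaceSelector, the podSelector only looks in the policy's namespace.
        if pod.namespace != policy_namespace:
            return False
    elif not selector_matches(peer.namespace_labels, namespace_labels):
        return False
    # Both selectors in the same peer entry must match (logical AND).
    if peer.pod_labels is not None and not selector_matches(peer.pod_labels, pod.labels):
        return False
    return True


# Ingress peer of db-server.db-client (the policy lives in namespace BAR).
INGRESS_PEER = Peer(
    namespace_labels={"kubernetes.io/metadata.name": "FOO"},
    pod_labels={"policy.ory.sh/db": "client"},
)
# Hypothetical pods: their labels are assumed, not read from a cluster.
CLIENT = Pod("backoffice-automigrate-hwmhv", "FOO", {"policy.ory.sh/db": "client"})
UNLABELED = Pod("some-other-pod", "FOO", {})
OTHER_NS = Pod("client-elsewhere", "BAZ", {"policy.ory.sh/db": "client"})

for pod in (CLIENT, UNLABELED, OTHER_NS):
    print(pod.name, peer_matches(INGRESS_PEER, pod, "BAR"))
```

Under this model only the labeled client pod in `FOO` matches, which is the behavior the policies were written for.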

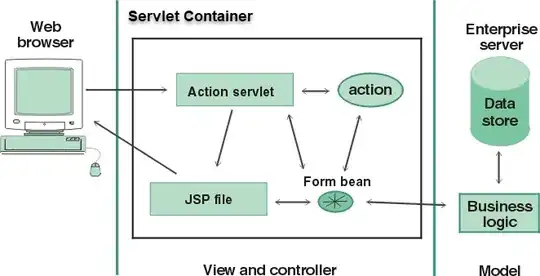

Moreover, using GCP monitoring tools we can see the expected and actual effect the policies have on connectivity:

And here are the logs from the application trying to connect to the DB and getting denied:

    {
      "insertId": "FOO",
      "jsonPayload": {
        "count": 3,
        "connection": {
          "dest_port": 26257,
          "src_port": 44506,
          "dest_ip": "172.19.0.19",
          "src_ip": "172.19.1.85",
          "protocol": "tcp",
          "direction": "egress"
        },
        "disposition": "deny",
        "node_name": "FOO",
        "src": {
          "pod_name": "backoffice-automigrate-hwmhv",
          "workload_kind": "Job",
          "pod_namespace": "FOO",
          "namespace": "FOO",
          "workload_name": "backoffice-automigrate"
        },
        "dest": {
          "namespace": "FOO",
          "pod_namespace": "FOO",
          "pod_name": "cockroachdb-0"
        }
      },
      "resource": {
        "type": "k8s_node",
        "labels": {
          "project_id": "FOO",
          "node_name": "FOO",
          "location": "FOO",
          "cluster_name": "FOO"
        }
      },
      "timestamp": "FOO",
      "logName": "projects/FOO/logs/policy-action",
      "receiveTimestamp": "FOO"
    }
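When sifting through many of these entries, a small Python sketch can turn each `policy-action` record into a one-line flow summary. It reads only the fields visible in the entry above (`jsonPayload.connection`, `src`, `dest`, `disposition`, `count`) and falls back to the raw IP when no pod name was resolved, which is the case worth spotting here. The function names are hypothetical, and how the entries are exported from Cloud Logging is left open.

```python
import json
from typing import Any, Dict


def _endpoint(side: Dict[str, Any], ip: str, port: int) -> str:
    # Prefer namespace/pod when the log resolved one, otherwise show the raw IP.
    if "pod_name" in side:
        return f"{side.get('pod_namespace', '?')}/{side['pod_name']}:{port}"
    return f"{ip}:{port}"


def summarize_policy_action(entry: Dict[str, Any]) -> str:
    """Render one `policy-action` log entry as a one-line flow summary."""
    payload = entry["jsonPayload"]
    conn = payload["connection"]
    src = _endpoint(payload.get("src", {}), conn["src_ip"], conn["src_port"])
    dest = _endpoint(payload.get("dest", {}), conn["dest_ip"], conn["dest_port"])
    return (
        f"{payload['disposition'].upper()} {conn['direction']} {conn['protocol']} "
        f"{src} -> {dest} (count={payload.get('count', 1)})"
    )


# Trimmed copy of the entry above (only the fields the summary reads).
SAMPLE = """
{
  "jsonPayload": {
    "count": 3,
    "connection": {"dest_port": 26257, "src_port": 44506, "dest_ip": "172.19.0.19",
                   "src_ip": "172.19.1.85", "protocol": "tcp", "direction": "egress"},
    "disposition": "deny",
    "src": {"pod_name": "backoffice-automigrate-hwmhv", "pod_namespace": "FOO"},
    "dest": {"pod_namespace": "FOO", "pod_name": "cockroachdb-0"}
  }
}
"""

# Prints: DENY egress tcp FOO/backoffice-automigrate-hwmhv:44506 -> FOO/cockroachdb-0:26257 (count=3)
print(summarize_policy_action(json.loads(SAMPLE)))
```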

EDIT:

My local env is a k3d cluster created via:

    k3d cluster create --image ${K3SIMAGE} --registry-use k3d-localhost -p "9090:30080@server:0" \
      -p "9091:30443@server:0" foobar \
      --k3s-arg=--kube-apiserver-arg="enable-admission-plugins=PodSecurityPolicy,NodeRestriction,ServiceAccount@server:0" \
      --k3s-arg="--disable=traefik@server:0" \
      --k3s-arg="--disable-network-policy@server:0" \
      --k3s-arg="--flannel-backend=none@server:0" \
      --k3s-arg=feature-gates="NamespaceDefaultLabelName=true@server:0"
    docker exec k3d-server-0 sh -c "mount bpffs /sys/fs/bpf -t bpf && mount --make-shared /sys/fs/bpf"
    kubectl taint nodes k3d-ory-cloud-server-0 node.cilium.io/agent-not-ready=true:NoSchedule --overwrite=true
    skaffold run --cache-artifacts=true -p cilium --skip-tests=true --status-check=false
    docker exec k3d-server-0 sh -c "mount --make-shared /run/cilium/cgroupv2"

Cilium itself is installed by Skaffold via Helm, with the following parameters:

    name: cilium
    remoteChart: cilium/cilium
    namespace: kube-system
    version: 1.11.0
    upgradeOnChange: true
    wait: false
    setValues:
      externalIPs.enabled: true
      nodePort.enabled: true
      hostPort.enabled: true
      hubble.relay.enabled: true
      hubble.ui.enabled: true
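To reproduce this install without Skaffold, it helps to see what the dotted `setValues` keys mean. As far as I know, Skaffold passes them to Helm as `--set key=value` pairs, and Helm treats each dot as a level of nesting in the chart's values, so `hubble.relay.enabled: true` sets `enabled: true` under `hubble.relay`. The Python sketch below is a simplified illustration of that expansion (it ignores Helm's escaping, list-index syntax and type coercion) and also renders the keys as `--set` flags, which could be appended to something like `helm upgrade --install cilium cilium/cilium --namespace kube-system --version 1.11.0`. The helper names are hypothetical.

```python
from typing import Any, Dict, List


def expand_dotted(set_values: Dict[str, Any]) -> Dict[str, Any]:
    """Expand dotted keys (as used by `--set`) into nested values.

    Simplified: no escaped dots, no list indices, no type coercion.
    """
    result: Dict[str, Any] = {}
    for dotted_key, value in set_values.items():
        *parents, leaf = dotted_key.split(".")
        node = result
        for key in parents:
            # Walk (or create) one nesting level per dot-separated segment.
            node = node.setdefault(key, {})
        node[leaf] = value
    return result


def helm_set_flags(set_values: Dict[str, Any]) -> List[str]:
    """Render the same keys as `--set key=value` command-line arguments."""
    flags: List[str] = []
    for key, value in set_values.items():
        # Python's True/False become Helm's true/false.
        rendered = str(value).lower() if isinstance(value, bool) else str(value)
        flags += ["--set", f"{key}={rendered}"]
    return flags


# The setValues from the Skaffold release above.
CILIUM_SET_VALUES = {
    "externalIPs.enabled": True,
    "nodePort.enabled": True,
    "hostPort.enabled": True,
    "hubble.relay.enabled": True,
    "hubble.ui.enabled": True,
}

print(expand_dotted(CILIUM_SET_VALUES)["hubble"])
print(" ".join(helm_set_flags(CILIUM_SET_VALUES)))
```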

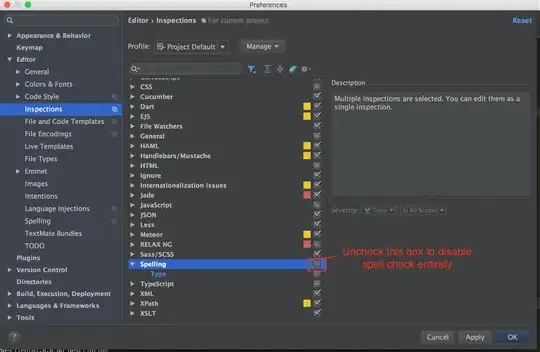

UPDATE: I have setup a third environment: a GKE cluster using the old calico CNI (Legacy dataplane) and installed cilium manually as shown here. Cilium is working fine, even hubble is working out of the box (unlike with the dataplane v2...) and I found something interesting. The rules behave the same as with the GKE managed cilium, but with hubble working I was able to see this:

For some reason, Cilium/Hubble cannot identify the DB pod and resolve its labels. And since the labels aren't recognized, the policies that rely on them don't work either.

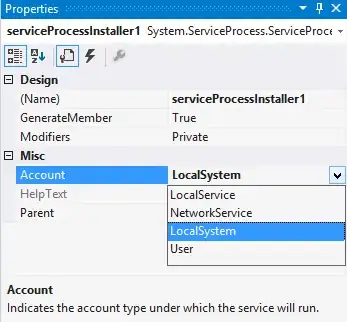

Another proof of this would be the trace log from hubble:

Here the destination app is identified only by its IP, not by its labels.
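This symptom is consistent with how label-based enforcement works in Cilium in general: the destination IP is first resolved to a security identity (a set of labels, with the pod's namespace exposed as the `io.kubernetes.pod.namespace` label), and the policy's selectors are evaluated against those labels. If the IP cannot be resolved, the peer ends up without any Kubernetes labels (represented here by Cilium's `reserved:world` identity), so a selector-based allow rule cannot match and the egress default deny applies. The Python sketch below is a deliberately simplified model of that lookup, not Cilium's implementation; the function names, the dict standing in for the IP-to-identity mapping, and the label sets are hypothetical.

```python
from typing import Dict

# Stand-in for Cilium's reserved "world" identity: an IP that could not be mapped
# to any known endpoint carries no Kubernetes labels.
WORLD: Dict[str, str] = {"reserved:world": ""}


def identity_labels(ip: str, ip_to_labels: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    # Hypothetical lookup table standing in for the IP -> identity mapping.
    return ip_to_labels.get(ip, WORLD)


def egress_allowed(
    dest_ip: str,
    dest_port: int,
    ip_to_labels: Dict[str, Dict[str, str]],
    allowed_labels: Dict[str, str],
    allowed_port: int,
) -> bool:
    # Selectors are evaluated against the identity's labels, never against the IP.
    labels = identity_labels(dest_ip, ip_to_labels)
    selector_ok = all(labels.get(k) == v for k, v in allowed_labels.items())
    return selector_ok and dest_port == allowed_port


DB_IP = "172.19.0.19"  # dest_ip from the deny log
# Simplified view of what db-client.db-server allows: the DB server pod in BAR on 26257.
ALLOWED = {"io.kubernetes.pod.namespace": "BAR", "policy.ory.sh/db": "server"}

resolved = {DB_IP: {"io.kubernetes.pod.namespace": "BAR", "policy.ory.sh/db": "server"}}
unresolved: Dict[str, Dict[str, str]] = {}

print(egress_allowed(DB_IP, 26257, resolved, ALLOWED, 26257))    # True
print(egress_allowed(DB_IP, 26257, unresolved, ALLOWED, 26257))  # False
```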

The question now is: why is this happening?

Any ideas on how to debug this problem? Where could the difference be coming from? Do the policies need some tuning for managed Cilium, or is this a bug in GKE? Any help, feedback, or suggestions are appreciated!