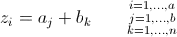

I have data that looks like this:

I want to see how often each seller is chosen within each country. I have done it the long and slow way, like this:

    competitor_by_country <- df %>%
      group_by(country) %>%
      summarise(
        Test_count = sum(!is.na(Test)),
        Test2_count = sum(!is.na(Test2)),
        Shopify_count = sum(!is.na(Shopify_)),
        Aliexpress_count = sum(!is.na(Aliexpress)),
        JD_count = sum(!is.na(JD)),
        Flipkart_count = sum(!is.na(Flipkart_)),
        Rakuten_count = sum(!is.na(Rakuten_)),
        `John Lewis_count` = sum(!is.na(`John Lewis_`)),
        Otto_count = sum(!is.na(Otto_)),
        Noon_count = sum(!is.na(Noon_)),
        `Walmart (3rd Party)_count` = sum(!is.na(`Walmart (3rd Party)`)),
        `Amazon Vendor Central_count` = sum(!is.na(`Amazon Vendor Central_`)),
        `Walmart (Supplier_count` = sum(!is.na(`Walmart (Supplier`)),
        Zalando_count = sum(!is.na(Zalando_)),
        Tmall_count = sum(!is.na(Tmall)),
      )

But this was quite tedious, and I have other data with 50-100 columns. Can someone advise me on an approach to shorten this, such as a loop?
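For reference, one direction that might avoid typing every column is dplyr's `across()` (available from dplyr 1.0.0). The following is only a minimal sketch on made-up toy data, not my real data: the `df` built here, its values, and the choice of `everything()` as the column selection are assumptions for illustration. It applies the same non-`NA` count to every non-grouping column:

```r
library(dplyr)

# Hypothetical toy data: one row per response, NA where a seller was not chosen
df <- tibble(
  country = c("UK", "UK", "US", "US", "US"),
  Test = c("Test", NA, "Test", "Test", NA),
  Tmall = c(NA, NA, "Tmall", NA, "Tmall"),
  `John Lewis_` = c("John Lewis", "John Lewis", NA, NA, NA)
)

competitor_by_country <- df %>%
  group_by(country) %>%
  summarise(
    # Count non-NA values in every column except the grouping column,
    # naming each result "<original column name>_count"
    across(everything(), ~ sum(!is.na(.x)), .names = "{.col}_count")
  )
```

Note that with this naming pattern a column that already ends in an underscore, such as `John Lewis_`, would come out as `John Lewis__count` rather than `John Lewis_count`, so the names would differ slightly from the hand-written version. If only some columns should be counted, `everything()` could presumably be swapped for a narrower selection.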

Here is the output of the current code: