I have a program that reads information from a database. Sometimes a message is bigger than expected, so before sending it to my broker I compress it with this code:

    public static byte[] zipBytes(byte[] input) throws IOException {
        ByteArrayOutputStream bos = new ByteArrayOutputStream(input.length);
        OutputStream ej = new DeflaterOutputStream(bos);
        ej.write(input);
        ej.close();
        bos.close();
        return bos.toByteArray();
    }

Recently I retrieved an 80 MB message from the DB, and when the code above ran, an OutOfMemoryError was thrown on the "ByteArrayOutputStream" line. My Java program has only 512 MB of memory for all of its processing, and I can't give it more.

How can I solve this?

This is not a duplicate question. I can't increase the heap size.

EDIT: This is the flow of my Java code:

    rs = stmt.executeQuery("SELECT data FROM tbl_alotofinfo"); // rs is a valid ResultSet
    while (rs.next()) {
        byte[] bt = rs.getBytes("data");
        // Only rows with content > 60 bytes. I need to know the size of the message,
        // so if I use rs.getBinaryStream I cannot know the size, can I?
        if (bt.length > 60) {
            if (bt.length >= 10000000) {
                // here I need to zip the byte array before sending it
                bt = zipBytes(bt); // this method is above
                // then publish bt to my broker
            } else {
                // here publish the byte array to my broker
            }
        }
    }

EDIT: I've tried PipedInputStream, and the process consumes the same amount of memory as with the zipBytes(byte[] input) method.

    private InputStream zipInputStream(InputStream in) throws IOException {
        PipedInputStream zipped = new PipedInputStream();
        PipedOutputStream pipe = new PipedOutputStream(zipped);
        new Thread(
            () -> {
                try (OutputStream zipper = new DeflaterOutputStream(pipe)) {
                    IOUtils.copy(in, zipper);
                    zipper.flush();
                } catch (IOException e) {
                    IOUtils.closeQuietly(zipped); // close it on error case only
                    e.printStackTrace();
                } finally {
                    IOUtils.closeQuietly(in);
                    IOUtils.closeQuietly(zipped);
                    IOUtils.closeQuietly(pipe);
                }
            }
        ).start();
        return zipped;
    }

How can I compress my InputStream with Deflate?

EDIT

That information is sent to JMS on a Universal Messaging server by Software AG. It uses the Nirvana client (documentation: https://documentation.softwareag.com/onlinehelp/Rohan/num10-2/10-2_UM_webhelp/um-webhelp/Doc/java/classcom_1_1pcbsys_1_1nirvana_1_1client_1_1n_consume_event.html). The data is stored in nConsumeEvent objects, and the documentation shows only two ways to pass that information in: nConsumeEvent(String tag, byte[] data) and nConsumeEvent(String tag, Document adom).

The connection code is:

    nSessionAttributes nsa = new nSessionAttributes("nsp://127.0.0.1:9000");
    MyReconnectHandler rhandler = new MyReconnectHandler();
    nSession mySession = nSessionFactory.create(nsa, rhandler);
    if (!mySession.isConnected()) {
        mySession.init();
    }
    nChannelAttributes chaAtt = new nChannelAttributes();
    chaAtt.setName("mychannel"); // This is a topic
    nChannel myChannel = mySession.findChannel(chaAtt);
    List<nConsumeEvent> messages = new ArrayList<nConsumeEvent>();
    rs = stmt.executeQuery("SELECT data FROM tbl_alotofinfo");
    while (rs.next()) {
        byte[] bt = rs.getBytes("data");
        if (bt.length > 60) {
            nEventProperties prop = new nEventProperties();
            if (bt.length > 10000000) {
                bt = compressData(bt); // here I need to compress the data without ByteArrayInputStream
                prop.put("isZip", "true");
                nConsumeEvent ncon = new nConsumeEvent("1", bt);
                ncon.setProperties(prop);
                messages.add(ncon);
            } else {
                prop.put("isZip", "false");
                nConsumeEvent ncon = new nConsumeEvent("1", bt);
                ncon.setProperties(prop);
                messages.add(ncon);
            }
        }
        nTransactionAttributes tattrib = new nTransactionAttributes(myChannel);
        nTransaction myTransaction = nTransactionFactory.create(tattrib);
        Vector<nConsumeEvent> m = new Vector<nConsumeEvent>(messages);
        myTransaction.publish(m);
        myTransaction.commit();
    }

Because of how the API works, at the end of the day I need to send the information as a byte array, but if that is the only byte array in my code, that is OK. How can I compress the byte array, or the InputStream from rs.getBinaryStream(), in this implementation?
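To make the goal concrete, here is a minimal sketch of the kind of helper being asked for, using only java.io and java.util.zip. The class name DeflateSketch and the methods deflate and inflate are hypothetical and are not part of the Nirvana API. The sketch assumes the InputStream would come from rs.getBinaryStream("data"); whether the JDBC driver really streams the column instead of buffering it internally is not something this sketch establishes. The output buffer starts at its default size and grows with the compressed data, rather than being pre-sized to the uncompressed length as in zipBytes.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.util.zip.DeflaterOutputStream;
import java.util.zip.InflaterInputStream;

// Hypothetical helper class, for illustration only.
class DeflateSketch {

    // Deflates everything readable from `in` and returns the compressed bytes.
    // Uncompressed data passes through one 8 KB chunk at a time.
    static byte[] deflate(InputStream in) throws IOException {
        // Default initial capacity: the buffer grows only as compressed output arrives.
        ByteArrayOutputStream compressed = new ByteArrayOutputStream();
        try (OutputStream zipper = new DeflaterOutputStream(compressed)) {
            copy(in, zipper);
        } // closing the DeflaterOutputStream finishes the deflate stream
        return compressed.toByteArray();
    }

    // Reverse operation, as a consumer might apply it to a compressed payload.
    static byte[] inflate(byte[] data) throws IOException {
        ByteArrayOutputStream plain = new ByteArrayOutputStream();
        try (InputStream unzipper = new InflaterInputStream(new ByteArrayInputStream(data))) {
            copy(unzipper, plain);
        }
        return plain.toByteArray();
    }

    // Plain chunked copy; stands in for IOUtils.copy so the sketch needs no extra library.
    private static void copy(InputStream in, OutputStream out) throws IOException {
        byte[] chunk = new byte[8192];
        int n;
        while ((n = in.read(chunk)) != -1) {
            out.write(chunk, 0, n);
        }
    }
}
```

With this shape, a call such as `byte[] bt = DeflateSketch.deflate(rs.getBinaryStream("data"));` would produce the array to pass to nConsumeEvent, and the helper's own Java-side buffers hold compressed data plus the 8 KB copy chunk. The inflate method shows the matching step a Java consumer might apply when the isZip property is "true".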

EDIT

The database server used is PostgreSQL v11.6

EDIT

I've applied the first solution from @VGR and it works fine.

One more thing: the SELECT query runs inside a while(true) loop, like this:

    while (true) {
        rs = stmt.executeQuery("SELECT data FROM tbl_alotofinfo"); // rs is a valid ResultSet
        // all the implementation shown earlier in this post
        Thread.sleep(10000);
    }

So the SELECT is executed every few seconds. I ran a test with my program, and the memory just keeps growing on every iteration. Why? If the database returns the same information on each request, shouldn't the memory stay at the level of the first request? Or did I forget to close a stream?

    while (true) {
        rs = stmt.executeQuery("SELECT data FROM tbl_alotofinfo"); // rs is a valid ResultSet
        while (rs.next()) {
            //byte[] bt = rs.getBytes("data");
            byte[] bt;
            try (BufferedInputStream source = new BufferedInputStream(
                    rs.getBinaryStream("data"), 10_000_001)) {
                source.mark(this.zip+1);
                boolean sendToBroker = true;
                boolean needCompression = true;
                for (int i = 0; i <= 10_000_000; i++) {
                    if (source.read() < 0) {
                        sendToBroker = (i > 60);
                        needCompression = (i >= this.zip);
                        break;
                    }
                }
                if (sendToBroker) {
                    nEventProperties prop = new nEventProperties();
                    // Rewind stream
                    source.reset();
                    if (needCompression) {
                        // Message text: "Message size larger than expected. Compressing message"
                        System.out.println("Tamaño del mensaje mayor al esperado. Comprimiendo mensaje");
                        ByteArrayOutputStream byteStream = new ByteArrayOutputStream();
                        try (OutputStream brokerStream = new DeflaterOutputStream(byteStream)) {
                            IOUtils.copy(source, brokerStream);
                        }
                        bt = byteStream.toByteArray();
                        prop.put("zip", "true");
                    } else {
                        bt = IOUtils.toByteArray(source);
                    }
                    System.out.println("size: "+bt.length);
                    prop.put("host", this.host);
                    nConsumeEvent ncon = new nConsumeEvent(""+rs.getInt("xid"), bt);
                    ncon.setProperties(prop);
                    messages.add(ncon);
                }
            }
        }
    }
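The size probe used in the loop above (mark the stream, read ahead, then reset) can be isolated into a small helper. The following is a sketch under assumptions, with the hypothetical names SizeProbeSketch and countUpTo; it reads in chunks rather than byte by byte but is otherwise the same idea. One thing it makes visible: BufferedInputStream has to retain every byte read after mark() so that reset() can rewind, so its internal buffer can grow to as much as `limit` bytes.

```java
import java.io.BufferedInputStream;
import java.io.IOException;

// Hypothetical helper class, for illustration only.
class SizeProbeSketch {

    // Counts how many bytes the stream holds, looking no further than `limit`,
    // then rewinds so the caller can read the stream again from the same position.
    static int countUpTo(BufferedInputStream source, int limit) throws IOException {
        source.mark(limit); // the mark stays valid while at most `limit` bytes are read
        byte[] chunk = new byte[8192];
        int total = 0;
        while (total < limit) {
            int n = source.read(chunk, 0, Math.min(chunk.length, limit - total));
            if (n < 0) {
                break; // end of stream reached before the limit
            }
            total += n;
        }
        source.reset(); // back to the marked position
        return total;
    }
}
```

A result equal to `limit` means the payload is at least that large (the compression case in the loop above); a smaller result is the exact payload size.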

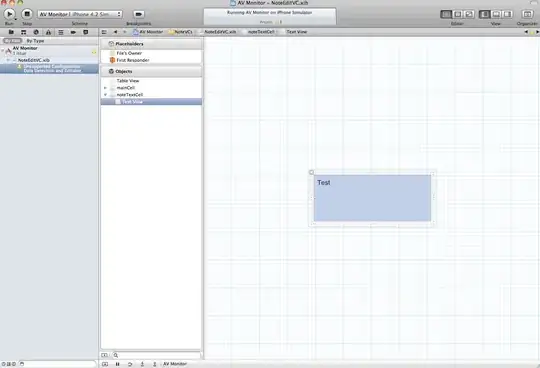

For example, this is the heap memory at two points in time. The first time, memory use went above 500 MB; the second time (with the same information from the database), it went above 1000 MB.