I am attempting to collect data from a shop in a game ( starbase ) in order to feed the data to a website in order to be able to display them as a candle stick chart

So far I have started using Tesseract OCR 5.0.0 and I have been running into issues as I cannot get the values reliably

I have seen that the images can be pre-processed in order to increase the reliability but I have run into a bottleneck as I am not familiar enough with Tesseract and OpenCV in order to know what to do more

Please note that since this is an in-game UI the images are going to be very constant as there is no colour variations / light changes / font size changes / ... I technically only need to get it to work once and that's it

Here are the steps I have taken so far and the results :

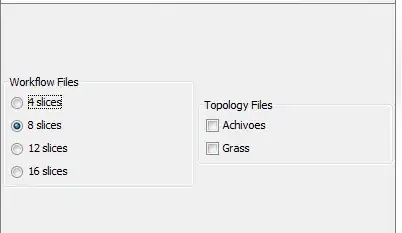

I have started by getting a screen of only the part of the UI I am interested in in order to remove as much clutter as possible

I have then set a threshold as shown here ( I will also be using the cropping part when doing the automation but I am not there yet ), set the language to English and the psm argument to 6 witch gives me the following code :

import cv2

import pytesseract

def clean_text(text):

ret = text.replace("\n\n", "\n") # remove the blank lines

return ret

pytesseract.pytesseract.tesseract_cmd = r'C:\Program Files\Tesseract-OCR\tesseract'

img = cv2.imread('screens/ressources_list_array_1.png', 0)

thresh = 255 - cv2.threshold(img, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

print("======= Output")

print(clean_text(pytesseract.image_to_string(thresh, lang='eng', config='--psm 6')))

cv2.imshow('thresh', thresh)

cv2.waitKey()

Here is an example of the output I get :

======= Output

Aegisium Ore 4490 456

Ajatite Ore 600 332

Arkanium Ore 84999 53

Bastium Ore 2350 421

Charodium Ore 5 280 366

Corazium Ore 39 896 212

Exorium Ore 5 380 112

Ice 980 141

Karnite Crystal ele) 111

Kutonium Ore 14 000 215

Lukium Ore 31 000 158

Nhurgite Crystal 3144 64

Surtrite Crystal 4198 70

Valkite Ore 545 150

Vokarium Ore 1850 415

Ymrium Ore 69 899 60

There are two main issues :

1 - It is not reliable enough, you can see it confused 6 000 with ele)

2 - it is not properly understanding where the numbers start and end, making the differentiation of the 2 columns difficult

I think I can solve the second issue by further splitting the image into 3 columns but I am unsure if it's not going to be a big hit on CPU / GPU usage witch I would preferably avoid

I also found the documentation of OpenCV that shows all of the possible Image processing methods but there is a lot and I am unsure on witch ones to use to further increase reliability

Any help is much appreciated