I would like to robustly stop a video when it reaches certain specified frames, so that I can give oral presentations based on videos made with Blender, Manim...

I'm aware of this question, but the problem is that the video does not stop exactly on the right frame. Sometimes it continues forward for one frame, and when I force it back to the intended frame we see the video going backward, which looks weird. Even worse, if the next frame is completely different (different background...), this is very visible.

To illustrate my issue, I created a demo project here (just click "next" and see that when the video stops, it sometimes goes backward). The full code is here.

The important part of the code I'm using is:

    var video = VideoFrame({
        id: 'video',
        frameRate: 24,
        callback: function(curr_frame) {
            // Stop the video when it arrives on one of the frames to stop at.
            if (stopFrames.includes(curr_frame)) {
                console.log("Automatic stop: found stop frame.");
                pauseMyVideo();
                // Ensure we are on the proper frame.
                video.seekTo({frame: curr_frame});
            }
        }
    });

So far, I work around this issue by stopping one frame before the target and then using seekTo (not sure how sound this is), as demonstrated here. But as you can see, when moving on to the next frame it sometimes "freezes" a bit: I guess this is when the stop happens right before the seekTo.
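The stop-then-seek workaround depends on how frame numbers are mapped to timestamps. Here is a minimal sketch of such a mapping, assuming a constant frame rate and 0-based frame indices. The helpers `frameToTime`, `timeToFrame` and `pauseOnFrame` are hypothetical names for illustration and are not part of VideoFrame.js. Targeting the middle of a frame's time interval is a precaution against rounding onto a neighbouring frame, not a guarantee of frame accuracy.

```javascript
// Hypothetical helpers, assuming a constant frame rate (fps) and that
// frame n (0-based) covers the interval [n / fps, (n + 1) / fps).

// Timestamp in seconds at the middle of the given frame.
function frameToTime(frame, fps) {
  return (frame + 0.5) / fps;
}

// Frame index containing the given timestamp. The small epsilon absorbs
// floating-point error for timestamps that sit exactly on a boundary.
function timeToFrame(time, fps) {
  return Math.floor(time * fps + 1e-6);
}

// Pause, then seek to the middle of the wanted frame.
// `video` is expected to behave like an HTMLVideoElement.
function pauseOnFrame(video, frame, fps) {
  video.pause();
  video.currentTime = frameToTime(frame, fps);
}
```

For example, `pauseOnFrame(document.getElementById('video'), 120, 25)` would pause and seek to 4.82 s, which lies inside frame 120 under the assumptions above.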

PS: if you know a reliable way in JS to know the number of frames of a given video, I'm also interested.
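As far as I know, HTMLMediaElement exposes no frame-count property, so the usual fallback is an estimate from the duration. The sketch below assumes a constant, known frame rate; `estimateFrameCount` and `whenFrameCountKnown` are hypothetical helper names. The result can be off by a frame or so, for instance if the reported duration follows the audio track rather than the video track.

```javascript
// Estimate only: assumes a constant frame rate that the caller already knows.
function estimateFrameCount(durationSeconds, fps) {
  return Math.round(durationSeconds * fps);
}

// Calls onCount(estimate) once the duration is available.
// `video` is expected to behave like an HTMLVideoElement.
function whenFrameCountKnown(video, fps, onCount) {
  const HAVE_METADATA = 1; // readyState value at which duration is known
  if (video.readyState >= HAVE_METADATA) {
    onCount(estimateFrameCount(video.duration, fps));
    return;
  }
  video.addEventListener(
    'loadedmetadata',
    () => onCount(estimateFrameCount(video.duration, fps)),
    { once: true }
  );
}
```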

Concerning the idea of cutting the video beforehand on the desktop: this could be used... but I have had bad experiences with it in the past, notably because switching between videos sometimes produces glitches. It can also be more complicated to use, as it means the video has to be manually cut many times, re-encoded...

EDIT: Is there any solution based, for instance, on WebAssembly (more compatible with old browsers) or WebCodecs (more efficient, but not yet widespread)? WebCodecs seems to allow pretty amazing things, but I'm not sure how to use it for this. I would love to hear solutions based on both, since Firefox does not support WebCodecs yet. Note that it would be great if audio were not lost in the process. Bonus if I can also make controls appear on request.

EDIT: I'm not sure I understand what's happening here (source)... But it seems to do something close to what I need (using WebAssembly, I think), since it manages to play a video in a canvas, with frame... Here is another website that does something close to what I need using WebCodecs. But I'm not sure how to reliably synchronize sound and video with WebCodecs.

EDIT: answer to the first question

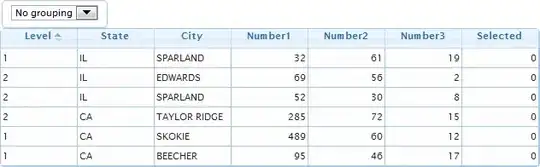

Concerning the frame rate: indeed, I chose it poorly; it was 25, not 24. But even with a frame rate of 25, I still don't get a frame-precise stop in either Firefox or Chromium. For instance, here is a recording (using OBS) of your demo (I see the same with mine when I use 25 instead of 24):

One frame later, see that the butterfly "flies backward" (this may not be very visible in still screenshots, but look for instance at the position of the lower left wing relative to the flowers):

I can see several potential reasons. First (I think this is the most likely one), I have heard that video.currentTime does not always report the time accurately; maybe that explains why it fails here? It seems accurate enough for changing the current frame (I can go forward and backward by one frame quite reliably, as far as I can see), but people reported here that in Chromium video.currentTime is computed from the audio time rather than the video time, leading to some inconsistencies (I observe similar inconsistencies in Firefox), and here that it may reflect either the time at which the frame is sent to the compositor or the time at which the frame is actually displayed by the compositor (if it is the latter, that could explain the delay we sometimes see). This would also explain why requestVideoFrameCallback is better, as it also provides the current media time.

The second possible reason is that setInterval may not be precise enough... However, requestAnimationFrame is not really better (requestVideoFrameCallback is not available in Firefox), even though it should fire 60 times per second, which should be fast enough.

The third option I can see is that the .pause function may take a while to take effect... so that by the time the call completes, the video has already played another frame. On the other hand, your example using requestVideoFrameCallback https://mvyom.csb.app/requestFrame.html seems to work pretty reliably, and it uses .pause! Unfortunately it only works in Chromium, not in Firefox. I see that you use metadata.mediaTime instead of currentTime; maybe this is more precise than currentTime.
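For reference, the standard name of the per-video-frame callback API is `HTMLVideoElement.requestVideoFrameCallback()`, and its callback receives a metadata object that includes `mediaTime`. Below is a minimal sketch of stopping on given frames with it, assuming a constant frame rate and 0-based frame indices. `stopOnFrames` and its parameters are hypothetical names, and this is not the code of the linked demo.

```javascript
// Hypothetical helper. `stopFrames` is a Set of 0-based frame indices and
// `fps` is assumed constant. Returns false when the API is unavailable so
// the caller can fall back to another strategy.
function stopOnFrames(video, fps, stopFrames, onStop) {
  if (typeof video.requestVideoFrameCallback !== 'function') {
    return false;
  }
  let lastStopped = -1; // avoids pausing again on the frame we resume from

  const onFrame = (now, metadata) => {
    // mediaTime is the presentation timestamp of the frame being presented,
    // so rounding mediaTime * fps gives the frame index under our assumption.
    const frame = Math.round(metadata.mediaTime * fps);
    if (stopFrames.has(frame)) {
      if (frame !== lastStopped) {
        lastStopped = frame;
        video.pause();
        onStop(frame);
      }
    } else {
      lastStopped = -1; // left the stop frame; allow stopping there again
    }
    // Each registration fires once, so register again for the next frame.
    video.requestVideoFrameCallback(onFrame);
  };

  video.requestVideoFrameCallback(onFrame);
  return true;
}
```

A call might look like `stopOnFrames(document.getElementById('video'), 25, new Set([50, 120]), f => console.log('stopped on', f))`. Whether pausing inside the callback always keeps the displayed image on that exact frame is not something this sketch can guarantee; it only avoids relying on currentTime.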

The last option is that there may be something subtle concerning vsync, as explained in this page. It also reports that expectedDisplayTime may help solve this issue when using requestVideoFrameCallback.