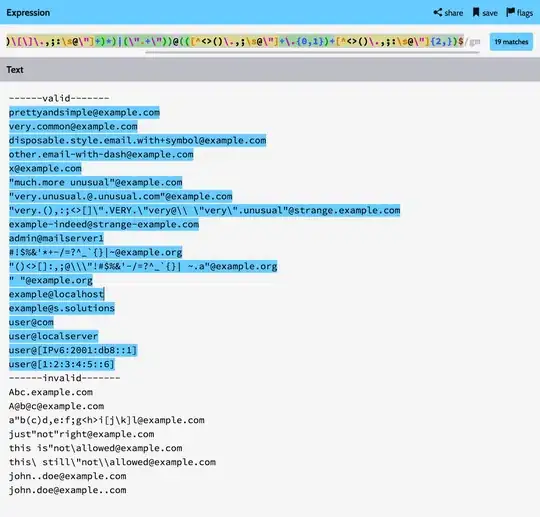

For each entry listed on this page, I need to click through to the entry and then scrape the URL of the Excel file shown in the bottom-left part of that page:

How can I achieve this with web-scraping packages in R, such as rvest? Many thanks in advance.

    library(rvest)

    # Start by reading an HTML page with read_html():
    common_list <- read_html("http://www.csrc.gov.cn/csrc/c100121/common_list.shtml")

    webtxt <- common_list %>%
      # select all <a> (link) nodes
      rvest::html_nodes("a") %>%
      # extract the visible text of each link
      rvest::html_text()

    # inspect
    head(webtxt)

My first question: how should I set up html_nodes() to get the URL of each entry's page?
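For reference, one common way to get link targets rather than link text is to read the `href` attribute with `html_attr()` and resolve relative paths against the page URL. The sketch below is only an illustration: the inline HTML is a hypothetical stand-in for the listing page (the real markup may differ, and if the list is filled in by JavaScript, `read_html()` alone may not see the entries).

```r
library(rvest)
library(xml2)

base_url <- "http://www.csrc.gov.cn/csrc/c100121/common_list.shtml"

# Hypothetical stand-in for the listing page; the real markup may differ.
html <- '<ul>
  <li><a href="/csrc/c100121/entry1/content.shtml">Entry 1</a></li>
  <li><a href="entry2/content.shtml">Entry 2</a></li>
  <li><a>Link without href</a></li>
</ul>'
page <- read_html(html)  # for the live site: read_html(base_url)

# Read the href attribute instead of the link text
hrefs <- page %>%
  html_nodes("a") %>%
  html_attr("href")

# Drop links that have no href, then turn relative paths into absolute URLs
hrefs <- hrefs[!is.na(hrefs)]
entry_urls <- xml2::url_absolute(hrefs, base_url)

print(entry_urls)
```

A narrower CSS selector than `"a"` would avoid picking up navigation links, but choosing one requires inspecting the actual page structure.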

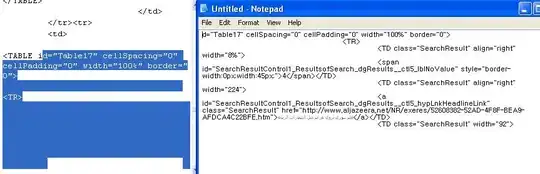

Update:

    > driver
    $client
    [1] "No sessionInfo. Client browser is mostly likely not opened."

    $server
    PROCESS 'file105483d2b3a.bat', running, pid 37512.

    > remDr
    $remoteServerAddr
    [1] "localhost"

    $port
    [1] 4567

    $browserName
    [1] "chrome"

    $version
    [1] ""

    $platform
    [1] "ANY"

    $javascript
    [1] TRUE

    $nativeEvents
    [1] TRUE

    $extraCapabilities
    list()

When I run `remDr$navigate(url)`, I get:

    Error in checkError(res) :
      Undefined error in httr call. httr output: length(url) == 1 is not TRUE
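Two things may be worth ruling out here, though neither is confirmed as the cause: that `url` is not a single character string, and that no browser session has been opened yet (the `driver` output above reports "No sessionInfo"). A minimal sketch of both checks follows; `is_single_url` is a hypothetical helper, and the commented-out calls assume a Selenium server listening on port 4567 as in the output above.

```r
# Hypothetical helper: TRUE only for one non-missing character string
is_single_url <- function(x) {
  is.character(x) && length(x) == 1L && !is.na(x)
}

urls <- c("http://www.csrc.gov.cn/csrc/c100121/common_list.shtml")

# Check each candidate before handing it to the browser
ok <- vapply(urls, is_single_url, logical(1))
print(ok)

# With a live Selenium server (assumption: port 4567, Chrome):
# remDr$open()                 # start the browser session first
# for (u in urls[ok]) {
#   remDr$navigate(u)          # pass one URL string at a time
# }
```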