Q : Any ways for preventing memory crash ?

A :

Sure,

I have had similar experience, going back to Win-XP, with Py2.7 n_jobs crashing in the very same way.

Solution :

Step 1 :

Profile the RAM-allocation envelope of a run with n_jobs = 1 and otherwise the same settings. One can create, even inside the Python interpreter, a signal-based automated memory monitor / recorder that profiles the real-, virtual-, ... memory usage, sampling at any fine or coarse time-quantum and logging either all such data or just the moving-window maximums. The details go beyond the scope of this post, yet one may reuse my other posts on this and use the signal.signal( signal.SIGUSR1, ... ) tools for a hand-made "monitor-n-logger".
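A minimal sketch of such a "monitor-n-logger" may look like the one below. It is an illustration under assumptions, not the tooling from my other posts: it swaps the SIGUSR1 trigger for a SIGALRM interval timer ( signal.setitimer() ), so it is Unix-only, and the names samples, record_peak_rss, start_monitor and stop_monitor are hypothetical. It reads resource.getrusage(), which reports the peak resident-set size of the calling process only, so it suits the in-process n_jobs = 1 run.

```python
import resource
import signal
import time

samples = []              # (seconds since import, peak RSS) pairs
_t0 = time.monotonic()


def record_peak_rss(signum, frame):
    """Signal handler: log the peak resident-set size reached so far."""
    # ru_maxrss is a high-water mark: kilobytes on Linux, bytes on macOS.
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    samples.append((time.monotonic() - _t0, peak))


def start_monitor(interval_s=0.5):
    """Strobe record_peak_rss every interval_s seconds (assumed default)."""
    signal.signal(signal.SIGALRM, record_peak_rss)
    signal.setitimer(signal.ITIMER_REAL, interval_s, interval_s)


def stop_monitor():
    """Disarm the timer and return the largest peak seen, or None."""
    signal.setitimer(signal.ITIMER_REAL, 0)
    signal.signal(signal.SIGALRM, signal.SIG_DFL)
    return max(peak for _, peak in samples) if samples else None
```

One would call start_monitor() right before the .fit() and stop_monitor() right after it. Python runs signal handlers in the main thread between bytecodes, so a long call into compiled code may delay a sample; as ru_maxrss is a high-water mark, the peak itself is not lost.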

Step 2 :

Having obtained the RAM-allocation & virtual-memory usage, from visual inspection or from the hard data collected in Step 1, spawn as many n_jobs as fit into the physical RAM ( minus one, if you use the host for other work during the RandomForestPREDICTOR.fit()-computing ). My use-case was a pool of dedicated, headless hosts running a .fit()-training cavalry of predictor models on many machines in an embarrassingly parallel orchestration, each at 100% CPU-usage and with zero memory-I/O swaps for about 30+ hours, to meet the large-scale re-training & model-selection deadlines.
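The sizing rule from Step 2 can be sketched as plain arithmetic. This is a hedged illustration: the function names and the headroom factor of 0.9 are my assumptions, and it presumes that each job needs roughly the peak measured in Step 1 with n_jobs = 1, which has to be verified for the actual backend in use. The os.sysconf() lookup of the physical RAM is assumed to be available, as it is on Linux.

```python
import os


def physical_ram_bytes():
    """Physical RAM size via POSIX sysconf (works on Linux)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")


def max_n_jobs(per_job_peak_bytes, ram_bytes, n_cores,
               reserve_one=False, headroom=0.9):
    """How many jobs fit into physical RAM without touching swap.

    headroom (an assumed safety factor) leaves part of the RAM to the
    O/S; reserve_one keeps one slot free if the host does other work.
    """
    fit_in_ram = int(ram_bytes * headroom // per_job_peak_bytes)
    n = min(fit_in_ram, n_cores)      # never more jobs than cpu-cores
    if reserve_one:
        n -= 1
    return max(1, n)                  # always run at least one job
```

A call such as max_n_jobs( per_job_peak_bytes, physical_ram_bytes(), os.cpu_count() ) then yields a candidate to pass as n_jobs. Mind the units: ru_maxrss on Linux is in kilobytes, so multiply by 1024 first.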

That said, CPU-usage is not your main enemy here, inefficient RAM usage is.

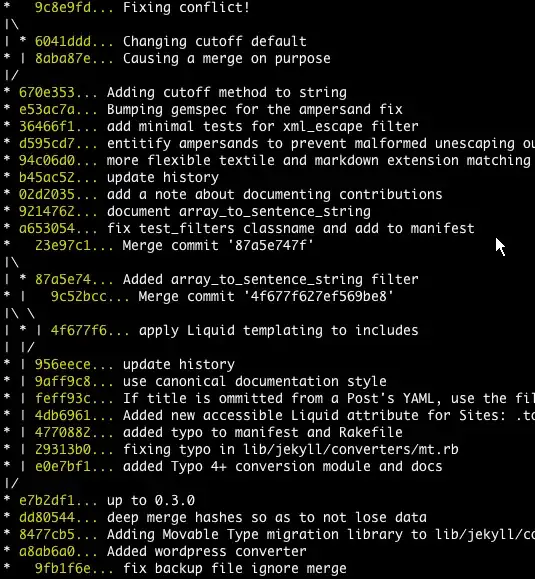

Your graph shows :

- swap-thrashing did not start ( good )

- cpu-hopping did start, at the cost of reduced cpu-cache data re-use: once a job has been moved away, the data previously kept in the LRU-cache needs to get re-fetched from RAM, which is roughly two orders of magnitude slower than sourcing it from the cpu-core-local L1d-cache ( thermal throttling on big workloads makes cpu-cores hot and the hardware starts moving jobs from one core to another, hopefully a cooler one )

- cpu workloads are not as hell-hard as they could be ( as in some even harder number-crunching ), because RandomForestPREDICTOR-s keep moving and crawling through all the [M,N]-sized data ( N being the number of examples in the sub-set elected for the .fit()-training, yet still "long" ) during the computation, which gives the cpu some time to rest while waiting for the next part of data to be fed in. So there are times when a CPU-core is not harnessed to its 100% capacity