I want to find the tree depth and the number of leaves actually assigned in my XGBoost regression model.

- https://stackoverflow.com/questions/50426248/how-to-know-the-number-of-tree-created-in-xgboost – user4718221 Jan 15 '22 at 08:40

- Does this answer your question? [How to know the number of tree created in XGBoost](https://stackoverflow.com/questions/50426248/how-to-know-the-number-of-tree-created-in-xgboost) – user4718221 Jan 15 '22 at 08:41

- "How to know the number of tree created in XGBoost" gives the number of trees in the forest, but I would like to know the depth of each tree. – Arravind J Jan 15 '22 at 09:14

1 Answer

To get this information you need to get the booster object back. I assume you are using the scikit-learn interface, so here is an example using a model with 3 estimators (trees) and a maximum depth of 7:

    import xgboost as xgb
    from sklearn.datasets import make_classification

    X, y = make_classification(random_state=99)
    clf = xgb.XGBModel(objective='binary:logistic', n_estimators=3, max_depth=7)
    clf.fit(X, y)

In this case, we pull out the booster object and also convert the tree information to a dataframe:

    booster = clf.get_booster()
    tree_df = booster.trees_to_dataframe()
    tree_df[tree_df['Tree'] == 0]

        Tree  Node    ID  Feature      Split   Yes    No  Missing       Gain  Cover
     0     0     0   0-0      f11  -0.233068   0-1   0-2      0-1  48.161629  25.00
     1     0     1   0-1       f1  -1.081945   0-3   0-4      0-3   0.054384   9.25
     2     0     2   0-2      f14   0.480458   0-5   0-6      0-5   8.410727  15.75
     3     0     3   0-3     Leaf        NaN   NaN   NaN      NaN  -0.150000   1.00
     4     0     4   0-4     Leaf        NaN   NaN   NaN      NaN  -0.535135   8.25
     5     0     5   0-5      f18   0.261421   0-7   0-8      0-7   5.638095   6.50
     6     0     6   0-6       f9  -1.585489   0-9  0-10      0-9   0.727795   9.25
     7     0     7   0-7      f18  -0.640538  0-11  0-12     0-11   4.342857   4.00
     8     0     8   0-8       f0   0.072811  0-13  0-14     0-13   1.028571   2.50
     9     0     9   0-9     Leaf        NaN   NaN   NaN      NaN   0.163636   1.75
    10     0    10  0-10     Leaf        NaN   NaN   NaN      NaN   0.529412   7.50
    11     0    11  0-11     Leaf        NaN   NaN   NaN      NaN  -0.120000   1.50
    12     0    12  0-12     Leaf        NaN   NaN   NaN      NaN   0.428571   2.50
    13     0    13  0-13     Leaf        NaN   NaN   NaN      NaN  -0.000000   1.00
    14     0    14  0-14     Leaf        NaN   NaN   NaN      NaN  -0.360000   1.50
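
Since the question also asks for the number of leaves, here is a minimal sketch of how rows like these could be turned into a depth and a leaf count per tree: follow the `Yes`/`No` child IDs down from the root and count the rows whose `Feature` is `Leaf`. The helper name `tree_stats` and the hand-copied `ROWS` list are illustrative assumptions, not part of XGBoost; only the `ID`, `Feature`, `Yes` and `No` values of tree 0 are used, and the root is assumed to have the ID `<tree>-0`.

```python
# Tree 0, reduced to the columns the traversal needs: (ID, Feature, Yes, No).
# Leaves have no children, so Yes/No are None here (NaN in the real dataframe).
ROWS = [
    ("0-0", "f11", "0-1", "0-2"), ("0-1", "f1", "0-3", "0-4"),
    ("0-2", "f14", "0-5", "0-6"), ("0-3", "Leaf", None, None),
    ("0-4", "Leaf", None, None), ("0-5", "f18", "0-7", "0-8"),
    ("0-6", "f9", "0-9", "0-10"), ("0-7", "f18", "0-11", "0-12"),
    ("0-8", "f0", "0-13", "0-14"), ("0-9", "Leaf", None, None),
    ("0-10", "Leaf", None, None), ("0-11", "Leaf", None, None),
    ("0-12", "Leaf", None, None), ("0-13", "Leaf", None, None),
    ("0-14", "Leaf", None, None),
]
rows = [{"Tree": 0, "ID": i, "Feature": f, "Yes": y, "No": n}
        for i, f, y, n in ROWS]


def tree_stats(rows):
    """Return {tree index: (depth, number of leaves)} for dataframe-style rows."""
    nodes = {row["ID"]: row for row in rows}
    stats = {}
    for tree in sorted({row["Tree"] for row in rows}):
        depth, leaves = 0, 0
        stack = [(f"{tree}-0", 0)]  # (node ID, number of splits above the node)
        while stack:
            node_id, level = stack.pop()
            node = nodes[node_id]
            if node["Feature"] == "Leaf":
                leaves += 1
                depth = max(depth, level)
            else:
                stack.append((node["Yes"], level + 1))
                stack.append((node["No"], level + 1))
        stats[tree] = (depth, leaves)
    return stats


print(tree_stats(rows))  # {0: (4, 8)}
```

With a fitted model, the same kind of rows could come from `booster.trees_to_dataframe().to_dict("records")`. With the scikit-learn wrappers, `booster` is the object returned by `get_booster()`.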

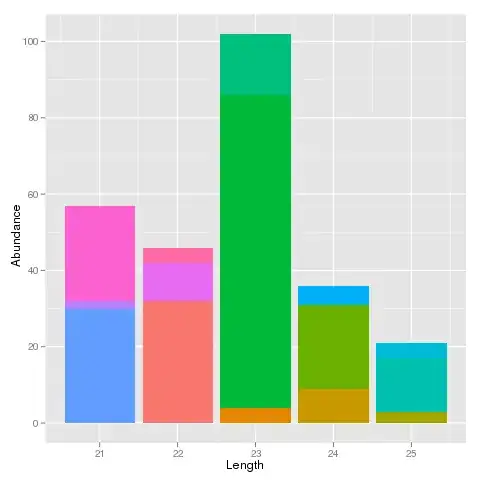

The first tree is visualized above. The depth of a decision tree is the number of splits along the longest path from the root to a leaf, so this tree has a depth of 4. The plot was produced with:

    xgb.plotting.plot_tree(booster, num_trees=0)

There may be a better solution, but as a quick approach I adapted the solution from this post to iterate through the JSON output and calculate the depth of each tree:

    import json

    def item_generator(json_input, lookup_key):
        """Recursively yield every value stored under lookup_key."""
        if isinstance(json_input, dict):
            for k, v in json_input.items():
                if k == lookup_key:
                    yield v
                else:
                    yield from item_generator(v, lookup_key)
        elif isinstance(json_input, list):
            for item in json_input:
                yield from item_generator(item, lookup_key)

    def tree_depth(json_text):
        json_input = json.loads(json_text)
        # 'depth' is recorded on split nodes; the leaves sit one level deeper.
        return max(item_generator(json_input, 'depth')) + 1

    [tree_depth(x) for x in booster.get_dump(dump_format="json")]

    [4, 4, 5]
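
The same JSON dump can also give the number of leaves: split nodes carry a `children` list, while leaves carry a `leaf` value. The snippet below is only a sketch on a small hand-written tree in that shape. The sample values are made up rather than taken from the model above, and `count_leaves` and `leaf_depth` are hypothetical helper names.

```python
import json

# Hypothetical tree, written by hand in the shape of one entry of
# booster.get_dump(dump_format="json"); the numbers are invented.
SAMPLE_DUMP = json.dumps({
    "nodeid": 0, "depth": 0, "split": "f11", "split_condition": -0.23,
    "yes": 1, "no": 2, "missing": 1,
    "children": [
        {"nodeid": 1, "leaf": -0.15},
        {"nodeid": 2, "depth": 1, "split": "f14", "split_condition": 0.48,
         "yes": 3, "no": 4, "missing": 3,
         "children": [
             {"nodeid": 3, "leaf": 0.16},
             {"nodeid": 4, "leaf": 0.53},
         ]},
    ],
})


def count_leaves(node):
    """Count the nodes that carry a 'leaf' value in one parsed tree."""
    if "leaf" in node:
        return 1
    return sum(count_leaves(child) for child in node.get("children", []))


def leaf_depth(node, level=0):
    """Number of splits on the longest root-to-leaf path."""
    if "leaf" in node:
        return level
    return max(leaf_depth(child, level + 1) for child in node["children"])


tree = json.loads(SAMPLE_DUMP)
print(count_leaves(tree))  # 3
print(leaf_depth(tree))    # 2
```

For a fitted model this would be applied per tree, for example `[count_leaves(json.loads(t)) for t in booster.get_dump(dump_format="json")]`.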

StupidWolf

- Thank you for your answer. I am using the XGBoost regressor, and it raises an attribute error (no attribute 'get_dump' on the XGBoost regressor). Also, for the tree-to-dataframe conversion I used `booster.get_booster().trees_to_dataframe()`. – Arravind J Jan 17 '22 at 03:39

- I think that since each node is only split by 2, we can take the `np.log2` of the number of nodes per tree, no? – Itamar Mushkin Dec 27 '22 at 09:40
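
A quick check of the `np.log2` idea, as a sketch: the node count alone only bounds the depth, because the trees are not guaranteed to be complete. A binary tree with d levels of splits has at most 2^(d+1) - 1 nodes, so the logarithm gives the smallest depth that could hold that many nodes, not necessarily the actual one. Tree 0 in the answer has 15 nodes and a depth of 4, whereas a complete tree with 15 nodes would have a depth of 3. The helper name `min_depth_for_nodes` is hypothetical, and the standard library's `math.log2` stands in for `np.log2`.

```python
import math


def min_depth_for_nodes(n_nodes):
    """Smallest depth (in splits) a binary tree with n_nodes nodes can have."""
    return math.ceil(math.log2(n_nodes + 1)) - 1


n_nodes = 15      # rows of tree 0 in the dataframe from the answer
actual_depth = 4  # depth of tree 0 as stated in the answer

print(min_depth_for_nodes(n_nodes))                  # 3
print(min_depth_for_nodes(n_nodes) <= actual_depth)  # True
```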