I'm trying to plot my data on a log-log scale, using Theil-Sen regression for the best fit line. However, when I plot work out my regression line on a log-scale 2, it's parallel to my x=y line, which I don't think is correct.

normal scale for X and y :

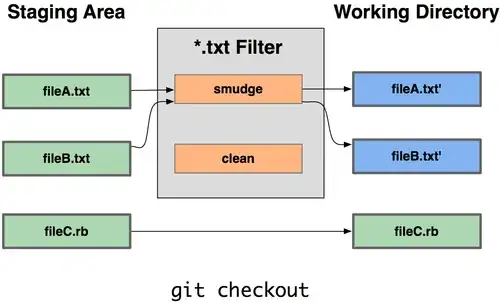

log-log scale for X and y :

I found a related solution by chaooder for Linear Regression on a semi-log scale to be somewhat helpful. So currently, my regression line would go from being:

y = ax + c on a linear scale to y = 10^^(a log(x)+c) on my log-log scale. But in my head, I can't understand how that has a solution as I cannot calculate a.

Here's the data:

index,x,y

0,0.22,0.26

1,0.39,0.1

2,0.4,0.17

3,0.56,0.41

4,0.57,0.12

5,0.62,0.54

6,0.78,0.99

7,0.79,0.35

8,0.8,0.33

9,0.83,0.91

10,0.95,0.81

11,1.08,0.23

12,1.34,0.11

13,1.34,0.44

14,1.35,0.11

15,1.58,0.24

16,1.66,0.71

17,2.11,0.54

18,2.13,0.42

19,2.19,1.72

20,2.25,2.16

21,2.39,0.95

22,2.4,0.16

23,2.73,0.92

24,2.87,1.1

25,2.96,0.27

26,3.12,1.66

27,3.26,0.06

28,3.28,0.68

29,3.34,0.7

30,3.38,1.14

31,3.39,1.81

32,3.41,0.19

33,3.49,1.4

34,3.52,1.57

35,3.6,0.99

36,3.64,1.28

37,3.65,1.68

38,3.89,1.66

39,3.93,1.64

40,4.01,1.04

41,4.07,0.32

42,4.22,0.68

43,4.52,0.57

44,4.53,0.59

45,4.56,0.7

46,4.6,1.15

47,4.62,1.31

48,4.68,1.09

49,5.03,0.48

50,5.06,0.7

51,5.31,0.62

52,5.41,0.21

53,5.45,2.06

54,6.0,0.72

55,6.06,0.36

56,6.64,1.41

57,6.74,0.59

58,6.96,0.95

59,7.01,1.13

60,7.14,1.56

61,7.14,2.82

62,7.19,1.49

63,7.21,0.88

64,7.23,1.31

65,7.55,0.76

66,7.72,0.5

67,7.75,1.65

68,7.77,1.48

69,7.9,1.8

70,7.95,0.68

71,8.03,1.12

72,8.09,2.61

73,8.86,1.71

74,9.31,0.23

75,9.5,2.35

76,9.62,1.84

77,9.91,0.56

78,9.95,1.67

79,10.4,1.15

80,10.8,0.88

81,11.28,1.8

82,11.31,1.58

83,11.43,1.0

84,12.38,2.83

85,13.38,1.45

86,13.9,1.99

87,30.3,1.99

And my current code:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.ticker import AutoMinorLocator

from sklearn.linear_model import TheilSenRegressor

for log in [True, False]:

fig,ax = plt.subplots()

data.plot.scatter(ax=ax,

x='x',

y='y',

loglog=log)

vmin = np.amin(data[['x','y']].min().values)*0.8

vmax = np.amax(data[['x','y']].max().values)*1.25

ax.set_xlim(vmin,vmax)

ax.set_ylim(vmin,vmax)

ax.yaxis.set_minor_locator(AutoMinorLocator())

ax.xaxis.set_minor_locator(AutoMinorLocator())

# best fit (ThielSen) line

X = data.x.values[:,np.newaxis]

y = data.y.values

if log:

X = np.log10(X)

y = np.log10(y)

if len(y) > 0:

estimator = TheilSenRegressor(fit_intercept=False) # intentionally set intercept to 0

estimator.fit(X=X,y=y)

y0 = y[0]

x0 = X[0]

y_pred = estimator.predict(np.array([vmin,vmax]).reshape(2,1))

# y_pred = np.power(10,(estimator.predict(X)))

gradient = (y_pred[1] - y_pred[0]) / (vmax - vmin)

intercept = y_pred[1] - gradient * vmax

print(f'gradient: {gradient} \n intercept: {intercept}')

# Theil-Sen regression line

ax.plot([vmin,vmax],y_pred,color='red',lw=1,zorder=1,label='Best fit')

# 1:1 ratio line (black, dashed)

ax.plot([vmin,vmax],[vmin,vmax],lw=1,color='black',ls='--',alpha=0.6,zorder=1,

label='1:1 correlation')

if log:

ax.set_xscale('log');ax.set_yscale('log')

ax.set_title('log-log scale')

fig.savefig('TS_regression_loglog.png')

else:

ax.set_title('normal scale')

fig.savefig('TS_regression_normalscale.png')