If you plan to use the SpatialDropout1D layer, it has to receive a 3D tensor of shape (batch_size, time_steps, features), so adding an extra dimension to your tensor before feeding it to the dropout layer is a perfectly legitimate option.
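As a minimal sketch of adding that extra dimension, assuming the original tensor is 2D with shape (batch_size, features); the sizes and the names `x2d`, `x3d`, and `y2d` are made up for illustration:

```python
import tensorflow as tf

# Hypothetical 2D input of shape (batch_size, features); sizes are assumptions.
x2d = tf.random.normal((2, 5))

# Insert a time_steps axis of length 1 -> (batch_size, 1, features).
x3d = tf.expand_dims(x2d, axis=1)

# SpatialDropout1D now accepts the tensor; training=True forces dropout on.
y = tf.keras.layers.SpatialDropout1D(0.5)(x3d, training=True)

# Drop the extra axis again if later layers expect a 2D tensor.
y2d = tf.squeeze(y, axis=1)

print(x3d.shape, y2d.shape)
```

Inside a Keras model, a `tf.keras.layers.Reshape((1, features))` layer placed before the dropout layer should achieve the same thing.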

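Note, though, that in your case you could use either SpatialDropout1D or Dropout. SpatialDropout1D drops whole feature channels across all time steps, so with a single time step there is only one value per channel and both layers mask individual values in the same manner: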

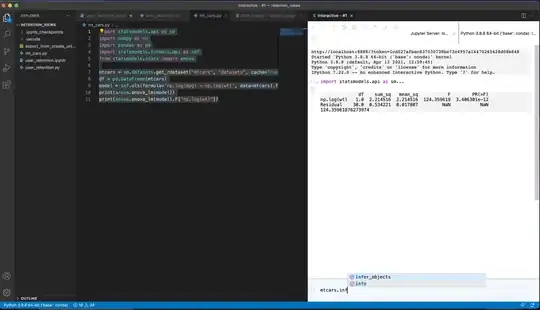

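```python
import tensorflow as tf

samples = 2
timesteps = 1
features = 5

# 3D input of shape (batch_size, time_steps, features)
x = tf.random.normal((samples, timesteps, features))

s = tf.keras.layers.SpatialDropout1D(0.5)
d = tf.keras.layers.Dropout(0.5)

# training=True applies dropout even outside of model.fit()
print(s(x, training=True))
print(d(x, training=True))
```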

```
tf.Tensor(
[[[-0.5976591  1.481788   0.         0.         0.       ]]

 [[ 0.        -4.6607018 -0.         0.7036132  0.       ]]], shape=(2, 1, 5), dtype=float32)
tf.Tensor(
[[[-0.5976591  1.481788   0.5662646  2.8400114  0.9111476]]

 [[ 0.        -0.        -0.         0.7036132  0.       ]]], shape=(2, 1, 5), dtype=float32)
```
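The two layers only start to differ once there is more than one time step. The following sketch, which assumes an all-ones input with 4 time steps (sizes chosen purely for illustration), makes the masks easy to read: SpatialDropout1D zeroes a feature for every time step at once, while Dropout zeroes entries independently.

```python
import tensorflow as tf

# All-ones input so surviving entries show only the 1 / (1 - rate) scaling.
# Shape (batch_size, time_steps, features); the sizes are assumptions.
x = tf.ones((1, 4, 5))

s = tf.keras.layers.SpatialDropout1D(0.5)(x, training=True)
d = tf.keras.layers.Dropout(0.5)(x, training=True)

# Each column (feature) is either all 0.0 or all 2.0 across the 4 time steps.
print(s[0].numpy())

# Zeros are scattered independently over time steps and features.
print(d[0].numpy())
```

The masks are random, so the exact pattern changes from run to run; only the column-wise structure of the first output is guaranteed.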

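I think SpatialDropout1D layers are most suitable after convolutional layers such as Conv1D, where neighbouring time steps within a feature map tend to be strongly correlated.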
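For illustration, a placement after a convolution could look roughly like this. It is only a sketch: the input shape, filter count, dropout rate, and the pooling and output layers are arbitrary assumptions, not a recommended architecture.

```python
import tensorflow as tf

# Hypothetical sequence model: 20 time steps with 8 features each.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(20, 8)),
    tf.keras.layers.Conv1D(16, kernel_size=3, padding="same", activation="relu"),
    # Drops entire feature maps (channels) rather than single activations.
    tf.keras.layers.SpatialDropout1D(0.2),
    tf.keras.layers.GlobalMaxPooling1D(),
    tf.keras.layers.Dense(1),
])

# Forward pass on a random batch of 4 sequences with dropout active.
out = model(tf.random.normal((4, 20, 8)), training=True)
print(out.shape)
```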