We run a small on-premise K8s cluster (based on the RKE stack): 1x etcd/control node and 2x worker nodes. The components are:

- OS: CentOS 7

- Docker version: 19.3.9

- K8s: 1.17.2

Another important fact: we're using a Rook-Ceph storage cluster on both worker nodes (Rook v1.2.4, Ceph 14.2.7).

When one of the OS mounts reaches 90%+ usage (for example /var), K8s reports "Disk Pressure" and disables the node, which is fine. But when this happens, the CPU load starts growing into the dozens (for example 30+ or 40+ on a machine with 4 vCPUs), many of the container processes (children of containerd-shim) go into the zombie (defunct) state, and the whole K8s cluster collapses.
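For anyone who wants to capture the same three symptoms on a node (mount usage, load average, zombie count), here is a minimal Python sketch. It is not part of our setup. The function names `used_percent`, `proc_state`, `count_zombies` and `snapshot` are made up for illustration, and it assumes a Linux host with `/proc` mounted.

```python
import os
import shutil


def used_percent(path):
    """Return the used share of the filesystem holding `path`, in percent.

    Note: this is used/total, so it can differ slightly from the Use%
    column of `df`, which ignores blocks reserved for root.
    """
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


def proc_state(stat_line):
    """Extract the one-letter process state from a /proc/<pid>/stat line.

    The command name is wrapped in parentheses and may itself contain
    spaces or ')', so split after the last ')' instead of on whitespace.
    """
    return stat_line[stat_line.rindex(")") + 1:].split()[0]


def count_zombies(proc_root="/proc"):
    """Count processes whose state is 'Z' (zombie/defunct)."""
    zombies = 0
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # not a PID directory
        try:
            with open(os.path.join(proc_root, entry, "stat")) as f:
                if proc_state(f.read()) == "Z":
                    zombies += 1
        except OSError:
            # The process exited between listdir() and open().
            continue
    return zombies


def snapshot(mount="/var"):
    """Collect mount usage, 1-minute load average and zombie count."""
    load_1m, _, _ = os.getloadavg()
    return {
        "mount": mount,
        "used_percent": round(used_percent(mount), 2),
        "load_1m": load_1m,
        "zombies": count_zombies(),
    }

# Example: print(snapshot("/var"))
```

Running `snapshot()` from cron every minute and appending the result to a file on a different mount would give a timeline to compare against the kubelet log.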

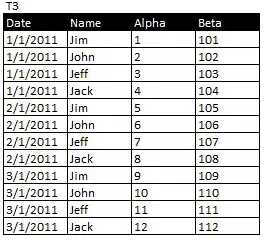

At first we thought this was a Rook-Ceph problem with XFS storage (described at https://github.com/rook/rook/issues/3132#issuecomment-580508760), so we switched to EXT4 (because we cannot upgrade the kernel to 5.6+). But last weekend it happened again, and we are sure that this case is related to the Disk Pressure event. The last contact with the (now) dead node was on 21-01 at 13:50, but the load started growing at 13:07 and quickly went to 30.5.

/var usage went from 89.97% to 90%+ at exactly 13:07 that day.
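The timing above would line up with the kubelet's default hard eviction threshold of `nodefs.available<10%`, assuming our nodes run with that default and that /var holds the kubelet root directory (both are assumptions, not something confirmed from our configuration). The arithmetic as a small Python sketch, with `under_disk_pressure` being a made-up helper name:

```python
# Assumed kubelet default: hard eviction when nodefs.available < 10%.
NODEFS_AVAILABLE_THRESHOLD = 10.0


def under_disk_pressure(used_percent, threshold=NODEFS_AVAILABLE_THRESHOLD):
    """Return True when the available share drops below the threshold."""
    available_percent = 100.0 - used_percent
    return available_percent < threshold


# 89.97% used leaves 10.03% available, which is still above the threshold.
# Anything above 90% used leaves less than 10% available.
```

So a move of a few hundredths of a percent is enough to flip the node condition, which matches how abruptly the event starts.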

Can you point us to what we need to check in the K8s configuration, logs, or anywhere else to find out what is going on? Why does K8s collapse during such a fairly normal event?

(For clarification: we know that we're using quite old versions, but we'll do a full upgrade of the environment within a few weeks.)