I am trying to set up a scalable MySQL database with persistent storage. I thought this was a common setup, but I have not found a clear explanation of it online. I am using minikube for my single-node cluster. I started from the Kubernetes guide on running replicated stateful applications, but it does not really cover creating the persistent volume. I created the ConfigMap as in the guide:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: mysql
      labels:
        app: mysql
    data:
      primary.cnf: |
        # Apply this config only on the primary.
        [mysqld]
        log-bin
      replica.cnf: |
        # Apply this config only on replicas.
        [mysqld]
        super-read-only

And the two services:

    # Headless service for stable DNS entries of StatefulSet members.
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql
      labels:
        app: mysql
    spec:
      ports:
      - name: mysql
        port: 3306
      clusterIP: None
      selector:
        app: mysql
    ---
    # Client service for connecting to any MySQL instance for reads.
    # For writes, you must instead connect to the primary: mysql-0.mysql.
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql-read
      labels:
        app: mysql
    spec:
      ports:
      - name: mysql
        port: 3306
      selector:
        app: mysql

I needed to initialize the schema of my database, so I made this ConfigMap to mount into the StatefulSet:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: mysql-initdb-config
    data:
      initdb.sql: |
        CREATE DATABASE football;
        CREATE TABLE `squadra` (
          `name` varchar(15) PRIMARY KEY NOT NULL
        );
        USE football;
        ...

I created a storageclass and a persistent volume, thanks to this answer.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: local-storage
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv-volume
    spec:
      storageClassName: local-storage
      accessModes:
        - ReadWriteOnce
      capacity:
        storage: 5Gi
      hostPath:
        path: /data/mysql_data/
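One thing that may matter with this storage class: `kubernetes.io/no-provisioner` does not create volumes dynamically, and a PersistentVolume can be bound to only one claim, so every replica that gets its own claim would need its own pre-created volume. The following is only a sketch of generating one manifest per replica. The `pv-volume-<n>` names, the `/data/mysql_data_<n>/` paths, and the function names are hypothetical and not part of my actual setup.

```shell
#!/usr/bin/env bash
# Sketch only: print one hostPath PersistentVolume manifest per replica ordinal.
# The pv-volume-<n> names and /data/mysql_data_<n>/ paths are hypothetical.

# Print a single PersistentVolume manifest for the given ordinal.
make_pv() {
  local ordinal="$1"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-volume-${ordinal}
spec:
  storageClassName: local-storage
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 5Gi
  hostPath:
    path: /data/mysql_data_${ordinal}/
EOF
}

# Print a multi-document YAML stream with one volume per replica.
make_pvs() {
  local replicas="$1"
  local i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "---"
    make_pv "$i"
    i=$((i + 1))
  done
}
```

Assuming the directories exist inside the minikube node, the output could be piped straight into kubectl, for example `make_pvs 2 | kubectl apply -f -`.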

I also made a Secret containing the password for the root user of my database:

    apiVersion: v1
    kind: Secret
    metadata:
      name: mysql-pass-root
    type: kubernetes.io/basic-auth
    stringData:
      username: root
      password: password

My StatefulSet was largely taken from a Stack Overflow answer that I can no longer find. It is basically a modification of the one on the Kubernetes website. What I added is the database schema initialization, the volumeClaimTemplates, and the password from the Secret:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: mysql
    spec:
      selector:
        matchLabels:
          app: mysql
      serviceName: mysql
      replicas: 2
      template:
        metadata:
          labels:
            app: mysql
        spec:
          initContainers:
          - name: init-mysql
            image: mysql:5.7
            command:
            - bash
            - "-c"
            - |
              set -ex
              # Generate mysql server-id from pod ordinal index.
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              echo [mysqld] > /mnt/conf.d/server-id.cnf
              # Add an offset to avoid reserved server-id=0 value.
              echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
              # Copy appropriate conf.d files from config-map to emptyDir.
              if [[ $ordinal -eq 0 ]]; then
                cp /mnt/config-map/primary.cnf /mnt/conf.d/
              else
                cp /mnt/config-map/replica.cnf /mnt/conf.d/
              fi
            volumeMounts:
            - name: conf
              mountPath: /mnt/conf.d
            - name: config-map
              mountPath: /mnt/config-map
          - name: clone-mysql
            image: gcr.io/google-samples/xtrabackup:1.0
            command:
            - bash
            - "-c"
            - |
              set -ex
              # Skip the clone if data already exists.
              [[ -d /var/lib/mysql/mysql ]] && exit 0
              # Skip the clone on master (ordinal index 0).
              [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
              ordinal=${BASH_REMATCH[1]}
              [[ $ordinal -eq 0 ]] && exit 0
              # Clone data from previous peer.
              ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql
              # Prepare the backup.
              xtrabackup --prepare --target-dir=/var/lib/mysql
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
          containers:
          - name: mysql
            image: mysql:5.7
            env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-pass-root
                  key: password
            - name: MYSQL_USER
              value: server
            - name: MYSQL_DATABASE
              value: medlor
            ports:
            - name: mysql
              containerPort: 3306
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
            - name: mysql-initdb
              mountPath: /docker-entrypoint-initdb.d
            resources:
              requests:
                cpu: 500m
                memory: 100Mi
            livenessProbe:
              exec:
                command: ["mysqladmin", "-uroot", "-p$MYSQL_ROOT_PASSWORD", "ping"]
              initialDelaySeconds: 30
              periodSeconds: 10
              timeoutSeconds: 5
            readinessProbe:
              exec:
                # Check we can execute queries over TCP (skip-networking is off).
                command:
                - /bin/sh
                - -ec
                - >-
                  mysql -hlocalhost -uroot -p$MYSQL_ROOT_PASSWORD -e'SELECT 1'
              initialDelaySeconds: 5
              periodSeconds: 2
              timeoutSeconds: 1
          - name: xtrabackup
            image: gcr.io/google-samples/xtrabackup:1.0
            env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-pass-root
                  key: password
            ports:
            - name: xtrabackup
              containerPort: 3307
            command:
            - bash
            - "-c"
            - |
              set -ex
              cd /var/lib/mysql
              # Determine binlog position of cloned data, if any.
              if [[ -f xtrabackup_slave_info ]]; then
                # XtraBackup already generated a partial "CHANGE MASTER TO" query
                # because we're cloning from an existing slave.
                mv xtrabackup_slave_info change_master_to.sql.in
                # Ignore xtrabackup_binlog_info in this case (it's useless).
                rm -f xtrabackup_binlog_info
              elif [[ -f xtrabackup_binlog_info ]]; then
                # We're cloning directly from master. Parse binlog position.
                [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
                rm xtrabackup_binlog_info
                echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                      MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
              fi
              # Check if we need to complete a clone by starting replication.
              if [[ -f change_master_to.sql.in ]]; then
                echo "Waiting for mysqld to be ready (accepting connections)"
                until mysql -h localhost -uroot -p$MYSQL_ROOT_PASSWORD -e "SELECT 1"; do sleep 1; done
                echo "Initializing replication from clone position"
                # In case of container restart, attempt this at-most-once.
                mv change_master_to.sql.in change_master_to.sql.orig
                mysql -h localhost -uroot -p$MYSQL_ROOT_PASSWORD <<EOF
              $(<change_master_to.sql.orig),
                MASTER_HOST='mysql-0.mysql',
                MASTER_USER='root',
                MASTER_PASSWORD='$MYSQL_ROOT_PASSWORD',
                MASTER_CONNECT_RETRY=10;
              START SLAVE USER='root' PASSWORD='$MYSQL_ROOT_PASSWORD';
              EOF
              fi
              # Start a server to send backups when requested by peers.
              exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \
                "xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root --password=$MYSQL_ROOT_PASSWORD"
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
              subPath: mysql
            - name: conf
              mountPath: /etc/mysql/conf.d
            resources:
              requests:
                cpu: 100m
                memory: 500Mi
          volumes:
          - name: conf
            emptyDir: {}
          - name: config-map
            configMap:
              name: mysql
          - name: mysql-initdb
            configMap:
              name: mysql-initdb-config
      volumeClaimTemplates:
      - metadata:
          name: data
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: "local-storage"
          resources:
            requests:
              storage: 1Gi
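To make the role selection in the init containers easier to follow, here is a minimal standalone sketch of how the pod ordinal is read from the hostname and turned into a server-id and a config file choice. It mirrors the regex and the offset of 100 from the manifest above. The function names are hypothetical and exist only for this illustration.

```shell
#!/usr/bin/env bash
# Sketch of the ordinal logic used by the init-mysql container.
# Function names are hypothetical; the regex and the 100 offset come from the manifest.

# Print the ordinal at the end of a StatefulSet pod hostname (mysql-1 gives 1).
# Fails if the hostname does not end in "-<digits>".
pod_ordinal() {
  [[ "$1" =~ -([0-9]+)$ ]] || return 1
  echo "${BASH_REMATCH[1]}"
}

# Print the MySQL server-id for a hostname; the offset avoids the reserved value 0.
server_id() {
  local ordinal
  ordinal="$(pod_ordinal "$1")" || return 1
  echo $((100 + ordinal))
}

# Print which config file the pod would copy into conf.d.
config_for() {
  local ordinal
  ordinal="$(pod_ordinal "$1")" || return 1
  if [[ "$ordinal" -eq 0 ]]; then
    echo primary.cnf
  else
    echo replica.cnf
  fi
}
```

With these definitions, `server_id mysql-1` prints `101` and `config_for mysql-0` prints `primary.cnf`, so only the pod with ordinal 0 is configured as the primary.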

When I start up minikube, I run the following commands:

docker exec minikube mkdir data/mysql_data (to create the folder where MySQL saves its data inside the minikube container)

minikube mount local_path_to_a_folder:/data/mysql_data (to keep the MySQL data on my physical storage too)

kubectl apply -f pv-volume.yaml (the volume I showed before)

kubectl apply -f database\mysql\mysql-configmap.yaml (the configmap from the guide)

kubectl apply -f database\mysql\mysql-initdb-config.yaml (my configmap with the db schema)

kubectl apply -f database\mysql\mysql-secret.yaml (the secret containing the db password)

kubectl apply -f database\mysql\mysql-services.yaml (the two services)
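The apply steps above could be wrapped in a small script so the order is always the same. This is only a sketch: it covers just the five manifests listed above, it assumes bash-style forward-slash paths instead of the backslash paths I typed, and the `KUBECTL` variable and the `apply_manifests` name are hypothetical additions that make a dry run possible.

```shell
#!/usr/bin/env bash
# Sketch only: apply the manifests listed above in a fixed order.
# KUBECTL is a hypothetical override so the loop can be dry-run with KUBECTL=echo.
KUBECTL="${KUBECTL:-kubectl}"

apply_manifests() {
  local manifest
  for manifest in \
    pv-volume.yaml \
    database/mysql/mysql-configmap.yaml \
    database/mysql/mysql-initdb-config.yaml \
    database/mysql/mysql-secret.yaml \
    database/mysql/mysql-services.yaml
  do
    # Stop at the first manifest that fails to apply.
    "$KUBECTL" apply -f "$manifest" || return 1
  done
}
```

Setting `KUBECTL=echo` before calling `apply_manifests` prints the commands instead of running them, which is a quick way to check the order.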

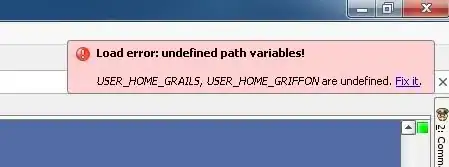

Now, when I run all these commands, my MySQL pod fails, and this is what I can see from the dashboard:

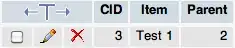

Although in the persistent volumes section I can see my volume has been claimed:

I must have understood something wrong and there must be a logic error in all of this, but I can't figure out what it is. Any help would be extremely appreciated.