I try to achieve performance improvement and made some good experience with SIMD. So far I was using OMP and like to improve my skills further using intrinsics.

In the following scenario, I failed to improve (even vectorize) due to a data dependency of a last_value required for a test of element n+1.

Environment is x64 having AVX2, so want to find a way to vectorize and SIMDfy a function like this.

inline static size_t get_indices_branched(size_t* _vResultIndices, size_t _size, const int8_t* _data) {

size_t index = 0;

int8_t last_value = 0;

for (size_t i = 0; i < _size; ++i) {

if ((_data[i] != 0) && (_data[i] != last_value)) {

// add to _vResultIndices

_vResultIndices[index] = i;

last_value = _data[i];

++index;

}

}

return index;

}

Input is an array of signed 1-byte values. Each element is one of <0,1,-1>. Output is an array of indices to input values (or pointers), signalling a change to 1 or -1.

example in/output

in: { 0,0,1,0,1,1,-1,1, 0,-1,-1,1,0,0,1,1, 1,0,-1,0,0,0,0,0, 0,1,1,1,-1,-1,0,0, ... }

out { 2,6,7,9,11,18,25,28, ... }

My first attempt was, to play with various branchless versions and see, if auto vectorization or OMP were able to translate it into a SIMDish code, by comparing assembly outputs.

example attempt

int8_t* rgLast = (int8_t*)alloca((_size + 1) * sizeof(int8_t));

rgLast[0] = 0;

#pragma omp simd safelen(1)

for (size_t i = 0; i < _size; ++i) {

bool b = (_data[i] != 0) & (_data[i] != rgLast[i]);

_vResultIndices[index] = i;

rgLast[i + 1] = (b * _data[i]) + (!b * rgLast[i]);

index += b;

}

Since no experiment resulted in SIMD output, I started to experiment with intrinsics with the goal to translate the conditional part into a mask.

For the != 0 part that's pretty straight forward:

__m256i* vData = (__m256i*)(_data);

__m256i vHasSignal = _mm256_cmpeq_epi8(vData[i], _mm256_set1_epi8(0)); // elmiminate 0's

The conditional aspect to test against "last flip" I found not yet a way.

To solve the following output packing aspect I assume AVX2 what is the most efficient way to pack left based on a mask? could work.

Update 1

Digging deeper into this topic reveals, it's beneficial to separate the 1/-1's and get rid of the 0's.

Luckily in my case, I can directly grab this from pre-processing and skip processing to <1,0,-1> using _mm256_xor_si256's for example, having 2 input vectors separated as gt0 (all 1's) and lt0 (all -1's). This also allows 4 times tighter packing of data.

I might want to end up with a process like this

The challenge now is how to create the transition mask based on gt0 and lt0 masks.

The challenge now is how to create the transition mask based on gt0 and lt0 masks.

Update 2

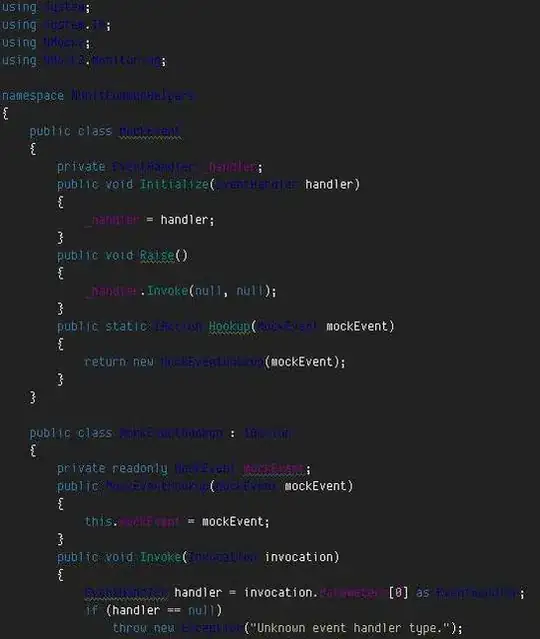

Appearently an approach of splitting 1's and -1's into 2 streams (see in answer how), introduces a dependency while acessing elements for scanning alternating: How to efficiently scan 2 bit masks alternating each iteration

Creation of a transition mask as @aqrit worked out using

transition mask = ((~lt + gt) & lt) | ((~gt + lt) & gt) is possible. Eventhough this adds quite some instructions, it apears to be a beneficial tradeoff for data dependency elimination. I assume the gain grows the larger a register is (might be chip dependent).

Update 3

By vectorizing transition mask = ((~lt + gt) & lt) | ((~gt + lt) & gt)

I could get this output compiled

vmovdqu ymm5,ymmword ptr transition_mask[rax]

vmovdqu ymm4,ymm5

vpandn ymm0,ymm5,ymm6

vpaddb ymm1,ymm0,ymm5

vpand ymm3,ymm1,ymm5

vpandn ymm2,ymm5,ymm6

vpaddb ymm0,ymm2,ymm5

vpand ymm1,ymm0,ymm5

vpor ymm3,ymm1,ymm3

vmovdqu ymmword ptr transition_mask[rax],ymm3

On first look it appears efficient compared to potential condition related pitfalls of post-processing (vertical scan + append to output), although it appears to be right and logical to deal with 2 streams instead of 1.

This lacks the ability to generate the initial state per cycle (transition from 0 to either 1 or -1).

Not sure if there is a way to enhance the transition_mask generation "bit twiddling", or use auto initial _tzcnt_u32(mask0) > _tzcnt_u32(mask1) as Soons uses here: https://stackoverflow.com/a/70890642/18030502 which appears to include a branch.

Conclusion

The approach @aqrit shared using an improved bit-twiddling solution per chunk load to find transitions, turns out to be the most runtime performant. The hot inner loop is just 9 asm instructions long (per 2 found items for comparison with other approaches) using tzcnt and blsr like this

tzcnt rax,rcx

mov qword ptr [rbx+rdx*8],rax

blsr rcx,rcx

tzcnt rax,rcx

mov qword ptr [rbx+rdx*8+8],rax

blsr rcx,rcx

add rdx,2

cmp rdx,r8

jl main+2580h (...)