Besides the solutions given in the comments, you can profile your kernels with Nsight Compute: collect a report with its CLI (`ncu`) and then inspect the results in its GUI. For example:

    ncu --export output --force-overwrite --target-processes application-only \
        --replay-mode kernel --kernel-regex-base function --launch-skip-before-match 0 \
        --section InstructionStats \
        --section Occupancy \
        --section SchedulerStats \
        --section SourceCounters \
        --section WarpStateStats \
        --sampling-interval auto \
        --sampling-max-passes 5 \
        --profile-from-start 1 --cache-control all --clock-control base \
        --apply-rules yes --import-source no --check-exit-code yes \
        your-application [arguments]
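If you want a quick look at the collected data before opening the GUI, the report can also be printed in the terminal. The following is only a minimal sketch: it assumes the report was written as `output.ncu-rep` (recent `ncu` versions append that extension to the `--export` name; older versions may use a different one), and the dry-run fallback is just an illustrative guard, not part of Nsight Compute.

```shell
#!/bin/sh
# Assumed report name: the --export value plus the extension added by recent ncu versions.
report="output.ncu-rep"

if command -v ncu >/dev/null 2>&1 && [ -f "$report" ]; then
    # Print the per-kernel details page of the saved report in the terminal.
    ncu --import "$report" --page details
else
    # ncu or the report is missing: only show the command that would be run.
    echo "would run: ncu --import $report --page details"
fi
```

The same report file is what you open in the GUI, so nothing has to be profiled again.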

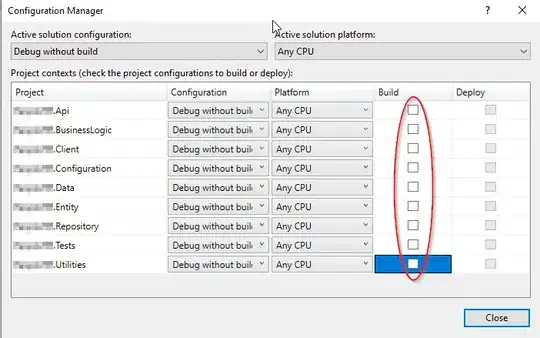

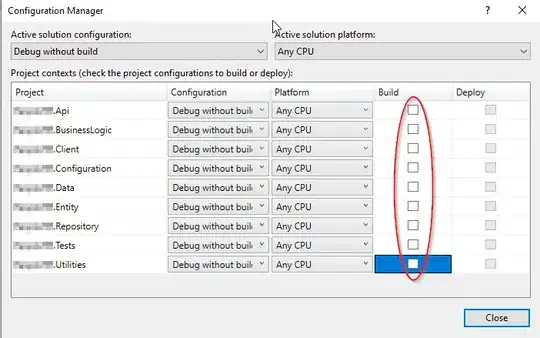

Then, in the GUI, you can see some useful information. For example, the Source Counters section shows something like this: