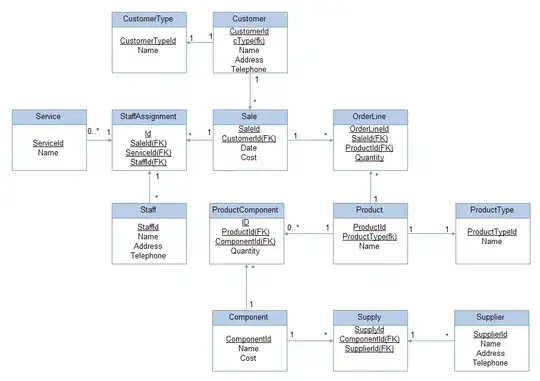

As in this related question, I am trying to render complex shapes by ray tracing inside a cube: that is, 12 triangles form a bounding box, and each fragment of that box casts a ray to render the given shape.

For this example, I am using the simplest shape: a sphere.

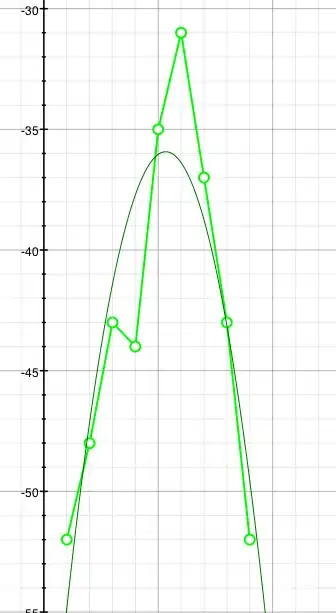

The problem is that when the cube is rotated, the sphere is distorted, and the distortion changes with the angle of the triangles:

What I have attempted so far:

I tried doing the ray tracing in world space, and also in view space as suggested in the related question.
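For reference, a minimal sketch of what a view-space variant of the ray setup might look like. It assumes the same `Vertex` interface block and `view` uniform as the shaders below, the sphere centered at the world origin, and a radius of 0.3; the local names are illustrative only.

```glsl
#version 420 core

uniform mat4 view;

in Vertex
{
    vec4 worldCoord;
    vec4 worldNormal;
} v;

out vec4 out_Color;

void main()
{
    // In view space the camera sits at the origin by definition.
    vec3 rayOrig = vec3(0.0);

    // Move the interpolated world-space fragment position into view space.
    vec3 fragView = (view * v.worldCoord).xyz;
    vec3 rayDir = normalize(fragView - rayOrig);

    // Sphere center (world origin) expressed in view space; radius is an assumption.
    vec3 sphereCenter = (view * vec4(0.0, 0.0, 0.0, 1.0)).xyz;
    float radius = 0.3;

    // Same geometric test as sphereIntersection, reduced to hit / no hit.
    vec3 L = sphereCenter - rayOrig;
    float tca = dot(L, rayDir);
    float d2 = dot(L, L) - tca * tca;
    if (d2 > radius * radius)
    {
        discard;
    }

    // The far intersection must lie in front of the ray origin.
    float thc = sqrt(radius * radius - d2);
    if (tca + thc <= 0.0)
    {
        discard;
    }

    out_Color = vec4(1.0);
}
```

With this setup the ray origin does not depend on any camera uniform, which makes it easier to tell whether the distortion comes from the origin or from the interpolated coordinate.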

I checked that the world coordinate of the fragment (worldCoord) is correct by reconstructing it from gl_FragCoord with an inverse projection, which gives the same result.
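A check of this kind could be sketched as follows. The uniforms `invViewProj` and `viewportSize` and the tolerance are hypothetical and not part of the shaders in this question; the sketch also assumes a viewport starting at (0, 0) and the default depth range of [0, 1].

```glsl
#version 420 core

// Hypothetical uniforms, supplied by the application:
uniform mat4 invViewProj;   // inverse(projection * view)
uniform vec2 viewportSize;  // viewport width and height in pixels

in Vertex
{
    vec4 worldCoord;
    vec4 worldNormal;
} v;

out vec4 out_Color;

vec3 worldFromFragCoord(vec4 fragCoord)
{
    // Window coordinates -> normalized device coordinates in [-1, 1].
    vec3 ndc = vec3(fragCoord.xy / viewportSize, fragCoord.z) * 2.0 - 1.0;

    // Undo projection and view, then apply the perspective divide.
    vec4 world = invViewProj * vec4(ndc, 1.0);
    return world.xyz / world.w;
}

void main()
{
    vec3 reconstructed = worldFromFragCoord(gl_FragCoord);
    float err = length(reconstructed - v.worldCoord.xyz);

    // Green where both positions agree within an arbitrary tolerance, red otherwise.
    out_Color = (err < 1e-3) ? vec4(0.0, 1.0, 0.0, 1.0) : vec4(1.0, 0.0, 0.0, 1.0);
}
```

If the cube renders entirely green, the interpolated position and the one derived from gl_FragCoord match, which points away from the interpolation itself.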

I switched to orthographic projection, where the distortion is reversed:
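One thing to keep in mind for the orthographic case: the rays are parallel, so each fragment needs its own ray origin and a shared direction, rather than a shared origin at the camera. A minimal sketch, assuming a conventional OpenGL view matrix (camera looking down -Z in view space), front faces being rendered, and the sphere lying fully inside the cube:

```glsl
#version 420 core

uniform mat4 view;

in Vertex
{
    vec4 worldCoord;
    vec4 worldNormal;
} v;

out vec4 out_Color;

void main()
{
    // Shared ray direction: the camera's forward axis taken to world space
    // (w = 0 so that only the rotation part of the matrix applies).
    vec3 rayDir = normalize((inverse(view) * vec4(0.0, 0.0, -1.0, 0.0)).xyz);

    // Per-fragment origin: the ray simply starts on the cube face.
    vec3 rayOrig = v.worldCoord.xyz;

    // Sphere at the world origin; radius is an assumption.
    vec3 sphereCenter = vec3(0.0);
    float radius = 0.3;

    vec3 L = sphereCenter - rayOrig;
    float tca = dot(L, rayDir);
    float d2 = dot(L, L) - tca * tca;
    if (d2 > radius * radius)
    {
        discard;
    }

    // The far intersection must lie in front of the ray origin.
    float thc = sqrt(radius * radius - d2);
    if (tca + thc <= 0.0)
    {
        discard;
    }

    out_Color = vec4(1.0);
}
```

Reusing the perspective-style setup (one origin, diverging directions) under an orthographic projection would itself introduce a mismatch, independent of any interpolation issue.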

My conclusion is that, as described in the related question, the interpolation of the coordinates combined with the projection is the source of the problem.

I could project the cube onto a plane perpendicular to the camera direction, but I would like to get to the bottom of the problem.

Related code:

Vertex shader:

    #version 420 core

    uniform mat4 model;
    uniform mat4 view;
    uniform mat4 projection;

    in vec3 in_Position;
    in vec3 in_Normal;

    out Vertex
    {
        vec4 worldCoord;
        vec4 worldNormal;
    } v;

    void main(void)
    {
        mat4 mv = view * model;

        // Position
        v.worldCoord = model * vec4(in_Position, 1.0);
        gl_Position = projection * mv * vec4(in_Position, 1.0);

        // Normal
        v.worldNormal = normalize(vec4(in_Normal, 0.0));
    }

Fragment shader:

    #version 420 core

    uniform mat4 view;
    uniform vec3 cameraPosView;

    in Vertex
    {
        vec4 worldCoord;
        vec4 worldNormal;
    } v;

    out vec4 out_Color;

    bool sphereIntersection(vec4 rayOrig, vec4 rayDirNorm, vec4 spherePos, float radius, out float t_out)
    {
        float r2 = radius * radius;
        vec4 L = spherePos - rayOrig;
        float tca = dot(L, rayDirNorm);
        float d2 = dot(L, L) - tca * tca;

        if (d2 > r2)
        {
            return false;
        }

        float thc = sqrt(r2 - d2);
        float t0 = tca - thc;
        float t1 = tca + thc;

        if (t0 > 0)
        {
            t_out = t0;
            return true;
        }

        if (t1 > 0)
        {
            t_out = t1;
            return true;
        }

        return false;
    }

    void main()
    {
        vec3 color = vec3(1);
        vec4 spherePos = vec4(0.0, 0.0, 0.0, 1.0);
        float radius = 1.0;
        float t_out = 0.0;

        vec4 cord = v.worldCoord;
        vec4 rayOrig = (inverse(view) * vec4(-cameraPosView, 1.0));
        vec4 rayDir = normalize(cord - rayOrig);

        if (sphereIntersection(rayOrig, rayDir, spherePos, 0.3, t_out))
        {
            out_Color = vec4(1.0);
        }
        else
        {
            discard;
        }
    }