I'm using AWS CloudWatch PutLogEvents to store client-side logs in CloudWatch, following this reference:

https://docs.aws.amazon.com/AmazonCloudWatchLogs/latest/APIReference/API_PutLogEvents.html

Below is the sample implementation I'm using for PutLogEvents. A for-each loop builds the list of InputLogEvents that is pushed to CloudWatch.

After continuously pushing logs for about one or two hours, the service crashes with an out-of-memory error. When I run the application locally, it runs for more than one or two hours without crashing, although I haven't run it continuously there to find out when it would crash. I'm wondering whether there is a memory leak in my AWS CloudWatch implementation, because before this change the service ran for months without any heap issues.
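
To compare the local and deployed runs, the figure I would watch is used heap (total minus free), since a value that keeps climbing across requests is what a leak should look like. This is only a minimal sketch of such a helper; the class and method names are hypothetical and not part of my service.

```java
// Hypothetical helper, not part of the service: reports used heap via java.lang.Runtime.
class HeapSketch {

    // Used heap = heap currently reserved by the JVM minus the free part of it.
    static long usedHeapBytes() {
        Runtime rt = Runtime.getRuntime();
        return rt.totalMemory() - rt.freeMemory();
    }

    // One-line summary in megabytes, suitable for a log statement.
    static String summary() {
        long mb = 1024L * 1024L;
        long maxMb = Runtime.getRuntime().maxMemory() / mb;
        return String.format("used=%d MB, max=%d MB", usedHeapBytes() / mb, maxMb);
    }

    public static void main(String[] args) {
        System.out.println(summary());
    }
}
```

Logging this once per request (or on a timer) should make it easier to see whether the heap grows steadily or only spikes.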

Here is my service implementation; the controller calls this putLogEvents method. The only area I suspect is the for-each loop over cwLogDtos, where I assign inputLogEvent to null so that it should be garbage collected. However, I have also tested this without sending the list of DTOs, and I still get the same OOM error.
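
To make the assumption about nulling concrete, here is a minimal standalone sketch (hypothetical class and names, with plain strings standing in for InputLogEvent). As far as I can tell, setting the loop variable to null only clears the local reference; the object stays reachable through the list, so that assignment by itself probably neither causes nor prevents a leak.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the loop in putCWLogEvents; strings replace InputLogEvent.
class NullingSketch {

    static List<String> buildEvents(int count) {
        List<String> events = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            String event = "event-" + i;
            events.add(event);
            event = null; // clears only the local variable; the list still references the object
        }
        return events;
    }

    public static void main(String[] args) {
        List<String> events = buildEvents(3);
        System.out.println(events); // prints [event-0, event-1, event-2]
    }
}
```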

    @Override
    public TransportDto putLogEvents(List<CWLogDto> cwLogDtos, UserType userType) throws Exception {
        TransportDto transportDto = new TransportDto();
        CloudWatchLogsClient logsClient = CloudWatchLogsClient.builder().region(Region.of(region))
                .build();
        PutLogEventsResponse putLogEventsResponse = putCWLogEvents(logsClient, userType, cwLogDtos);
        logsClient.close();
        transportDto.setResponse(putLogEventsResponse.sdkHttpResponse());
        return transportDto;
    }

    private PutLogEventsResponse putCWLogEvents(CloudWatchLogsClient logsClient, UserType userType, List<CWLogDto> cwLogDtos) throws Exception {
        DateTimeFormatter formatter = DateTimeFormatter.ofPattern(logStreamPattern);
        String streamName = LocalDateTime.now().format(formatter);
        String logGroupName = logGroupOne;
        if (userType.equals(UserType.TWO))
            logGroupName = logGroupTwo;

        log.info("Total Memory before (in bytes): {}", Runtime.getRuntime().totalMemory());
        log.info("Free Memory before (in bytes): {}", Runtime.getRuntime().freeMemory());
        log.info("Max Memory before (in bytes): {}", Runtime.getRuntime().maxMemory());

        DescribeLogStreamsRequest logStreamRequest = DescribeLogStreamsRequest.builder()
                .logGroupName(logGroupName)
                .logStreamNamePrefix(streamName)
                .build();
        DescribeLogStreamsResponse describeLogStreamsResponse = logsClient.describeLogStreams(logStreamRequest);

        // Assume that a single stream is returned, since a specific stream name was
        // specified in the previous request. If not, create a new stream.
        String sequenceToken = null;
        if (!describeLogStreamsResponse.logStreams().isEmpty()) {
            sequenceToken = describeLogStreamsResponse.logStreams().get(0).uploadSequenceToken();
            describeLogStreamsResponse = null;
        }
        else {
            CreateLogStreamRequest request = CreateLogStreamRequest.builder()
                    .logGroupName(logGroupName)
                    .logStreamName(streamName)
                    .build();
            logsClient.createLogStream(request);
            request = null;
        }

        // Build an input log message to put to CloudWatch.
        List<InputLogEvent> inputLogEventList = new ArrayList<>();
        for (CWLogDto cwLogDto : cwLogDtos) {
            InputLogEvent inputLogEvent = InputLogEvent.builder()
                    .message(new ObjectMapper().writeValueAsString(cwLogDto))
                    .timestamp(System.currentTimeMillis())
                    .build();
            inputLogEventList.add(inputLogEvent);
            inputLogEvent = null;
        }

        log.info("Total Memory after (in bytes): {}", Runtime.getRuntime().totalMemory());
        log.info("Free Memory after (in bytes): {}", Runtime.getRuntime().freeMemory());
        log.info("Max Memory after (in bytes): {}", Runtime.getRuntime().maxMemory());

        // Specify the request parameters.
        // Sequence token is required so that the log can be written to the
        // latest location in the stream.
        PutLogEventsRequest putLogEventsRequest = PutLogEventsRequest.builder()
                .logEvents(inputLogEventList)
                .logGroupName(logGroupName)
                .logStreamName(streamName)
                .sequenceToken(sequenceToken)
                .build();
        inputLogEventList = null;
        logStreamRequest = null;
        return logsClient.putLogEvents(putLogEventsRequest);
    }
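
One thing I noticed while re-reading the code above: logsClient.close() is only reached when putCWLogEvents returns normally, so any exception would skip it and leave that client open. Below is a minimal sketch of how try-with-resources could guarantee the close. FakeClient is a hypothetical stand-in (not the AWS SDK class), used only so the example runs on its own. CloudWatchLogsClient is AutoCloseable in SDK v2, so I assume the same pattern applies, but I have not confirmed that this is what causes my OOM.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CloseSketch {

    // Hypothetical stand-in for a client that holds resources until it is closed.
    static class FakeClient implements AutoCloseable {
        static final AtomicInteger OPEN = new AtomicInteger();

        FakeClient() { OPEN.incrementAndGet(); }

        String put(boolean fail) {
            if (fail) throw new IllegalStateException("simulated failure");
            return "ok";
        }

        @Override
        public void close() { OPEN.decrementAndGet(); }
    }

    // Manual close, as in the code above: skipped when put() throws.
    static String manualClose(boolean fail) {
        FakeClient client = new FakeClient();
        String result = client.put(fail);
        client.close();
        return result;
    }

    // try-with-resources: close() runs on both the normal and the exceptional path.
    static String autoClose(boolean fail) {
        try (FakeClient client = new FakeClient()) {
            return client.put(fail);
        }
    }

    public static void main(String[] args) {
        try { manualClose(true); } catch (IllegalStateException ignored) { }
        System.out.println("open after manual close: " + FakeClient.OPEN.get());

        FakeClient.OPEN.set(0);
        try { autoClose(true); } catch (IllegalStateException ignored) { }
        System.out.println("open after try-with-resources: " + FakeClient.OPEN.get());
    }
}
```

In this sketch the manual variant leaves one client open after the simulated failure, while the try-with-resources variant leaves none.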

CWLogDto

    @Data
    @JsonInclude(JsonInclude.Include.NON_NULL)
    public class CWLogDto {
        private Long userId;
        private UserType userType;
        private LocalDateTime timeStamp;
        private Integer logLevel;
        private String logType;
        private String source;
        private String message;
    }

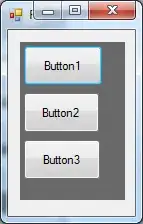

Heap dump summary

Any help will be greatly appreciated.