I use Python 3 to do some encrypted calculation with Microsoft SEAL and am looking for a performance improvement. My approach is:

- create a shared memory to hold the plaintext data (Use numpy array in shared memory for multiprocessing)

- start multiple processes with multiprocessing.Process (there is a param controlling the number of processes, thus limiting the cpu usage)

- processes read from shared memory and do some encrypted calculation

- wait for the calculation to end and join the processes
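The steps above can be sketched roughly as follows. This is a minimal, dependency-free illustration and not the actual program: a plain `memoryview` stands in for the numpy array, a sum of squares stands in for the encrypted calculation, and the names `chunk_bounds`, `worker`, and `run_workers` are hypothetical. It also assumes Linux, since it asks for the `fork` start method explicitly.

```python
import multiprocessing as mp
from multiprocessing import shared_memory

ITEM_SIZE = 8  # bytes per float64 value


def chunk_bounds(n_items, n_procs):
    """Split range(n_items) into n_procs contiguous (start, stop) pairs."""
    base, extra = divmod(n_items, n_procs)
    bounds, start = [], 0
    for rank in range(n_procs):
        stop = start + base + (1 if rank < extra else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


def worker(shm_name, n_items, start, stop, result_queue):
    """Attach to the shared block, process one slice, and report the result."""
    shm = shared_memory.SharedMemory(name=shm_name)  # attach by name, no copy
    view = shm.buf[: n_items * ITEM_SIZE].cast("d")  # bytes viewed as float64
    partial = 0.0
    for i in range(start, stop):
        partial += view[i] * view[i]  # placeholder for the encrypted calculation
    view.release()  # views must be released before the mapping is closed
    shm.close()
    result_queue.put(partial)


def run_workers(values, n_procs):
    """Copy values into shared memory, fan out n_procs workers, sum the results."""
    ctx = mp.get_context("fork")  # assumption: Linux, where fork is available
    n_items = len(values)
    shm = shared_memory.SharedMemory(create=True, size=n_items * ITEM_SIZE)
    try:
        view = shm.buf[: n_items * ITEM_SIZE].cast("d")
        for i, value in enumerate(values):
            view[i] = value
        view.release()
        queue = ctx.Queue()
        procs = [
            ctx.Process(target=worker, args=(shm.name, n_items, start, stop, queue))
            for start, stop in chunk_bounds(n_items, n_procs)
        ]
        for proc in procs:
            proc.start()
        total = sum(queue.get() for _ in procs)  # drain the queue before joining
        for proc in procs:
            proc.join()
    finally:
        shm.close()
        shm.unlink()  # only the creating process removes the block
    return total
```

For example, `run_workers([1.0, 2.0, 3.0, 4.0], 2)` starts two workers, each reading half of the shared block. The `n_procs` argument plays the role of the parameter that limits the number of processes.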

I run this program on an x86 Linux server with 32 CPUs and 64 GB of RAM (32U64G). The CPU model is Intel(R) Xeon(R) Gold 6161 CPU @ 2.20GHz.

I notice that doubling the number of processes improves the time cost by only about 20%. I've tried three process counts:

| process nums | 7 | 13 | 27 |
| --- | --- | --- | --- |
| time ratio | 0.8 | 1 | 1.2 |

Why is this improvement disproportionate to the resources I use (CPU and memory)? Conceptual knowledge and specific Linux command lines are both welcome. Thanks.

FYI:

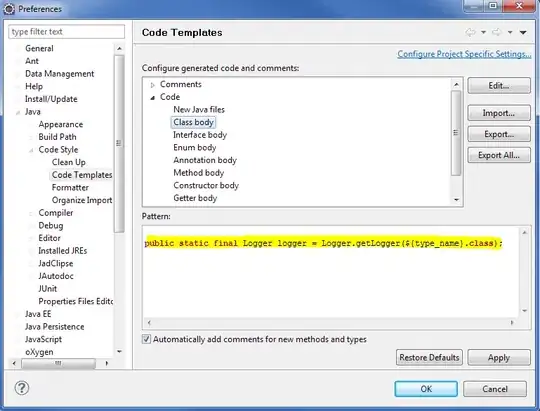

The code of my subprocesses looks like this:

    def sub_process_main(encrypted_bytes, plaintext_array, result_queue):
        # init
        # status_sign
        while shared_int > 0:
            # seal load and some other calculation
            encrypted_matrix_list = seal.Ciphertext.load(encrypted_bytes)
            shared_plaintext_matrix = seal.Encoder.encode(plaintext_array)
            # ... do something
            for some loop:
                # time only the multiply_plain loop
                time1 = time.time()
                res = []
                for i in range(len(encrypted_matrix_list)):
                    enc = seal.evaluator.multiply_plain(encrypted_matrix_list[i], shared_plaintext_matrix[i])
                    res.append(enc)
                time2 = time.time()
                print(f'time usage: {time2 - time1}')
            # ... do something
            result_queue.put(final_result)

I print the time for every part of my code. Here is the time cost of the timed loop above:

| process nums | 13 | 27 |
| --- | --- | --- |
| occurrence | 1791 | 864 |
| total time (s) | 1698.2140 | 1162.8330 |
| average (s) | 0.9482 | 1.3459 |
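To put a number on how disproportionate this is, the table can be reduced to a speedup and a parallel efficiency. The sketch below assumes that the occurrence and total time rows are per-process figures for the same overall workload (13 × 1791 and 27 × 864 are nearly equal, which is consistent with that reading). The function name `scaling_summary` is only illustrative.

```python
def scaling_summary(procs_a, total_a, calls_a, procs_b, total_b, calls_b):
    """Compare two runs: return (speedup, parallel efficiency, per-call slowdown)."""
    speedup = total_a / total_b              # how much faster run B finished this part
    ideal = procs_b / procs_a                # speedup expected from perfect scaling
    efficiency = speedup / ideal             # fraction of the ideal actually achieved
    per_call_slowdown = (total_b / calls_b) / (total_a / calls_a)
    return speedup, efficiency, per_call_slowdown


speedup, efficiency, slowdown = scaling_summary(
    13, 1698.2140, 1791,   # 13 processes: total time (s), occurrences
    27, 1162.8330, 864,    # 27 processes: total time (s), occurrences
)
print(f"speedup {speedup:.2f}x, efficiency {efficiency:.0%}, "
      f"per-call slowdown {slowdown:.2f}x")
# prints: speedup 1.46x, efficiency 70%, per-call slowdown 1.42x
```

Under that assumption, going from 13 to 27 processes (about 2.08 times as many) makes this part about 1.46 times faster, while each individual `multiply_plain` loop takes about 1.42 times as long.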

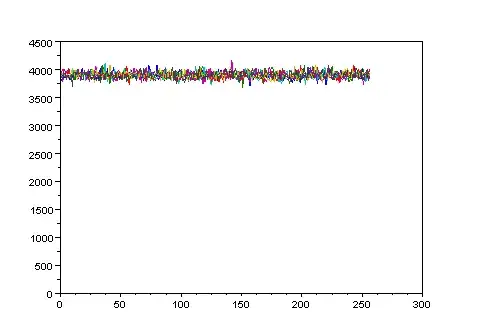

I've monitored some metrics but I don't know if there are any abnormal ones.

13 cores:

top

pidstat

vmstat

27 cores:

top (Why is this using all cores rather than exactly 27 cores? Does it have anything to do with Hyper-Threading?)

pidstat

vmstat
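On the question of why `top` shows load on all cores: without pinning, the Linux scheduler is free to move the 27 processes across every logical CPU, so activity shows up everywhere over time. One way to check this and the Hyper-Threading layout is sketched below. It uses only the Linux-specific `os.sched_getaffinity` and `os.sched_setaffinity` calls and the sysfs topology file; the helper names are hypothetical, and whether pinning helps here is an assumption that would need measuring.

```python
import os


def allowed_cpus():
    """Logical CPUs the calling process may currently be scheduled on (Linux only)."""
    return sorted(os.sched_getaffinity(0))


def pin_to_cpu(cpu):
    """Restrict the calling process to one logical CPU and return the old mask."""
    previous = os.sched_getaffinity(0)
    os.sched_setaffinity(0, {cpu})
    return previous


def thread_siblings(cpu):
    """Logical CPUs sharing a physical core with `cpu`, or None if sysfs lacks it."""
    path = f"/sys/devices/system/cpu/cpu{cpu}/topology/thread_siblings_list"
    try:
        with open(path) as handle:
            return handle.read().strip()  # a list such as "0,16" means two siblings
    except OSError:
        return None
```

Each worker could call `pin_to_cpu` with a distinct entry of `allowed_cpus()` at startup. If `thread_siblings` reports two logical CPUs per entry, then 27 processes on 32 logical CPUs necessarily share physical cores, which would be consistent with each call getting slower.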