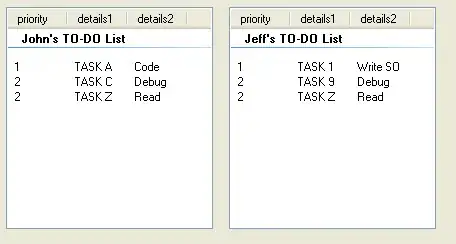

I am having the same problem as this post. I followed @B.M.'s code. However, I am not able to figure out how to fit two gaussian distributions in my data. When I hist-plot it and superimpose it with the KDE, I obtain the second peak like this:

Second peak detected by the slight bump at ~0.07 units

Here are my efforts so far:

from pylab import *

from scipy.optimize import curve_fit

#data=concatenate((normal(1,.2,5000),normal(2,.2,2500)))

data = pd.read_csv('gmm_outdata_ngc2173.csv')

gmm_3 = data['col3']

y,x,_=hist(gmm_3,22,alpha=.3,label='data')

x=(x[1:]+x[:-1])/2 # for len(x)==len(y)

def gauss(x,mu,sigma,A):

return A*exp(-(x-mu)**2/2/sigma**2)

def bimodal(x,mu1,sigma1,A1,mu2,sigma2,A2):

return gauss(x,mu1,sigma1,A1)+gauss(x,mu2,sigma2,A2)

expected=(-0.15,.02,22,0.15,.02,22)

params,cov=curve_fit(bimodal,x,y,expected)

sigma=sqrt(diag(cov))

plot(x,bimodal(x,*params),color='red',lw=3,label='model')

legend()

print(params,'\n',sigma)

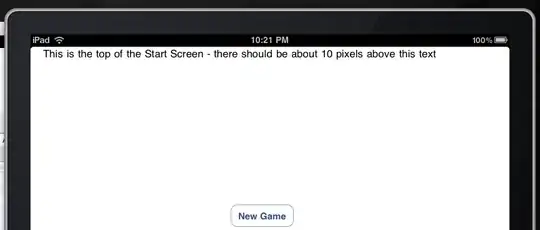

Here is the output plot:

Output plot of fitting two Gaussian Distributions within the same dataset

Here's the behaviour I'm expecting, a bimodal behaviour from emcee and mpfit implementation.

Plot with emcee and mpfit

Any idea why that might be happening? Thanks in advance.

Edit:

Based on Mr. T's helpful suggestions, I have the following result:

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from scipy.optimize import curve_fit

np.random.seed(123)

#data=np.concatenate((np.random.normal(1, .2, 5000), np.random.normal(1.6, .3, 2500)))

data = pd.read_csv('gmm_outdata_ngc2173.csv')

gmm_3 = data['col3']

y,x,_=plt.hist(gmm_3, 22, alpha=.3, label='data')

x=(x[1:]+x[:-1])/2 # for len(x)==len(y)

#%%

def gauss(x, mu, sigma, A):

return A*np.exp(-(x-mu)**2/2/sigma**2)

def bimodal(x, mu1, sigma1, A1, mu2, sigma2, A2):

return gauss(x,mu1,sigma1,A1)+gauss(x,mu2,sigma2,A2)

#expected = (1, .2, 250, 2, .2, 125)

expected=(-0.15,.02,22,0.15,.02,22)

params, cov = curve_fit(bimodal, x, y, expected)

sigma=np.sqrt(np.diag(cov))

x_fit = np.linspace(x.min(), x.max(), 500)

plt.plot(x_fit, bimodal(x_fit, *params), color='red', lw=3, label='model')

plt.plot(x_fit, gauss(x_fit, *params[:3]), color='red', lw=1, ls="--", label='distribution 1')

plt.plot(x_fit, gauss(x_fit, *params[3:]), color='red', lw=1, ls=":", label='distribution 2')

plt.legend()

#print(pd.DataFrame(data={'params': params, 'sigma': sigma}, index=bimodal.__code__.co_varnames[1:]))

plt.show()

When I try to change "*params[3:]", I get an error gauss() missing 1 required positional argument: 'A'. Mr. T's code's output. Edit 2:

I am uploading the data I am working on. Please have a look here.