I need to use htons() in my code to convert a little-endian u_short to network (big-endian) byte order. I have this code:

    int PacketInHandshake::serialize(SOCKET connectSocket, BYTE* outBuffer, ULONG outBufferLength) {
        memset(outBuffer, 0, outBufferLength);

        const int sizeOfShort = sizeof(u_short);
        u_short userNameLength = (u_short)strlen(userName);
        u_short osVersionLength = (u_short)strlen(osVersion);
        int dataLength = 1 + (sizeOfShort * 2) + userNameLength + osVersionLength;

        outBuffer[0] = id;
        outBuffer[1] = htons(userNameLength);// htons() here

        printf("u_short byte 1: %c%c%c%c%c%c%c%c\n", BYTE_TO_BINARY(outBuffer[1]));
        printf("u_short byte 2: %c%c%c%c%c%c%c%c\n", BYTE_TO_BINARY(outBuffer[2]));

        for (int i = 0; i < userNameLength; i++) {
            outBuffer[1 + sizeOfShort + i] = userName[i];
        }

        outBuffer[1 + sizeOfShort + userNameLength] = htons(osVersionLength);// and here

        for (int i = 0; i < osVersionLength; i++) {
            outBuffer[1 + (sizeOfShort * 2) + userNameLength + i] = osVersion[i];
        }

        int result;
        result = send(connectSocket, (char*)outBuffer, dataLength, 0);
        if (result == SOCKET_ERROR) {
            printf("send failed with error: %d\n", WSAGetLastError());
        }

        printf("PacketInHandshake sent: %ld bytes\n", result);
        return result;
    }

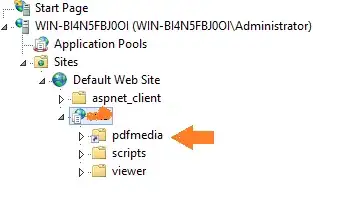

Which results in a packet like this to be sent:

As you can see, the length bytes written with htons() are all zeros, whereas they should be 00 07 and 00 16 respectively.

And this is the console output:

    u_short byte 1: 00000000
    u_short byte 2: 00000000
    PacketInHandshake sent: 34 bytes

If I remove htons() and store the u_short values in the buffer as they are, the output is as expected, in little-endian order:

    u_short byte 1: 00000111
    u_short byte 2: 00000000
    PacketInHandshake sent: 34 bytes

So what am I doing wrong?
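
One possible explanation, sketched under assumptions: outBuffer is a BYTE array, so an assignment like `outBuffer[1] = htons(userNameLength)` can only store the low 8 bits of the 16-bit result. On a little-endian host, htons(7) yields 0x0700, whose low byte is 0x00, which would match the zeros observed above. The snippet below is a minimal illustration of writing both bytes explicitly, not a definitive fix. The names `putU16BE` and `lowByteOnly` are hypothetical helpers introduced here and are not part of the original code or of Winsock.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical helper (an assumption, not from the original code):
// writes a 16-bit value into buf at the given offset in big-endian
// (network) order, one byte at a time. Because it uses shifts rather
// than memory layout, it does not depend on the host's endianness.
inline void putU16BE(std::uint8_t* buf, std::size_t offset, std::uint16_t value) {
    buf[offset]     = static_cast<std::uint8_t>(value >> 8);   // high byte first
    buf[offset + 1] = static_cast<std::uint8_t>(value & 0xFF); // then low byte
}

// Hypothetical helper: shows what a single-byte store keeps of a
// 16-bit value, namely only its low 8 bits.
inline std::uint8_t lowByteOnly(std::uint16_t value) {
    return static_cast<std::uint8_t>(value);
}
```

With these, `putU16BE(outBuffer, 1, userNameLength)` would place 00 07 at offsets 1 and 2 for a 7-character name, while `lowByteOnly(0x0700)` gives 0x00, the same byte the original assignment stores when htons() is applied on a little-endian machine.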