I am building a credit scorecard in Python using this public dataset: https://www.kaggle.com/sivakrishna3311/delinquency-telecom-dataset

It's a binary classification problem:

Target = 1 -> Good applicant

Target = 0 -> Bad applicant

I only have numeric continuous predictive characteristics.

In the credit industry, it is a legal requirement to explain why an applicant was rejected (or did not get the maximum score); to meet that requirement, Adverse Codes are produced.

In a classic logistic regression approach, one would do this:

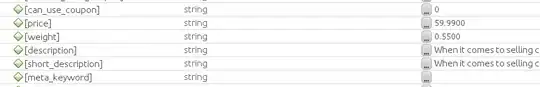

- calculate the Weight-of-Evidence (WoE) for each predictive characteristic (forcing a monotonic relationship between the feature values and the WoE or log(odds)). In the following example, the higher the network Age the higher the Weight-of-Evidence (WoE):

- replace the data values with the correspondent Weight-of-Evidence. For example, a value of 250 for Network Age would be replaced by 0.04 (which is the correspondent WoE).

- Train a logistic regression
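The three steps above can be sketched in Python roughly as follows. This is a minimal illustration under stated assumptions, not a production binning routine: synthetic data stands in for the Kaggle file, the bins are simple quantiles (real scorecards usually start from finer or supervised bins and merge them until the WoE is monotonic), and WoE is taken as ln(% of Goods in the bin / % of Bads in the bin), so with Target = 1 meaning Good a higher WoE means lower risk. The helper names `fit_woe`, `assign_bins` and `woe_transform` are hypothetical.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(0)

# Synthetic stand-in for the real data: the longer the network age, the more likely Good (Target = 1).
n = 5000
network_age = rng.uniform(0, 2000, n)
p_good = 1 / (1 + np.exp(-(network_age - 600) / 300))
target = rng.binomial(1, p_good)


def assign_bins(x, edges):
    """Map each value to a bin index 0..len(edges)-2; out-of-range values go to the end bins."""
    n_bins = len(edges) - 1
    return np.clip(np.searchsorted(edges, x, side="right") - 1, 0, n_bins - 1)


def fit_woe(x, y, n_bins=5):
    """Step 1 (hypothetical helper): quantile-bin a feature and compute WoE = ln(%Good / %Bad) per bin."""
    edges = np.quantile(x, np.linspace(0, 1, n_bins + 1))
    codes = assign_bins(x, edges)
    good = np.bincount(codes, weights=y, minlength=n_bins)
    total = np.bincount(codes, minlength=n_bins)
    bad = total - good
    woe = np.log((good / good.sum()) / (bad / bad.sum()))
    return edges, woe


def woe_transform(x, edges, woe):
    """Step 2 (hypothetical helper): replace each raw value by the WoE of its bin."""
    return woe[assign_bins(x, edges)]


edges, woe = fit_woe(network_age, target)
# In a real scorecard you would merge adjacent bins until this check passes.
print("WoE per bin:", np.round(woe, 3))
print("monotonic increasing:", bool(np.all(np.diff(woe) > 0)))

# Step 3: logistic regression on the WoE-transformed feature.
x_woe = woe_transform(network_age, edges, woe).reshape(-1, 1)
lr = LogisticRegression().fit(x_woe, target)
print("coefficient:", lr.coef_[0, 0], "intercept:", lr.intercept_[0])
```

With a single WoE-transformed feature the fitted coefficient should come out close to 1, because each bin's WoE already equals that bin's log-odds minus the overall log-odds; with several characteristics the coefficients adjust for the correlation between them.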

After some linear transformations (scaling the log-odds into points), you'd get something like this:

It would then be straightforward to assign the Adverse Codes so that the bin with the maximum score does not return an Adverse Code. For example:
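The linear transformation and the Adverse Code assignment can be sketched as follows. This assumes the common points-to-double-the-odds (PDO) scaling, `Score = Offset + Factor * ln(odds of Good)` with `Factor = PDO / ln(2)`, spreads the intercept and offset evenly across characteristics, and ranks Adverse Codes with the "points below maximum" rule (points lost relative to the best bin of each characteristic). The anchors (600 points at 50:1 odds, PDO = 20), the second characteristic `avg_recharge`, the bin labels, the coefficients and every WoE value except the 0.04 from the example above are hypothetical.

```python
import math

# Hypothetical scaling anchors: 600 points at odds of 50:1 (Good:Bad), 20 points to double the odds.
PDO, TARGET_SCORE, TARGET_ODDS = 20, 600, 50
FACTOR = PDO / math.log(2)
OFFSET = TARGET_SCORE - FACTOR * math.log(TARGET_ODDS)

# Hypothetical logistic regression fitted on WoE features (models the log-odds of Good).
INTERCEPT = 1.5
COEFS = {"network_age": 0.9, "avg_recharge": 1.1}

# Hypothetical WoE per bin for each characteristic.
WOE = {
    "network_age": {"<100": -0.60, "100-300": 0.04, ">=300": 0.55},
    "avg_recharge": {"low": -0.40, "mid": 0.10, "high": 0.70},
}
N_CHARS = len(COEFS)


def bin_points(char, woe):
    """Points for one bin: (beta * WoE + intercept / n) * Factor + Offset / n."""
    return (COEFS[char] * woe + INTERCEPT / N_CHARS) * FACTOR + OFFSET / N_CHARS


# Scorecard: rounded points for every bin of every characteristic.
SCORECARD = {c: {b: round(bin_points(c, w)) for b, w in bins.items()}
             for c, bins in WOE.items()}


def score_and_reasons(applicant, max_reasons=2):
    """Total score plus Adverse Codes ranked by points lost versus each characteristic's best bin."""
    total, lost = 0, []
    for char, bin_label in applicant.items():
        pts = SCORECARD[char][bin_label]
        total += pts
        gap = max(SCORECARD[char].values()) - pts
        if gap > 0:  # the maximum-score bin never returns an Adverse Code
            lost.append((gap, char))
    lost.sort(reverse=True)
    return total, [char for _, char in lost[:max_reasons]]


score, reasons = score_and_reasons({"network_age": "100-300", "avg_recharge": "low"})
print(SCORECARD)
print(score, reasons)
```

Other ranking rules exist (for example, comparing against the population-average points instead of the maximum); the maximum-based rule is used here because it matches the requirement that the maximum-score bin never returns a code.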

Now I want to train an XGBoost model (which typically outperforms logistic regression on imbalanced, low-noise data). XGBoost models are very predictive but need to be explained (typically via SHAP).

I have read that, in order to make the model's decisions explainable, you must ensure that monotonic constraints are applied.

Question 1. Does this mean that I need to train XGBoost on the Weight-of-Evidence-transformed data, as is done with the logistic regression (see point 2 above)?

Question 2. In Python, the XGBoost package offers the option to set monotonic constraints (via the `monotone_constraints` parameter). If I don't transform the data by replacing the values with their Weight-of-Evidence (and therefore don't enforce any monotonic relationship beforehand), does it still make sense to use `monotone_constraints` in XGBoost for a binary problem? In other words, does it make sense to use `monotone_constraints` with an `XGBClassifier` at all?

Thanks.