A relatively simple way to save and view the image is to write the Y, U and V (planar) data to a binary file, and then use the FFmpeg CLI to convert the binary file to RGB.

Some background:

In FFmpeg (libav) terminology, yuvj420p refers to the YUV420 "full range" format.

I suppose the j in yuvj comes from JPEG, since JPEG images use the "full range" YUV420 format.

Most video files use the "limited range" (or TV range) YUV format.

- In "limited range", Y range is [16, 235], U range is [16, 240] and V range is [16, 240].

- In "full range", Y range is [0, 255], U range is [0, 255] and V range is [0, 255].

yuvj420p is deprecated; it is supposed to be replaced by yuv420p combined with dst_range 1 (or src_range 1) in FFmpeg CLI. I never looked for a way to define "full range" in C.

yuvj420p in FFmpeg (libav) uses a "planar" format: there are separate planes for the Y channel, the U channel and the V channel.

The Y plane is given in full resolution, and the U and V planes are down-scaled by a factor of 2 in each axis.

Illustration:

```
Y - data[0]: YYYYYYYYYYYY
             YYYYYYYYYYYY
             YYYYYYYYYYYY
             YYYYYYYYYYYY
             YYYYYYYYYYYY
             YYYYYYYYYYYY

U - data[1]: UUUUUU
             UUUUUU
             UUUUUU

V - data[2]: VVVVVV
             VVVVVV
             VVVVVV
```

In C, each "plane" is stored in a separate buffer in memory.

When writing the data to a binary file, we may simply write the buffers to the file one after the other (Y, then U, then V), row by row when a plane's linesize is larger than its width.

For demonstration, I am reusing my following answer.

I copied and pasted the complete answer, and replaced YUV420 with YUVJ420.

In the example, the input format is NV12 (and I kept it).

The input format is irrelevant (you may ignore it); only the output format is relevant for your question.

I have created a "self-contained" code sample that demonstrates the conversion from NV12 to YUV420 (yuvj420p) using sws_scale.

- Start by building a synthetic input frame using FFmpeg (command line tool). The command creates a 320x240 video frame in raw NV12 format:

```
ffmpeg -y -f lavfi -i testsrc=size=320x240:rate=1 -vcodec rawvideo -pix_fmt nv12 -frames 1 -f rawvideo nv12_image.bin
```

The next code sample applies the following stages:

- Allocate memory for the source frame (in NV12 format).
- Read NV12 data from a binary file (for testing).
- Allocate memory for the destination frame (in YUV420 / yuvj420p format).
- Apply color space conversion (using sws_scale).
- Write the converted YUV420 (yuvj420p) data to a binary file (for testing).

Here is the complete code:

```cpp
#include <cstdio>   //FILE, fopen, fread, fwrite, fclose
#include <cstdint>  //uint8_t, uintptr_t

//Use extern "C", because the code is built as C++ (cpp file) and not C.
extern "C"
{
#include <libswscale/swscale.h>
#include <libavformat/avformat.h>
#include <libswresample/swresample.h>
#include <libavutil/pixdesc.h>
#include <libavutil/imgutils.h>
}

int main()
{
    int width = 320;
    int height = 240; //The code sample assumes height is even.
    int align = 0;
    AVPixelFormat srcPxlFormat = AV_PIX_FMT_NV12;
    AVPixelFormat dstPxlFormat = AV_PIX_FMT_YUVJ420P;
    int sts;

    //Source frame allocation
    ////////////////////////////////////////////////////////////////////////////
    AVFrame* pNV12Frame = av_frame_alloc();
    pNV12Frame->format = srcPxlFormat;
    pNV12Frame->width = width;
    pNV12Frame->height = height;
    sts = av_frame_get_buffer(pNV12Frame, align);

    if (sts < 0)
    {
        return -1; //Error!
    }
    ////////////////////////////////////////////////////////////////////////////

    //Read NV12 data from binary file (for testing)
    ////////////////////////////////////////////////////////////////////////////
    //Use FFmpeg for building raw NV12 image (used as input).
    //ffmpeg -y -f lavfi -i testsrc=size=320x240:rate=1 -vcodec rawvideo -pix_fmt nv12 -frames 1 -f rawvideo nv12_image.bin
    FILE* f = fopen("nv12_image.bin", "rb");

    if (f == NULL)
    {
        return -1; //Error!
    }

    //Read Y channel from nv12_image.bin (Y channel size is width x height).
    //Reading row by row is required when pNV12Frame->linesize[0] != width (rows padded for alignment).
    uint8_t* Y = pNV12Frame->data[0]; //Pointer to Y color channel of the NV12 frame.

    for (int row = 0; row < height; row++)
    {
        fread(Y + (uintptr_t)row * pNV12Frame->linesize[0], 1, width, f); //Read row (width pixels) to Y0.
    }

    //Read UV channel from nv12_image.bin (UV channel size is width x height/2).
    uint8_t* UV = pNV12Frame->data[1]; //Pointer to UV color channels of the NV12 frame (ordered as UVUVUVUV...).

    for (int row = 0; row < height / 2; row++)
    {
        fread(UV + (uintptr_t)row * pNV12Frame->linesize[1], 1, width, f); //Read row (width pixels) to UV0.
    }

    fclose(f);
    ////////////////////////////////////////////////////////////////////////////

    //Destination frame allocation
    ////////////////////////////////////////////////////////////////////////////
    AVFrame* pYUV420Frame = av_frame_alloc();
    pYUV420Frame->format = dstPxlFormat;
    pYUV420Frame->width = width;
    pYUV420Frame->height = height;
    sts = av_frame_get_buffer(pYUV420Frame, align);

    if (sts < 0)
    {
        return -1; //Error!
    }
    ////////////////////////////////////////////////////////////////////////////

    //Color space conversion
    ////////////////////////////////////////////////////////////////////////////
    SwsContext* sws_context = sws_getContext(width,
                                             height,
                                             srcPxlFormat,
                                             width,
                                             height,
                                             dstPxlFormat,
                                             SWS_FAST_BILINEAR,
                                             NULL,
                                             NULL,
                                             NULL);

    if (sws_context == NULL)
    {
        return -1; //Error!
    }

    sts = sws_scale(sws_context,            //struct SwsContext* c,
                    pNV12Frame->data,       //const uint8_t* const srcSlice[],
                    pNV12Frame->linesize,   //const int srcStride[],
                    0,                      //int srcSliceY,
                    pNV12Frame->height,     //int srcSliceH,
                    pYUV420Frame->data,     //uint8_t* const dst[],
                    pYUV420Frame->linesize); //const int dstStride[]);

    if (sts != pYUV420Frame->height)
    {
        return -1; //Error!
    }
    ////////////////////////////////////////////////////////////////////////////

    //Write YUV420 (yuvj420p) data to binary file (for testing)
    ////////////////////////////////////////////////////////////////////////////
    //Use FFmpeg for converting the binary image to PNG after saving the data.
    //ffmpeg -y -f rawvideo -video_size 320x240 -pixel_format yuvj420p -i yuvj420_image.bin -pix_fmt rgb24 rgb_image.png
    f = fopen("yuvj420_image.bin", "wb");

    if (f == NULL)
    {
        return -1; //Error!
    }

    //Write Y channel to yuvj420_image.bin (Y channel size is width x height).
    //Writing row by row is required when pYUV420Frame->linesize[0] != width (rows padded for alignment).
    Y = pYUV420Frame->data[0]; //Pointer to Y color channel of the YUV420 frame.

    for (int row = 0; row < height; row++)
    {
        fwrite(Y + (uintptr_t)row * pYUV420Frame->linesize[0], 1, width, f); //Write row (width pixels) to file.
    }

    //Write U channel to yuvj420_image.bin (U channel size is width/2 x height/2).
    uint8_t* U = pYUV420Frame->data[1]; //Pointer to U color channel of the YUV420 frame.

    for (int row = 0; row < height / 2; row++)
    {
        fwrite(U + (uintptr_t)row * pYUV420Frame->linesize[1], 1, width / 2, f); //Write row (width/2 pixels) to file.
    }

    //Write V channel to yuvj420_image.bin (V channel size is width/2 x height/2).
    uint8_t* V = pYUV420Frame->data[2]; //Pointer to V color channel of the YUV420 frame.

    for (int row = 0; row < height / 2; row++)
    {
        fwrite(V + (uintptr_t)row * pYUV420Frame->linesize[2], 1, width / 2, f); //Write row (width/2 pixels) to file.
    }

    fclose(f);
    ////////////////////////////////////////////////////////////////////////////

    //Cleanup
    ////////////////////////////////////////////////////////////////////////////
    sws_freeContext(sws_context);
    av_frame_free(&pYUV420Frame);
    av_frame_free(&pNV12Frame);
    ////////////////////////////////////////////////////////////////////////////

    return 0;
}
```

The execution shows a warning message (which we may ignore):

```
[swscaler @ 000002a19227e640] deprecated pixel format used, make sure you did set range correctly
```

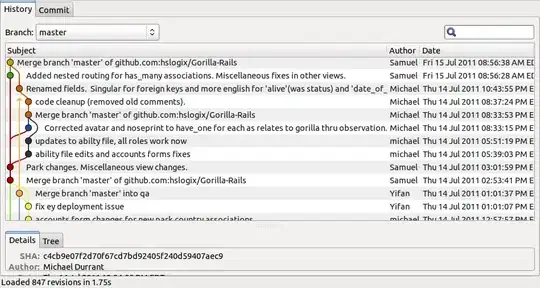

For viewing the output as a colored image:

- After executing the code, execute FFmpeg (command line tool). The following command converts the raw binary frame (in YUV420 / yuvj420p format) to PNG (in RGB format):

```
ffmpeg -y -f rawvideo -video_size 320x240 -pixel_format yuvj420p -i yuvj420_image.bin -pix_fmt rgb24 rgb_image.png
```

Sample output (after converting from yuvj420p to PNG image file format):