I am using the multinom function from the nnet package for multinomial logistic regression. My dataset has 3 features and 14 different classes, with a total of 1000 observations. The classes I have are: 0,1,2,3,4,5,6,7,8,9,10,11,13,15

I split the data set into a proper training set and a calibration set, where the calibration set contains only one class of labels (say 4) and the training set contains all classes except 4. I then train the model as

    library(nnet)
    modelfit <- multinom(label ~ x1 + x2 + x3, data = train)

Now, I use calibration data to find predicted probabilities as:

    predProb = predict(modelfit, newdata = calib_set, type = "prob")

where calib_set has only the three features and no Y column. predProb then gives me probabilities for all classes except class 11, for every observation in the calibration data.

Also, when I use any test data point, I get predicted probabilities for all classes except class 11.

Can someone explain why class 11 is missing and how I can get predicted probabilities for all classes?
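To make the setup reproducible, here is a minimal sketch on made-up data that compares the classes in the label set with the columns of the probability matrix. The data frame `dat`, the random features and labels, and the helper names `train_counts` and `missing_cols` are assumptions for illustration only, not my real data.

```r
library(nnet)

set.seed(1)

# Hypothetical stand-in data: 3 features, labels drawn from the 14 classes above
classes <- c(0:11, 13, 15)
n <- 1000
dat <- data.frame(
  x1 = rnorm(n),
  x2 = rnorm(n),
  x3 = rnorm(n),
  label = factor(sample(classes, n, replace = TRUE), levels = classes)
)

# Calibration set: features of class 4 only; training set: every other class
calib_set <- dat[dat$label == 4, c("x1", "x2", "x3")]
train <- dat[dat$label != 4, ]

# Per-class counts in the training set; a zero means the model never sees that class
train_counts <- table(train$label)
print(train_counts)

modelfit <- multinom(label ~ x1 + x2 + x3, data = train, trace = FALSE)
predProb <- predict(modelfit, newdata = calib_set, type = "prob")

# Classes from the label set that have no column in the probability matrix
missing_cols <- setdiff(as.character(classes), colnames(predProb))
print(missing_cols)
```

Running the same two checks (`table(train$label)` and the `setdiff` against `colnames(predProb)`) on the real data should show whether class 11 has any rows in the training set and exactly which columns are absent.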

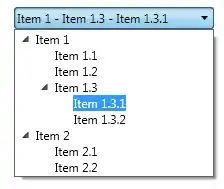

The picture shows the predicted probabilities for the calibration data. Class 11 is missing (classes 12 and 14 can be missing because they are not among my classes). Any suggestions or advice would be much appreciated.