What I'm trying to do:

An Android application (ADMIN) that takes a job title from the user and fetches all related jobs using Scrapy (Python). The jobs are then saved to the database through an API.

An Android application (CLIENT) that fetches all the data from the database through the API.

Problem: I'm stuck on how to connect my Python script to Ktor.

Some code for more clarity:

```python
import spiders.Linkedin as linkedinSpider

linkedinSpider.main(numberOfPages=1, keywords="ios developer", location="US")
```

This piece of code works fine. It fetches the jobs, stores them in the database, and returns `True` if they were saved successfully, else `False`. I just need to call this function and all the work is done for me.

Similarly, this is how I get data from the user through the API on the ADMIN side using Ktor. This also works fine:

```kotlin
// Fetches new jobs.
get("/fetch") {
    val numberOfPages = call.request.queryParameters["pages"]?.toInt() ?: 1
    val keyword = call.request.queryParameters["keyword"]
    val location = call.request.queryParameters["location"]

    if (numberOfPages < 1) {
        call.respond(
            status = HttpStatusCode.BadRequest,
            message = Message(message = "Incorrect Page Number.")
        )
    } else {
        call.respond(
            status = HttpStatusCode.OK,
            message = Message(message = "Process Completed.")
        )
    }
}
```

This is how I want the code to work logically:

```kotlin
// Fetch new jobs.
get("/fetch") {
    val numberOfPages = call.request.queryParameters["pages"]?.toInt() ?: 1
    val keyword = call.request.queryParameters["keyword"]
    val location = call.request.queryParameters["location"]

    if (numberOfPages < 1) {
        call.respond(
            status = HttpStatusCode.BadRequest,
            message = Message(message = "Incorrect Page Number.")
        )
    } else {
        // TODO: Call the Python function here and check its return value. Something like this:
        if (linkedinSpider.main(numberOfPages=numberOfPages, keywords=keyword, location=location)) {
            call.respond(
                status = HttpStatusCode.OK,
                message = Message(message = "Process Completed.")
            )
        } else {
            call.respond(
                status = HttpStatusCode.InternalServerError,
                message = Message(message = "Something Went Wrong.")
            )
        }
    }
}
```
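Kotlin on the JVM can't call `linkedinSpider.main` directly, so the usual bridge is to run the script as a separate process and let its exit code stand in for the Python function's return value. Below is a minimal sketch under some assumptions. It assumes `main.py` takes the page count, keyword and location as positional arguments (as in the Edit 2 command). It also assumes the script ends with something like `sys.exit(0 if ok else 1)`. `runSpider` and its parameters are hypothetical names.

```kotlin
import java.io.File

/**
 * Hypothetical helper: runs the scraper as a child process and maps its exit code
 * to a Boolean. Assumes the script exits with status 0 only when the scrape succeeded.
 */
fun runSpider(
    pages: Int,
    keyword: String,
    location: String,
    scriptDir: File,
    interpreter: String = "python3",
    script: String = "main.py"
): Boolean {
    val process = ProcessBuilder(interpreter, script, pages.toString(), keyword, location)
        .directory(scriptDir) // the child's working directory, so `script` resolves inside the scraper project
        .inheritIO()          // child output goes to the server's own console, so no pipe can fill up
        .start()
    return process.waitFor() == 0 // blocks the calling thread until the script exits
}
```

`waitFor()` blocks, so inside the route the call would typically be wrapped in `withContext(Dispatchers.IO) { ... }`. Also, `keyword` and `location` are nullable in the route. They need a default value or a `BadRequest` response before being passed in, because `ProcessBuilder.start()` throws a `NullPointerException` for a null argument.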

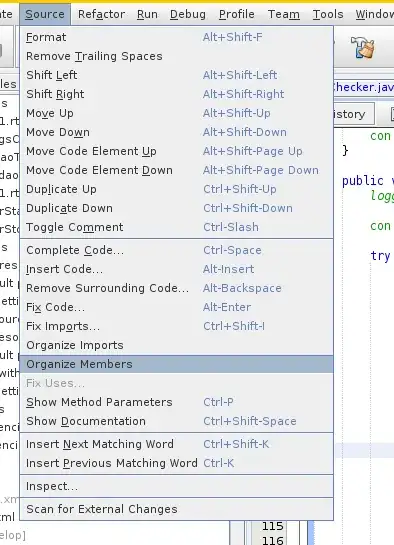

Also, these are two separate, independent projects. Do I need to merge them? When I tried to call the Python code, IntelliJ (which I use as the IDE for Ktor development) said no Python interpreter was found. I also tried to configure a Python interpreter, but it doesn't seem to help: the Kotlin code still can't reference the Python files or their variables.

Edit 1

This is what I tried:

```kotlin
val processBuilder = ProcessBuilder(
    "python3", "main.py"
)

val exitCode = processBuilder.start().waitFor()

if (exitCode == 0) {
    println("Process Completed.")
} else {
    println("Something Went Wrong.")
}
```

The exit code I get is 2. But when I run the code below, it works with exit code 0:

```kotlin
ProcessBuilder("python3", "--version")
```
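Exit code 2 is what CPython returns when it cannot open the script file. It prints a `can't open file ... [Errno 2] No such file or directory` message to stderr, which the Edit 1 code never reads. A relative `main.py` is resolved against the child's working directory. That defaults to the directory the Ktor server was started from, not the folder that contains the script. `python3 --version` needs no file, which is why it succeeds. A minimal sketch that resolves the script to an absolute path and fails fast before starting Python follows. `pythonProcess` is a hypothetical helper name.

```kotlin
import java.io.File
import java.io.FileNotFoundException

/**
 * Hypothetical helper: builds a ProcessBuilder for a Python script using an absolute
 * path, so the result doesn't depend on the server's current working directory.
 */
fun pythonProcess(script: File, vararg args: String, interpreter: String = "python3"): ProcessBuilder {
    val absolute = script.absoluteFile
    if (!absolute.isFile) {
        // Fail here with a clear message instead of getting exit code 2 from Python later.
        throw FileNotFoundException("Python script not found: $absolute")
    }
    return ProcessBuilder(listOf(interpreter, absolute.path) + args)
        .directory(absolute.parentFile) // relative paths used inside the script resolve next to it
}
```

For example, `pythonProcess(File("/path/to/scraper/main.py"), "1", "android", "india").start()` runs the script regardless of where the server was launched. The path here is only a placeholder.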

Edit 2

After this change, the code runs but never terminates, and I get no output.

This is the Kotlin file I made to reproduce the problem:

```kotlin
package com.bhardwaj.routes

import java.io.File

fun main() {
    val processBuilder = ProcessBuilder(
        "python3", "main.py", "1", "android", "india"
    )
    processBuilder.directory(File("src/main/kotlin/com/bhardwaj/"))

    val process = processBuilder.start()
    val exitCode = process.waitFor()

    if (exitCode == 0) {
        val output = String(process.inputStream.readBytes())
        print("Process Completed -> $output")
    } else {
        val output = String(process.errorStream.readBytes())
        print("Something went wrong -> $output")
    }
}
```

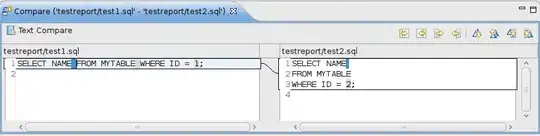

If I run the same command in the terminal, it works:

```shell
python3 src/main/kotlin/com/bhardwaj/main.py 1 "android" "india"
```

The terminal output is:

Edit 3

When I turned off Scrapy's default logging, the code worked. It seems the output stream of a process started with ProcessBuilder can only hold a limited amount of data. Here is what I did:

```python
def __init__(self, number_of_pages=1, keywords="", location="", **kwargs):
    super().__init__(**kwargs)
    logging.getLogger('scrapy').setLevel(logging.WARNING)
```
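This matches a pitfall documented for `java.lang.Process`. The child's stdout and stderr are OS pipes with a limited buffer, and the Edit 2 code calls `waitFor()` before reading either stream. Scrapy logs to stderr by default. Once that buffer is full, the Python process blocks on its next write while Kotlin waits for it to exit, so neither side makes progress. Lowering the log level only avoids this while the output stays small. A minimal sketch of the general fix is to merge stderr into stdout and read it to the end *before* waiting. `runAndCapture` is a hypothetical name.

```kotlin
import java.io.File

/**
 * Hypothetical helper: runs a command and captures stdout and stderr together.
 * The output is read to EOF before waitFor(), so the child never blocks on a full pipe.
 */
fun runAndCapture(command: List<String>, workDir: File): Pair<Int, String> {
    val process = ProcessBuilder(command)
        .directory(workDir)
        .redirectErrorStream(true) // one combined stream to drain instead of two
        .start()
    // readText() returns once the child closes its output, normally when it exits.
    val output = process.inputStream.bufferedReader().use { it.readText() }
    val exitCode = process.waitFor()
    return exitCode to output
}
```

If the logs aren't needed in memory, an alternative is `redirectErrorStream(true)` plus `redirectOutput(ProcessBuilder.Redirect.appendTo(File("scrapy.log")))`. This sends everything to a file, so there is no pipe to fill. The file name is just an example.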

This worked flawlessly in development. But there is something more I want to discuss. When I deployed the same code on Heroku and made a GET request, I got a 503 Service Unavailable response after 30 to 40 seconds. Yet 20 to 30 seconds after that, the data shows up in the database. I find it very confusing why this is happening.

For clarity, here is the flow of the program:

When I make a GET request to Ktor, it asks Scrapy (a Python program) to scrape the data and store it in a JSON file. Once that is done, the Python program makes a request to another endpoint to store all the data in the database. Only after all of this does Ktor return, responding to the user via `call.respond` with the appropriate status code.

Is the issue caused by the second request that the Python program makes to the endpoint? Or is it related to Heroku, e.g. only being able to handle one process at a time? There is no issue in development mode, i.e. on the localhost URL.
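The timing looks consistent with Heroku's router timeout. Heroku expects a web dyno to start sending a response within 30 seconds. If it doesn't, the router returns a 503 (error code H12) to the client while the dyno keeps working. That would explain why the data still reaches the database afterwards, and why localhost (no router in between) is unaffected.

A minimal sketch of one way to stay under that limit, assuming the scrape can run in the background: start the job, answer immediately with a job id, and let the admin app poll for the result. `ScrapeJobs` and `JobStatus` are hypothetical names.

```kotlin
import java.util.UUID
import java.util.concurrent.ConcurrentHashMap
import java.util.concurrent.ExecutorService
import java.util.concurrent.Executors
import java.util.concurrent.TimeUnit

enum class JobStatus { RUNNING, SUCCEEDED, FAILED }

/**
 * Hypothetical in-memory job registry: runs scrape jobs on a background thread so the
 * HTTP handler can respond right away. A single-thread executor runs jobs one at a time.
 * State is lost on restart; a real deployment might keep it in the database instead.
 */
class ScrapeJobs(private val executor: ExecutorService = Executors.newSingleThreadExecutor()) {
    private val statuses = ConcurrentHashMap<String, JobStatus>()

    /** Queues [work] and returns an id the client can poll; exceptions count as failure. */
    fun submit(work: () -> Boolean): String {
        val id = UUID.randomUUID().toString()
        statuses[id] = JobStatus.RUNNING
        executor.execute {
            val ok = runCatching { work() }.getOrDefault(false)
            statuses[id] = if (ok) JobStatus.SUCCEEDED else JobStatus.FAILED
        }
        return id
    }

    /** Returns null for an unknown id. */
    fun status(id: String): JobStatus? = statuses[id]

    /** Stops accepting jobs and waits for queued ones; true if they all finished in time. */
    fun shutdown(timeoutSeconds: Long = 60): Boolean {
        executor.shutdown()
        return executor.awaitTermination(timeoutSeconds, TimeUnit.SECONDS)
    }
}
```

In the `/fetch` route, this would roughly be `val id = jobs.submit { /* run the Python script */ }` followed by `call.respond(HttpStatusCode.Accepted, ...)` with the id. A second route would then return `jobs.status(id)`.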