Given two pandas dataframes of different shapes, what is the fastest way to select all rows in one dataframe that do not exist in the other (or, equivalently, to drop all rows in one dataframe that already exist in the other)? And do the fastest methods differ for string-valued columns vs. numeric columns? The operation should be roughly equivalent to the code below.

import pandas as pd

string_df1 = pd.DataFrame({'latin': ['a', 'b', 'c'],
                           'greek': ['alpha', 'beta', 'gamma']})
string_df2 = pd.DataFrame({'latin': ['z', 'c'],
                           'greek': ['omega', 'gamma']})
numeric_df1 = pd.DataFrame({'A': [1, 2, 3],
                            'B': [1.01, 2.02, 3.03]})
numeric_df2 = pd.DataFrame({'A': [3, 9],
                            'B': [3.03, 9.09]})

def index_matching_rows(df1, df2, cols_to_match=None):
    '''
    return index of subset of rows of df1 that are equal to at least one row in df2
    '''
    if cols_to_match is None:
        cols_to_match = df1.columns
    df1 = df1.reset_index()
    m = df1.merge(df2, on=cols_to_match[0], suffixes=('1','2'))
    query = '&'.join(['{0}1 == {0}2'.format(str(c)) for c in cols_to_match[1:]])
    m = m.query(query)
    return m['index']

print(string_df2.drop(index_matching_rows(string_df2, string_df1)))
print(numeric_df2.drop(index_matching_rows(numeric_df2, numeric_df1)))

Output:

  latin  greek
0     z  omega
   A     B
1  9  9.09
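For comparison, the same operation can be written as an anti-join using `merge` with `indicator=True`. This is a minimal sketch and has not been benchmarked here. The helper name `rows_not_in` is hypothetical, and it assumes both dataframes share the columns being matched.

```python
import pandas as pd

string_df1 = pd.DataFrame({'latin': ['a', 'b', 'c'],
                           'greek': ['alpha', 'beta', 'gamma']})
string_df2 = pd.DataFrame({'latin': ['z', 'c'],
                           'greek': ['omega', 'gamma']})
numeric_df1 = pd.DataFrame({'A': [1, 2, 3],
                            'B': [1.01, 2.02, 3.03]})
numeric_df2 = pd.DataFrame({'A': [3, 9],
                            'B': [3.03, 9.09]})

def rows_not_in(df1, df2, cols_to_match=None):
    """Return the rows of df1 that match no row of df2 (hypothetical helper)."""
    if cols_to_match is None:
        cols_to_match = list(df1.columns)
    # Deduplicate the right-hand keys so the left join cannot multiply rows.
    keys = df2[cols_to_match].drop_duplicates()
    merged = df1[cols_to_match].merge(keys, on=cols_to_match,
                                      how='left', indicator=True)
    # With unique right-hand keys, a left join yields one output row per left
    # row, in the left frame's order, so the mask can be applied by position.
    mask = (merged['_merge'] == 'left_only').to_numpy()
    return df1[mask]

string_result = rows_not_in(string_df2, string_df1)
numeric_result = rows_not_in(numeric_df2, numeric_df1)
print(string_result)
print(numeric_result)
```

Matching on all columns at once, rather than merging on the first column and filtering afterwards, keeps the intermediate result the same length as `df1`.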

Some naive performance testing:

copies = 10
big_sdf1 = pd.concat([string_df1, string_df1]*copies)
big_sdf2 = pd.concat([string_df2, string_df2]*copies)
big_ndf1 = pd.concat([numeric_df1, numeric_df1]*copies)
big_ndf2 = pd.concat([numeric_df2, numeric_df2]*copies)

%%timeit
big_sdf2.drop(index_matching_rows(big_sdf2, big_sdf1))
# copies = 10: 2.61 ms ± 27.5 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)
# copies = 20: 4.44 ms ± 43.8 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)
# copies = 30: 18.4 ms ± 132 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)
# copies = 40: 74.6 ms ± 453 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)
# copies = 100: 19.2 s ± 112 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

%%timeit
big_ndf2.drop(index_matching_rows(big_ndf2, big_ndf1))
# copies = 10: 2.56 ms ± 29.2 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)
# copies = 20: 4.38 ms ± 75.4 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)
# copies = 30: 18.3 ms ± 194 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)
# copies = 40: 76.5 ms ± 1.76 ms per loop (mean ± std. dev. of 7 runs, 10 loops each)

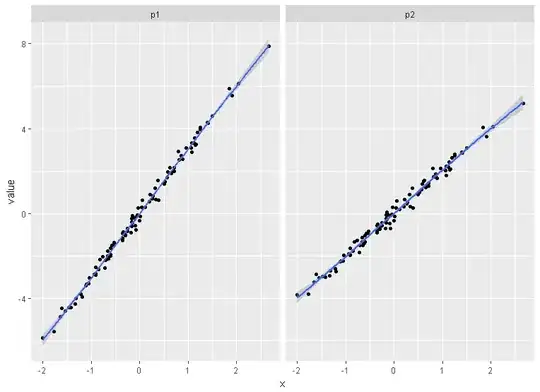

This code runs about as quickly for strings as for numeric data, and I think its run time is exponential in the length of the dataframe (the curve above is 1.6*exp(0.094x), fit to the string data). I'm working with dataframes on the order of 1e5 rows, so this approach is not workable for me.
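A likely contributor to the blow-up is the merge on only the first column. Because the test frames repeat the same keys many times, that merge is a many-to-many join, and its intermediate result grows with the square of `copies`. This is an inference from the code rather than a profiled result, and it may not account for all of the observed slowdown. The sketch below only counts the merged rows. The helper name `merged_row_count` is hypothetical.

```python
import pandas as pd

string_df1 = pd.DataFrame({'latin': ['a', 'b', 'c'],
                           'greek': ['alpha', 'beta', 'gamma']})
string_df2 = pd.DataFrame({'latin': ['z', 'c'],
                           'greek': ['omega', 'gamma']})

def merged_row_count(copies):
    """Count the rows produced by merging on the first column only."""
    big_sdf1 = pd.concat([string_df1, string_df1] * copies)
    big_sdf2 = pd.concat([string_df2, string_df2] * copies)
    # 'c' appears 2*copies times in each frame, so the inner join on 'latin'
    # pairs every 'c' row on the left with every 'c' row on the right.
    merged = big_sdf2.reset_index().merge(big_sdf1, on='latin',
                                          suffixes=('1', '2'))
    return len(merged)

counts = {copies: merged_row_count(copies) for copies in (10, 20, 40)}
for copies, n_rows in counts.items():
    print(copies, n_rows)  # n_rows equals 4 * copies**2 for this test data
```

Real data with mostly distinct values in the first column would produce a much smaller intermediate result, so the duplicated test data is close to a worst case for this function.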

Here's the same performance check for Raymond Kwok's (accepted) answer below in case anyone can beat it later. Its run time is O(n): in the timings below, each tenfold increase in copies takes about ten times as long.

%%timeit
big_sdf1_tuples = big_sdf1.apply(tuple, axis=1)
big_sdf2_tuples = big_sdf2.apply(tuple, axis=1)
big_sdf2_tuples.isin(big_sdf1_tuples)
# copies = 100: 4.82 ms ± 22 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)
# copies = 1000: 44.6 ms ± 386 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)
# copies = 1e4: 450 ms ± 9.44 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)
# copies = 1e5: 4.42 s ± 27.6 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

%%timeit
big_ndf1_tuples = big_ndf1.apply(tuple, axis=1)
big_ndf2_tuples = big_ndf2.apply(tuple, axis=1)
big_ndf2_tuples.isin(big_ndf1_tuples)
# copies = 100: 4.98 ms ± 28.6 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)
# copies = 1000: 47 ms ± 288 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)
# copies = 1e4: 461 ms ± 4.41 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)
# copies = 1e5: 4.58 s ± 30.1 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

Indexing into the longest dataframe with

big_sdf2_tuples.loc[~big_sdf2_tuples.isin(big_sdf1_tuples)]

to recover the equivalent of the output in my code above adds about 10 ms.
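To get back rows of the original dataframe rather than a Series of tuples, the boolean mask can be applied to the dataframe itself. The sketch below wraps the tuple-and-`isin` idea timed above in a small helper. The name `drop_rows_present_in` is hypothetical, and the helper assumes both dataframes have the same columns in the same order.

```python
import pandas as pd

string_df1 = pd.DataFrame({'latin': ['a', 'b', 'c'],
                           'greek': ['alpha', 'beta', 'gamma']})
string_df2 = pd.DataFrame({'latin': ['z', 'c'],
                           'greek': ['omega', 'gamma']})
numeric_df1 = pd.DataFrame({'A': [1, 2, 3],
                            'B': [1.01, 2.02, 3.03]})
numeric_df2 = pd.DataFrame({'A': [3, 9],
                            'B': [3.03, 9.09]})

def drop_rows_present_in(df, other):
    """Return the rows of df that do not appear in other (hypothetical helper)."""
    # Turn each row into a hashable tuple so whole rows can be compared.
    df_tuples = df.apply(tuple, axis=1)
    other_tuples = other.apply(tuple, axis=1)
    # Convert the mask to a NumPy array so it is applied by position, which
    # also works when df has repeated index labels (as the concatenated
    # test frames do).
    keep = (~df_tuples.isin(other_tuples)).to_numpy()
    return df.loc[keep]

string_result = drop_rows_present_in(string_df2, string_df1)
numeric_result = drop_rows_present_in(numeric_df2, numeric_df1)
print(string_result)
print(numeric_result)
```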