I would like to look at the compound feature importance of the principal components with DALEX model_parts but I am also interested to what extent the results are driven by variation in a specific variable in this principal component. I can look at individual feature influence very neatly when using model_profile but in that case, I cannot investigate the feature importance of the PCA variables. Is it possible to get the best of both world and look at the compound feature importance of a principal component while using model_profile partial dependence plots of individual factors as shown below?

Data:

library(tidymodels)

library(parsnip)

library(DALEXtra)

set.seed(1)

x1 <- rbinom(1000, 5, .1)

x2 <- rbinom(1000, 5, .4)

x3 <- rbinom(1000, 5, .9)

x4 <- rbinom(1000, 5, .6)

# id <- c(1:1000)

y <- as.factor(rbinom(1000, 5, .5))

df <- tibble(y, x1, x2, x3, x4, id)

df[, c("x1", "x2", "x3", "x4", "id")] <- sapply(df[, c("x1", "x2", "x3", "x4", "id")], as.numeric)

Model

# create training and test set

set.seed(20)

split_dat <- initial_split(df, prop = 0.8)

train <- training(split_dat)

test <- testing(split_dat)

# use cross-validation

kfolds <- vfold_cv(df)

# recipe

rec_pca <- recipe(y ~ ., data = train) %>%

update_role(id, new_role = "id variable") %>%

step_center(all_predictors()) %>%

step_scale(all_predictors()) %>%

step_pca(x1, x2, x3, threshold = 0.9, num_comp = turn_off_pca)

# parsnip engine

boost_model <- boost_tree() %>%

set_mode("classification") %>%

set_engine("xgboost")

# create wf

boosted_wf <-

workflow() %>%

add_model(boost_model) %>%

add_recipe(rec_pca)

final_boosted <- generics::fit(boosted_wf, df)

# create an explanation object

explainer_xgb <- DALEXtra::explain_tidymodels(final_boosted,

data = df[,-1],

y = df$y)

# feature importance

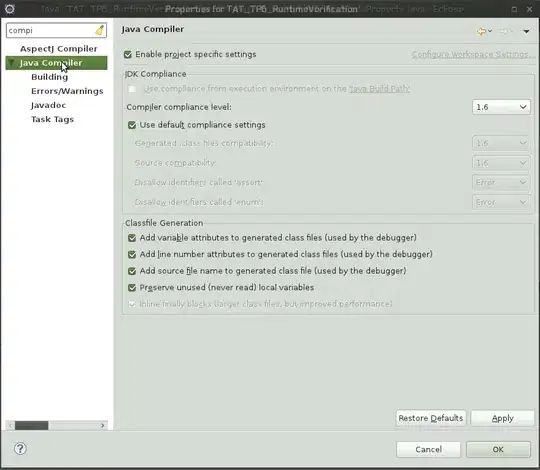

model_parts(explainer_xgb) %>% plot()

This gives me the following plot although even if I have reduced x1, x2 and x3 into one component in step_pca above.

I know I could reduce dimensions manually and bind it to the df like so and then look at the feature importance.

rec_pca_2 <- df %>%

select(x1, x2, x3) %>%

recipe() %>%

step_pca(all_numeric(), num_comp = 1)

df <- bind_cols(df, prep(rec_pca_2) %>% juice())

df

> df

# A tibble: 1,000 × 6

y x1 x2 x3 x4 PC1

<fct> <int> <int> <int> <int> <dbl>

1 2 0 2 4 2 -4.45

2 3 0 3 3 3 -3.95

3 0 0 2 4 4 -4.45

4 2 1 4 5 3 -6.27

5 4 0 1 5 2 -4.94

6 2 1 0 5 1 -4.63

7 3 2 2 5 4 -5.56

8 3 1 2 5 3 -5.45

9 2 1 3 5 2 -5.86

10 2 0 2 5 1 -5.35

# … with 990 more rows

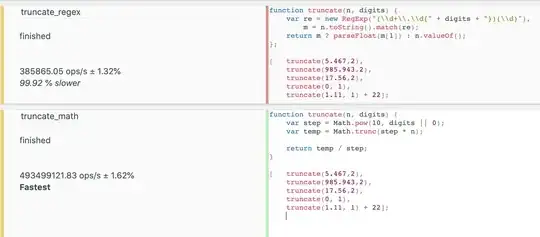

I could then estimate a model with PC1 as covariate. Yet, in that case, it would be difficult to interpret what the variation in PC1 substatial means when using model_profile since everything would be collapsed into one component.

model_profile(explainer_xgb) %>% plot()

Thus, my key question is: how can I look at the feature importance of components without compromising on the interpretability of the partial dependence plot?