I am trying to build a big dataframe using a function that takes some arguments and returns partial dataframes. I have a long list of documents to parse, extracting the relevant information that will go into the big dataframe, and I am trying to use `multiprocessing.Pool` to make the process faster.

My code looks like this:

```python
from multiprocessing import Pool, cpu_count

import pandas as pd

from settings import *

server_url = settings.SERVER_URL_UAT
username = settings.USERNAME
password = settings.PASSWORD


def wrapped_visit_detail(args):
    # Module-level names can be read here without `global`.
    # visit_detail (defined elsewhere) returns a dataframe after consuming args
    return visit_detail(server_url, args, username, password)


if __name__ == "__main__":
    # Trying to pass a list of arguments to wrapped_visit_detail
    visits_url = [doc1, doc2, doc3, doc4]

    with Pool(cpu_count()) as pool:
        # pool.map returns a list of dataframes, one per entry in visits_url
        df = pd.concat(pool.map(wrapped_visit_detail, visits_url), ignore_index=True)
```

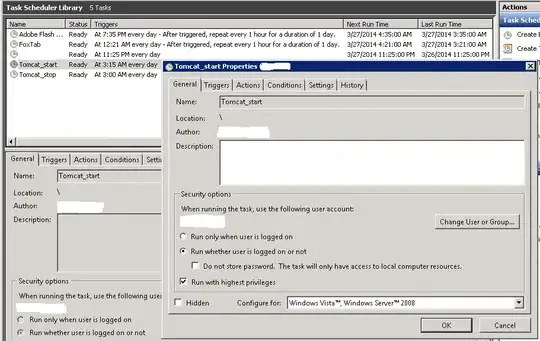

When I run this, I get this error:

```
multiprocessing.pool.MaybeEncodingError: Error sending result: '<multiprocessing.pool.ExceptionWithTraceback object at 0x7f2c88a43208>'. Reason: 'TypeError("can't pickle _thread._local objects",)'
```
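The message says that the worker raised an exception (wrapped in `ExceptionWithTraceback`) and that this exception could not be pickled to send it back to the parent process, because something inside it holds a `_thread._local` object. `Pool` moves arguments, return values and exceptions between processes with `pickle`, so everything crossing that boundary must be picklable. Calling `wrapped_visit_detail(visits_url[0])` directly, without the pool, should show the original exception. To locate the unpicklable piece, a small helper can try pickling candidates in a single process; `check_picklable` is a hypothetical name and the `threading.local()` argument is only an illustration of the kind of object involved:

```python
import pickle
import threading


def check_picklable(obj):
    """Return None if obj can be pickled, otherwise a short description of the error."""
    try:
        pickle.dumps(obj)
    except Exception as exc:  # usually TypeError or pickle.PicklingError
        return f"{type(exc).__name__}: {exc}"
    return None


if __name__ == "__main__":
    # An exception with plain arguments pickles fine, so Pool could send it back.
    print(check_picklable(ValueError("bad document")))  # None

    # An exception that keeps a reference to thread-local state (some client and
    # authentication objects store per-thread state this way) cannot be pickled,
    # which is the situation MaybeEncodingError reports.
    print(check_picklable(RuntimeError("request failed", threading.local())))
```

The same helper can be pointed at the arguments passed to `pool.map` and at a sample return value of `visit_detail`.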

EDIT

To illustrate my problem, I created this simple figure.

This serial approach is painfully slow and does not scale at all.

I would like the code to run in parallel as much as possible instead of serially.
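If most of the time goes into pulling the documents from the server rather than into CPU-heavy parsing, a thread pool may be worth trying instead of processes: threads share memory, so nothing (arguments, results, exceptions, or even a lambda) has to be pickled, and CPython releases the GIL while a thread waits on network I/O. This is a swapped-in technique (`concurrent.futures.ThreadPoolExecutor` in place of `Pool`), `fetch_and_parse` is a hypothetical stand-in for `visit_detail`, and whether it actually helps depends on where the time is spent:

```python
from concurrent.futures import ThreadPoolExecutor

import pandas as pd


def fetch_and_parse(server_url, doc, username, password):
    # Hypothetical stand-in for visit_detail(server_url, args, username, password):
    # a real version would download `doc` from `server_url` and parse it.
    return pd.DataFrame({"doc": [doc], "server": [server_url]})


def build_dataframe(docs, server_url, username, password, max_workers=8):
    # max_workers=8 is an arbitrary starting point; tune it for the server.
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # executor.map yields results in the same order as `docs`.
        # The lambda works here because threads never pickle the callable.
        frames = list(
            executor.map(
                lambda doc: fetch_and_parse(server_url, doc, username, password), docs
            )
        )
    return pd.concat(frames, ignore_index=True)


if __name__ == "__main__":
    df = build_dataframe(
        ["doc1", "doc2", "doc3", "doc4"], "https://example.invalid", "user", "secret"
    )
    print(df)
```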

Thank you all for your great comments so far. Yes, I am using shared variables as parameters to the function that pulls the files and extracts the individual dataframes, and that does indeed seem to be my issue.

I suspect something is wrong in the way I call `pool.map()`.
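For reference, `pool.map` returns a plain list with one result per input, in input order, so when each result is a DataFrame that list can be passed straight to `pd.concat`. Nesting it inside another list, e.g. `[df, results]`, hands `pd.concat` a list where it expects a DataFrame or Series, and it raises a `TypeError`. A sketch with a hypothetical `fake_visit_detail` in place of the real function:

```python
from multiprocessing import Pool, cpu_count

import pandas as pd


def fake_visit_detail(url):
    # Hypothetical stand-in for visit_detail: one small DataFrame per document.
    return pd.DataFrame({"url": [url], "n_chars": [len(url)]})


def combine(partial_frames):
    # partial_frames is the flat list returned by pool.map: [df_doc1, df_doc2, ...]
    return pd.concat(partial_frames, ignore_index=True)


if __name__ == "__main__":
    visits_url = ["doc1", "doc2", "doc3", "doc4"]
    with Pool(cpu_count()) as pool:
        df = combine(pool.map(fake_visit_detail, visits_url))
    print(df)
```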

Any tips would be very welcome.