Issue: I built a wheel from a very basic module and installed it on a Databricks cluster. When I create a job of type 'Python wheel', the job fails to run because it cannot find the package.

The setup is very simple. I have a source code folder:

    src
    |- app_1
       |- __init__.py
       |- main.py

Where main.py contains:

    def func():
        print('Hello world!')
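
For anyone trying to reproduce this, the layout above can be recreated locally with a minimal sketch like the following. It assumes a temporary directory stands in for the project root, and the helper names `make_layout` and `import_and_call` are hypothetical. It only checks that `func` is importable when `src` is on the import path; it does not build or install a wheel.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def make_layout(root: Path) -> Path:
    """Create src/app_1/{__init__.py, main.py} under root and return the src dir."""
    pkg = root / "src" / "app_1"
    pkg.mkdir(parents=True)
    (pkg / "__init__.py").write_text("")
    (pkg / "main.py").write_text("def func():\n    print('Hello world!')\n")
    return root / "src"


def import_and_call(src_dir: Path) -> None:
    """Put src_dir on sys.path, import app_1.main, and call func()."""
    sys.path.insert(0, str(src_dir))
    try:
        # Make sure the import system notices the freshly written files.
        importlib.invalidate_caches()
        module = importlib.import_module("app_1.main")
        module.func()
    finally:
        sys.path.remove(str(src_dir))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        import_and_call(make_layout(Path(tmp)))  # prints "Hello world!"
```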

Then, I do the following:

Build

srcas wheelsdemo-0.0.0-py3-none-any.whl.Install

demo-0.0.0-py3-none-any.whlin the Databricks cluster. I then validate that the wheel was built and installed correctly. I know this because I am able to runfrom app_1.main import func, then callingfuncsucceeds. This is the only wheel installed in the cluster.Create a job of type Python wheel, then set package name as

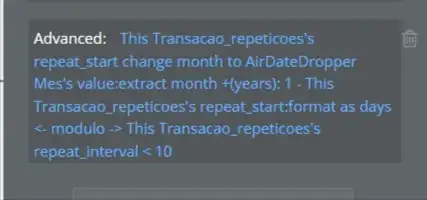

app_1and entrypoint asmain.func. When I run the job, I get an error thatapp_1cannot be found.
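
Note that the setup involves two different names: the distribution name taken from the wheel filename (`demo`) and the import package name (`app_1`). The following is a minimal diagnostic sketch, not a fix, that reports both from a notebook attached to the cluster. The helper name `describe_install` is hypothetical. Whether the job's package name field expects the distribution name or the import name is not established in this post; the sketch only makes the two names visible.

```python
import importlib.util
from importlib import metadata


def describe_install(dist_name: str, import_name: str) -> dict:
    """Report whether a distribution is installed and a top-level package is importable."""
    try:
        # Looks up the installed distribution by its metadata name (e.g. "demo").
        version = metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        version = None
    # find_spec returns None when a top-level package cannot be located.
    importable = importlib.util.find_spec(import_name) is not None
    return {
        "distribution": dist_name,
        "version": version,
        "import_name": import_name,
        "importable": importable,
    }


if __name__ == "__main__":
    # "demo" and "app_1" are the names used in this question.
    print(describe_install("demo", "app_1"))
```

If the wheel is installed as described, the returned `version` should be non-`None` for `demo` and `importable` should be `True` for `app_1`.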