I just had to run pg_restore on a small 30 MB dump, and it took 5 minutes on average! On my colleagues' computers it is very fast, about a dozen seconds. The difference between the two is CPU usage: on their machines, the database uses a fair amount of CPU (60-70%) during the restore, while on mine it stays at only a few percent (0-3%), as if it were not active at all.

The exact command was: `pg_restore -h 127.0.0.1 --username XXX --dbname test --no-comments test_dump.sql`

The command that produced this dump was: `pg_dump --dbname=XXX --user=XXX --no-owner --no-privileges --verbose --format=custom --file=/sql/test_dump.sql`

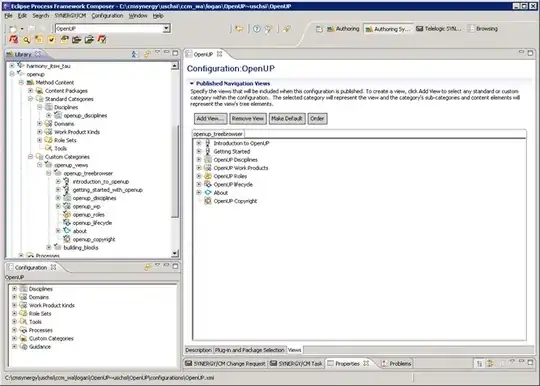

Look at the screenshot taken in the middle of the restore operation:

Here is the corresponding `vmstat 1` output while the command was running:

I searched the web for a solution for a few hours, but this CPU under-usage remains quite mysterious. Any ideas would be appreciated.

As for the stack, I am on Ubuntu 20.04, and PostgreSQL 13.6 is running in a Docker container. My hardware is decent, neither bad nor great.

EDIT: This very same command worked in the past on my machine, with the same ordinary HDD, but now it is terribly slow. The only difference I could see compared with the others (for whom it is blazing fast) was the CPU usage. They do have SSDs, but that shouldn't be the limiting factor at all, especially with a 30 MB dump.

EDIT 2: For those who suggested that the restore is I/O-bound, perhaps because of a slow disk: I tried, without much conviction, running my command on an SSD partition I had just created, and nothing changed.