I am a bit annoyed that ENTSO-E only provides XML responses and no JSON format for its API. Does anyone have experience with converting text/xml responses to data frames in R? I usually use

fromJSON(rawToChar(response$content))

from the jsonlite and httr libraries. So far I have never had to convert XML. I tried a few things from the XML library, but with no success. All the tutorials I found focus on parsing XML files from the web. In my case, I only want to convert the raw content of the API response.
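The XML counterpart of that JSON one-liner is to hand the same raw bytes to `xml2::read_xml()`. A minimal sketch, where a small made-up XML string converted with `charToRaw()` stands in for `response$content` (an assumption, since the real request needs a private token):

```r
library(xml2)

# Hypothetical stand-in for response$content: raw bytes of a tiny XML body
raw_content <- charToRaw("<doc><value>1.5</value><value>2.5</value></doc>")

# XML analogue of fromJSON(rawToChar(response$content))
doc <- read_xml(rawToChar(raw_content))

# Collect the text of every <value> element and convert it to numbers
values <- as.numeric(xml_text(xml_find_all(doc, "//value")))
print(values)
```

Unlike `fromJSON()`, `read_xml()` returns a document object rather than a data frame, so the values still have to be selected (here with an XPath query) before they become vectors or columns.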

The response looks like this:

Response [the.api.I.used] Date: 2022-03-10 17:53 Status: 200 Content-Type: text/xml Size: 1.09 MB

If I use

content(response, as = "text")

I get this:

So the data contains fields such as position and quantity.

If I pass this result to

xmlParse(content(response, as = "text"))

I get this output:

How do I make a data frame out of it? Is there a function similar to fromJSON for XML? If you need a minimal working example, I can provide one with a public API, but this particular example requires a private token.
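One way to get a data frame is to select every point with XPath and read its children column by column. The sketch below runs on a heavily simplified, hypothetical document: the element names `Period`, `Point`, `position` and `quantity` follow the question, while the namespace URI is invented. It assumes the real document declares a default namespace, which is why `xml_ns_strip()` is called before using plain XPath.

```r
library(xml2)

# Hypothetical, simplified document; the namespace URI is made up
xml_string <- '<GL_MarketDocument xmlns="urn:example:hypothetical">
  <TimeSeries>
    <Period>
      <Point><position>1</position><quantity>100</quantity></Point>
      <Point><position>2</position><quantity>110</quantity></Point>
      <Point><position>3</position><quantity>95</quantity></Point>
    </Period>
  </TimeSeries>
</GL_MarketDocument>'

doc <- read_xml(xml_string)

# Remove the default namespace in place so that //Point matches
xml_ns_strip(doc)

# One row per <Point>; xml_find_first() searches relative to each Point node
points <- xml_find_all(doc, "//Point")
df <- data.frame(
  position = as.integer(xml_text(xml_find_first(points, "./position"))),
  quantity = as.numeric(xml_text(xml_find_first(points, "./quantity")))
)
print(df)
```

Using `xml_find_first()` per point keeps `position` and `quantity` aligned row by row: a point missing one of them would yield `NA` rather than shifting the remaining values.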

Thanks and best, Johannes

Solution:

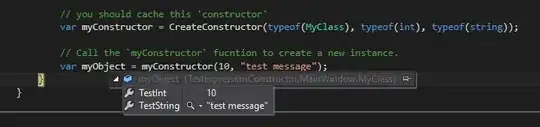

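```r
# `url` is the ENTSO-E request URL, including the private security token
response_example <- httr::GET(url)
# httr parses a text/xml body into an xml2 document
content_example <- httr::content(response_example, encoding = "UTF-8")
# Convert the XML document into a nested R list
content_list_example <- xml2::as_list(content_example)
# "GL_MarketDocument" and "TimeSeries" are specific to my API response
timeseries_example <- content_list_example$GL_MarketDocument[names(content_list_example$GL_MarketDocument) == "TimeSeries"]
# Extract the quantity of every point in the (first) TimeSeries' Period
values <- as.numeric(unlist(purrr::map(timeseries_example$TimeSeries$Period, "quantity")))
```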
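The last step above keeps only the quantities. A sketch that extends the same `as_list()` + `purrr::map()` idea to a data frame that also holds the positions, run on a small made-up document instead of the real response (the namespace-free XML below is an assumption for illustration):

```r
library(xml2)
library(purrr)

# Hypothetical stand-in for the API response, using the element names above
xml_string <- "<GL_MarketDocument>
  <TimeSeries>
    <Period>
      <Point><position>1</position><quantity>100</quantity></Point>
      <Point><position>2</position><quantity>110</quantity></Point>
    </Period>
  </TimeSeries>
</GL_MarketDocument>"

content_list <- as_list(read_xml(xml_string))
period <- content_list$GL_MarketDocument$TimeSeries$Period

# Keep only the <Point> children, then pluck position and quantity from each
points <- period[names(period) == "Point"]
df <- data.frame(
  position = as.integer(unlist(map(points, "position"))),
  quantity = as.numeric(unlist(map(points, "quantity")))
)
print(df)
```

Filtering on `names(period) == "Point"` first means other children of `Period` are skipped instead of producing `NULL` entries that `unlist()` silently drops.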