I would like to harness the speed and power of Apache Kafka to replace REST calls in my Java application.

My app has the following architecture:

Producer P1 writes a search query to the topic `search`.

Consumer C1 consumes the search query, produces search results, and writes them to another topic, `search_results`.
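The two-stage flow above can be sketched without a broker as a minimal in-memory simulation, assuming each topic is stood in for by a plain queue. The `Message` record, the query-ID key, and the `p1Send`/`c1ProcessOne` helpers are hypothetical illustrations, not Kafka's API; in a real deployment the queues would be replaced by `KafkaProducer`/`KafkaConsumer` instances (records require Java 16+).

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

public class SearchPipelineSketch {
    // Hypothetical message shape: a key (here, a query ID) plus a payload.
    record Message(String key, String value) {}

    // In-memory stand-ins for the Kafka topics "search" and "search_results".
    static final Queue<Message> SEARCH = new ConcurrentLinkedQueue<>();
    static final Queue<Message> SEARCH_RESULTS = new ConcurrentLinkedQueue<>();

    // Producer P1: publishes a query keyed by its (hypothetical) query ID.
    static void p1Send(String queryId, String query) {
        SEARCH.add(new Message(queryId, query));
    }

    // Consumer C1: takes one pending query, "searches", and writes the result
    // to search_results under the same key. Returns false if nothing was waiting.
    static boolean c1ProcessOne() {
        Message q = SEARCH.poll();
        if (q == null) {
            return false;
        }
        String results = "results for '" + q.value() + "'"; // placeholder search logic
        SEARCH_RESULTS.add(new Message(q.key(), results));
        return true;
    }

    public static void main(String[] args) {
        p1Send("q-1", "kafka request reply");
        c1ProcessOne();
        Message r = SEARCH_RESULTS.poll();
        System.out.println(r.key() + " -> " + r.value());
    }
}
```

Keying the result with the same query ID keeps request and response correlatable, but on its own it says nothing about *which* P1 instance ends up reading `search_results`, which is exactly the problem described below.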

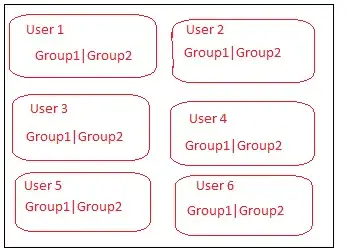

Both Producer P1 and Consumer C1 belong to groups of producers/consumers running on different physical machines. I need the server running Producer P1 to be the one that consumes the search results produced by Consumer C1, so that it can return them to the client that submitted the search query.

The above example is simplified for demonstration purposes. In reality the process involves several additional intermediate producers and consumers, and the query may be passed between multiple servers to be processed. The main point is that the value produced by the last producer needs to be consumed by the first producer.

In a typical Apache Kafka architecture, there is no way to ensure that the final output is read by the server that originally produced the search query, because multiple servers read from the same topic.
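To make the problem concrete: within a consumer group, each partition of `search_results` is owned by exactly one member, and a record's partition is chosen from its key, not from which server sent the original query. The sketch below assumes 6 partitions, 3 P1 instances, and a simplified round-robin assignment (partition `p` goes to instance `p % n`, roughly what Kafka's `RoundRobinAssignor` does for a single topic). It uses `String.hashCode()` as a stand-in for Kafka's default partitioner, which actually hashes the serialized key with murmur2. The class and method names are hypothetical.

```java
public class ResultOwnershipSketch {
    // Assumed partition count of the search_results topic.
    static final int PARTITIONS = 6;

    // Simplified stand-in for the default partitioner: hash of the key modulo
    // the partition count. (Kafka really uses murmur2 over the serialized key.)
    static int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), PARTITIONS);
    }

    // Simplified round-robin assignment: partition p is owned by instance p % instances.
    static int ownerOf(int partition, int instances) {
        return partition % instances;
    }

    public static void main(String[] args) {
        int instances = 3;
        // Each query ID records which P1 instance received it from the client,
        // but nothing in the partitioning or assignment path looks at that origin.
        for (int origin = 0; origin < instances; origin++) {
            String queryId = "p1-" + origin + "-query-42";
            int partition = partitionFor(queryId);
            int reader = ownerOf(partition, instances);
            System.out.printf("%s: sent by P1-%d, result read by P1-%d%n",
                    queryId, origin, reader);
        }
    }
}
```

Whether the reader matches the origin is a matter of hash and assignment luck, and any rebalance (for example, when a new P1 instance joins) can move partitions to different owners.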

I do not want to use REST for this purpose because it is very slow when processing thousands of queries. Apache Kafka can handle millions of queries with 10 millisecond latency. In my particular use case it is critical that the query is transmitted with sub-millisecond speed.

Scaling with REST is also much more difficult. Suppose our traffic increases and we need to add a dozen more servers to handle client queries. With Apache Kafka, it's as simple as starting the new servers and adding them to the Producer P1 group; with REST it is not so simple. Apache Kafka also provides a much higher degree of decoupling than REST does.

What design or architecture can be used to force a specific server/producer to consume the end result of its initial query?

Thanks