I have source (src) image(s) I wish to align to a destination (dst) image using an Affine Transformation whilst retaining the full extent of both images during alignment (even the non-overlapping areas).

I am already able to calculate the Affine Transformation rotation and offset matrix, which I feed to scipy.ndimage.interpolate.affine_transform to recover the dst-aligned src image.

The problem is that, when the images are not fuly overlapping, the resultant image is cropped to only the common footprint of the two images. What I need is the full extent of both images, placed on the same pixel coordinate system. This question is almost a duplicate of this one - and the excellent answer and repository there provides this functionality for OpenCV transformations. I unfortunately need this for scipy's implementation.

Much too late, after repeatedly hitting a brick wall trying to translate the above question's answer to scipy, I came across this issue and subsequently followed to this question. The latter question did give some insight into the wonderful world of scipy's affine transformation, but I have as yet been unable to crack my particular needs.

The transformations from src to dst can have translations and rotation. I can get translations only working (an example is shown below) and I can get rotations only working (largely hacking around the below and taking inspiration from the use of the reshape argument in scipy.ndimage.interpolation.rotate). However, I am getting thoroughly lost combining the two. I have tried to calculate what should be the correct offset (see this question's answers again), but I can't get it working in all scenarios.

Translation-only working example of padded affine transformation, which follows largely this repo, explained in this answer:

from scipy.ndimage import rotate, affine_transform

import numpy as np

import matplotlib.pyplot as plt

nblob = 50

shape = (200, 100)

buffered_shape = (300, 200) # buffer for rotation and translation

def affine_test(angle=0, translate=(0, 0)):

np.random.seed(42)

# Maxiumum translation allowed is half difference between shape and buffered_shape

# Generate a buffered_shape-sized base image with random blobs

base = np.zeros(buffered_shape, dtype=np.float32)

random_locs = np.random.choice(np.arange(2, buffered_shape[0] - 2), nblob * 2, replace=False)

i = random_locs[:nblob]

j = random_locs[nblob:]

for k, (_i, _j) in enumerate(zip(i, j)):

# Use different values, just to make it easier to distinguish blobs

base[_i - 2 : _i + 2, _j - 2 : _j + 2] = k + 10

# Impose a rotation and translation on source

src = rotate(base, angle, reshape=False, order=1, mode="constant")

bsc = (np.array(buffered_shape) / 2).astype(int)

sc = (np.array(shape) / 2).astype(int)

src = src[

bsc[0] - sc[0] + translate[0] : bsc[0] + sc[0] + translate[0],

bsc[1] - sc[1] + translate[1] : bsc[1] + sc[1] + translate[1],

]

# Cut-out destination from the centre of the base image

dst = base[bsc[0] - sc[0] : bsc[0] + sc[0], bsc[1] - sc[1] : bsc[1] + sc[1]]

src_y, src_x = src.shape

def get_matrix_offset(centre, angle, scale):

"""Follows OpenCV.getRotationMatrix2D"""

angle = angle * np.pi / 180

alpha = scale * np.cos(angle)

beta = scale * np.sin(angle)

return (

np.array([[alpha, beta], [-beta, alpha]]),

np.array(

[

(1 - alpha) * centre[0] - beta * centre[1],

beta * centre[0] + (1 - alpha) * centre[1],

]

),

)

# Obtain the rotation matrix and offset that describes the transformation

# between src and dst

matrix, offset = get_matrix_offset(np.array([src_y / 2, src_x / 2]), angle, 1)

offset = offset - translate

# Determine the outer bounds of the new image

lin_pts = np.array([[0, src_x, src_x, 0], [0, 0, src_y, src_y]])

transf_lin_pts = np.dot(matrix.T, lin_pts) - offset[::-1].reshape(2, 1)

# Find min and max bounds of the transformed image

min_x = np.floor(np.min(transf_lin_pts[0])).astype(int)

min_y = np.floor(np.min(transf_lin_pts[1])).astype(int)

max_x = np.ceil(np.max(transf_lin_pts[0])).astype(int)

max_y = np.ceil(np.max(transf_lin_pts[1])).astype(int)

# Add translation to the transformation matrix to shift to positive values

anchor_x, anchor_y = 0, 0

if min_x < 0:

anchor_x = -min_x

if min_y < 0:

anchor_y = -min_y

shifted_offset = offset - np.dot(matrix, [anchor_y, anchor_x])

# Create padded destination image

dst_h, dst_w = dst.shape[:2]

pad_widths = [anchor_y, max(max_y, dst_h) - dst_h, anchor_x, max(max_x, dst_w) - dst_w]

dst_padded = np.pad(

dst,

((pad_widths[0], pad_widths[1]), (pad_widths[2], pad_widths[3])),

"constant",

constant_values=-1,

)

dst_pad_h, dst_pad_w = dst_padded.shape

# Create the aligned and padded source image

source_aligned = affine_transform(

src,

matrix.T,

offset=shifted_offset,

output_shape=(dst_pad_h, dst_pad_w),

order=3,

mode="constant",

cval=-1,

)

# Plot the images

fig, axes = plt.subplots(1, 4, figsize=(10, 5), sharex=True, sharey=True)

axes[0].imshow(src, cmap="viridis", vmin=-1, vmax=nblob)

axes[0].set_title("Source")

axes[1].imshow(dst, cmap="viridis", vmin=-1, vmax=nblob)

axes[1].set_title("Dest")

axes[2].imshow(source_aligned, cmap="viridis", vmin=-1, vmax=nblob)

axes[2].set_title("Source aligned to Dest padded")

axes[3].imshow(dst_padded, cmap="viridis", vmin=-1, vmax=nblob)

axes[3].set_title("Dest padded")

plt.show()

e.g.:

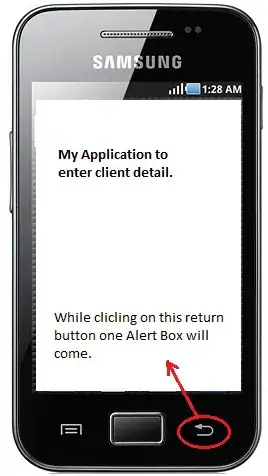

affine_test(0, (-20, 40))

gives:

With a zoom in showing the aligned in the padded images:

I require the full extent of the src and dst images aligned on the same pixel coordinates, with both rotations and translations.

Any help is greatly appreciated!