I have a question about the performance of openpyxl when reading files.

I am trying to read a single xlsx file in parallel using ProcessPoolExecutor. The file may have 500,000 to 800,000 rows.

In read-only mode, when I call sheet.iter_rows() without ProcessPoolExecutor and read the entire worksheet, it takes about 1s to process 10,000 rows of data. But when I set the min_row and max_row parameters and split the work across ProcessPoolExecutor, the timings look different:

    totalRows: 200,000
    1 ~ 10000 take 1.03s
    10001 ~ 20000 take 1.73s
    20001 ~ 30000 take 2.41s
    30001 ~ 40000 take 3.27s
    40001 ~ 50000 take 4.06s
    50001 ~ 60000 take 4.85s
    60001 ~ 70000 take 5.93s
    70001 ~ 80000 take 6.64s
    80001 ~ 90000 take 7.72s
    90001 ~ 100000 take 8.18s
    100001 ~ 110000 take 9.42s
    110001 ~ 120000 take 10.04s
    120001 ~ 130000 take 10.61s
    130001 ~ 140000 take 11.17s
    140001 ~ 150000 take 11.52s
    150001 ~ 160000 take 12.48s
    160001 ~ 170000 take 12.52s
    170001 ~ 180000 take 13.01s
    180001 ~ 190000 take 13.25s
    190001 ~ 200000 take 13.46s
    total: take 33.54s

Looking at each process on its own, the time consumed is indeed less than a full single-process read. But the overall time has increased, and the later the row range starts, the more time that process takes. Reading all 200,000 rows with a single process only takes about 20s.

I'm not very familiar with iterators and haven't looked closely at the openpyxl source code. Judging from the timings, it seems that even when a range is set, the iterator still has to start processing from row 1. I don't know whether this is actually the case.
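
To make that guess concrete, here is a toy model of what I suspect is happening. It is not openpyxl's actual code: `parse_rows` and `read_range` are made-up names, and the only assumption is that rows can be produced strictly in order, so reaching `min_row` means walking past every earlier row first.

```python
from itertools import islice


def parse_rows(total_rows, counter):
    """Toy stand-in for a streaming parser: rows only come out in order."""
    for row_number in range(1, total_rows + 1):
        counter[0] += 1  # count every row the "parser" has to touch
        yield row_number


def read_range(total_rows, min_row, max_row):
    """Return the requested rows and how many rows were parsed to get them."""
    counter = [0]
    stream = parse_rows(total_rows, counter)
    # islice has to consume and discard rows 1 .. min_row - 1 before yielding.
    rows = list(islice(stream, min_row - 1, max_row))
    return rows, counter[0]


first_rows, first_parsed = read_range(200_000, 1, 10_000)
last_rows, last_parsed = read_range(200_000, 190_001, 200_000)
print(len(first_rows), first_parsed)
print(len(last_rows), last_parsed)
```

In this model both calls return 10,000 rows, but the last range has to walk through all 200,000 rows to get them. If the real iterator behaves the same way, that would match the pattern in my timings, where later ranges take longer.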

I'm not a professional programmer, so if you happen to have relevant experience, please keep the explanation as simple as possible.

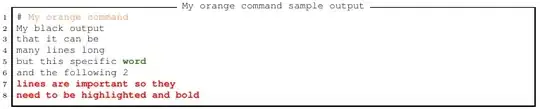

Here is the code:

    import openpyxl
    from time import perf_counter
    from concurrent.futures import ProcessPoolExecutor


    def read(file, minRow, maxRow):
        # Each worker opens its own read-only workbook and reads one row range.
        start = perf_counter()
        book = openpyxl.load_workbook(filename=file, read_only=True, keep_vba=False, data_only=True, keep_links=False)
        sheet = book.worksheets[0]
        val = [[cell.value for cell in row] for row in sheet.iter_rows(min_row=minRow, max_row=maxRow)]
        book.close()
        end = perf_counter()
        print(f'{minRow} ~ {maxRow}', 'take {0:.2f}s'.format(end-start))
        return val


    def parallel(file: str, rowRanges: list[tuple]):
        futures = []
        with ProcessPoolExecutor(max_workers=6) as pool:
            for minRow, maxRow in rowRanges:
                futures.append(pool.submit(read, file, minRow, maxRow))
        # Leaving the with block waits for every submitted task to finish.
        return futures


    if __name__ == '__main__':
        file = '200000.xlsx'
        start = perf_counter()
        # getRowRanges is not shown here; it returns the (minRow, maxRow) tuples.
        tasks = getRowRanges(file)
        parallel(file, tasks)
        end = perf_counter()
        print('total: take {0:.2f}s'.format(end-start))
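
The code above calls `getRowRanges`, which I did not include. As a rough sketch of how the ranges in my output could be produced, the hypothetical helper below (`make_row_ranges` is a made-up name, and unlike my real function it takes the row count and chunk size directly instead of the file) splits rows 1..total_rows into inclusive chunks:

```python
def make_row_ranges(total_rows, chunk_size):
    """Split rows 1..total_rows into inclusive (min_row, max_row) tuples."""
    return [
        (start, min(start + chunk_size - 1, total_rows))
        for start in range(1, total_rows + 1, chunk_size)
    ]


ranges = make_row_ranges(200_000, 10_000)
print(len(ranges), ranges[0], ranges[-1])
```

With 200,000 rows and a chunk size of 10,000 this gives 20 ranges, from (1, 10000) to (190001, 200000), which are the ranges shown in the timings.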